Large language models (LLMs) used to be brilliant talkers with no hands. They could explain how to change a tire, describe the weather in Tokyo, or even write a poem about your cat, but they couldn’t actually do any of it. That changed in 2023 when OpenAI introduced function calling. Suddenly, LLMs could reach out, grab real-time data, run calculations, update databases, and trigger actions, all while keeping the conversation natural. This isn’t science fiction. It’s what’s powering customer service bots, financial dashboards, and internal tools at companies today.

What Function Calling Actually Does

Function calling lets an LLM decide when it needs help from the outside world. Instead of guessing a stock price from its 2023 training data, it can ask a financial API: "What’s Apple’s current stock price?" Then it gets back a clean number and says, "Apple is trading at $198.45 as of today." No hallucinations. No outdated info. Just facts.

The model doesn’t run the code itself. It doesn’t connect to databases or call APIs directly. It just outputs a structured JSON message like this:

{
  "name": "get_stock_price",
  "arguments": "{\"symbol\": \"AAPL\"}"
}

Your application reads that JSON, runs the function, gets the result, and feeds it back to the model. The LLM then uses that result to give a final answer. It’s like having a very smart assistant who knows when to ask you for help, and exactly how to ask.
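
To make that round trip concrete, here is a minimal sketch of the application side in Python. The `get_stock_price` stub, its hard-coded price, and the `FUNCTIONS` registry are assumptions made up for illustration; a real version would call an actual financial API and then send the result back to the model through the vendor’s SDK.

```python
import json

def get_stock_price(symbol: str) -> dict:
    """Hypothetical stand-in for a real financial API call."""
    prices = {"AAPL": 198.45}  # hard-coded so the sketch runs offline
    return {"symbol": symbol, "price": prices[symbol]}

# Registry mapping the function names the model may emit to Python callables.
FUNCTIONS = {"get_stock_price": get_stock_price}

def dispatch(call_json: str) -> str:
    """Run the function named in the model's JSON and return the result as JSON."""
    call = json.loads(call_json)
    func = FUNCTIONS[call["name"]]
    # "arguments" is itself a JSON-encoded string, so it needs a second decode.
    args = json.loads(call["arguments"])
    return json.dumps(func(**args))

# The structured message from the example above, as the model would emit it.
model_output = '{"name": "get_stock_price", "arguments": "{\\"symbol\\": \\"AAPL\\"}"}'
result = dispatch(model_output)
print(result)  # this string is what gets fed back to the model
```

Note the double decode: in this message shape the arguments arrive as a string of JSON inside the outer JSON object.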

How Major Models Handle It Differently

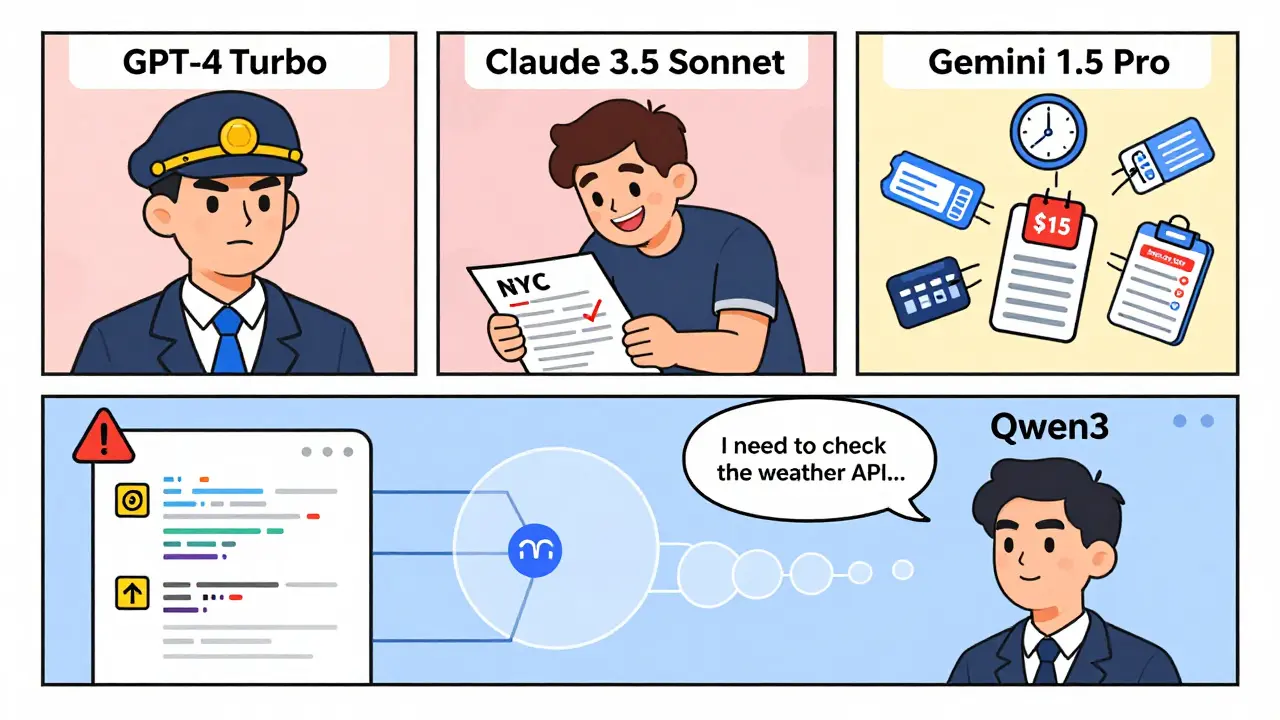

Not all function calling is built the same. Each big player has its own twist.

OpenAI’s GPT-4 Turbo is the most widely used. It demands precision. If you forget a required parameter or spell a field wrong, the call fails. No second chances. That’s why 53% of developer complaints on GitHub focus on parameter validation. But it’s also why it works so well in enterprise settings: when it works, it’s rock solid. It integrates with over 2,400 third-party tools, from Salesforce to Stripe.

Claude 3.5 Sonnet by Anthropic is the opposite. It’s forgiving. If you say, "Tell me about the weather in NYC," and your function expects "city_name," Claude can still figure out you meant "New York City." It gets 94.3% accuracy on messy inputs. That’s huge for consumer apps where users don’t talk like API docs. But it only supports about 850 integrations, less than half of OpenAI’s.

Google’s Gemini 1.5 Pro is the slowest but the smartest at multi-step tasks. If you ask, "Find flights from Chicago to Seattle next week under $400, then book the cheapest one," Gemini breaks it into steps automatically. It’s 34% better than OpenAI at chaining tools together. But each step adds 150-300ms of delay. For real-time chat, that’s noticeable.

Alibaba’s Qwen3 does something unique: it shows its thinking. Before calling a function, it writes out, "I need to check the weather API because the user asked about tomorrow’s forecast." That transparency builds trust. In Alibaba’s tests, users were 63% more likely to believe the result. But outside China, adoption is low. Only 15% of English-speaking devs use it, per Stack Overflow’s 2025 survey.

Where It Works Best (and Where It Fails)

Function calling shines in three areas:

- Real-time data: Stock prices, weather, live sports scores, news updates.

- Complex calculations: Tax estimates, mortgage payments, currency conversions.

- Database actions: Updating customer records, pulling order history, scheduling appointments.

Companies using it for customer service report 53% faster resolution times. One startup cut its support tickets by 70% by letting the AI pull order details from Shopify and refund amounts from Stripe, without human help.

But it fails hard when:

- There’s no API for the needed data (like medical diagnoses without a licensed clinical system).

- The user’s request is vague. "Tell me something interesting" doesn’t map to any function.

- The model gets stuck in a loop. "Check the weather. Then check it again. Then check it again..."

Johns Hopkins found a 41% error rate on medical queries without proper tool integration. That’s not a glitch. It’s a danger. LLMs aren’t doctors. They’re translators between people and systems.

What Developers Actually Struggle With

You can’t just plug in function calling and expect magic. Most devs spend 20-40 hours debugging it.

The top three headaches:

- Ambiguous parameters: "Book a flight for John." Which John? Which airport? The model doesn’t know. You need follow-up prompts.

- Failed calls: What if the API is down? What if the user’s credit card expired? 43% of developers say they struggle with graceful fallbacks.

- Validation errors: One missing comma in the JSON? The whole call dies. OpenAI’s strictness is a double-edged sword.
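
One way to soften the first and third headaches is to check the model’s arguments before running anything, then turn each problem into a follow-up question or a retry. The sketch below is a hand-rolled check under stated assumptions: `BOOK_FLIGHT_SCHEMA` and its parameter names are hypothetical, and it covers only required fields and a few basic types, not the full JSON Schema vocabulary.

```python
import json

# Hypothetical schema for a flight-booking function, written as JSON Schema.
BOOK_FLIGHT_SCHEMA = {
    "type": "object",
    "properties": {
        "passenger_name": {"type": "string"},
        "origin": {"type": "string"},
        "destination": {"type": "string"},
    },
    "required": ["passenger_name", "origin", "destination"],
}

# Maps a handful of JSON Schema type names to Python types (deliberately partial).
PYTHON_TYPES = {"string": str, "number": (int, float), "integer": int, "boolean": bool}

def check_arguments(raw_arguments: str, schema: dict) -> list[str]:
    """Return a list of readable problems; an empty list means the call looks valid."""
    try:
        args = json.loads(raw_arguments)
    except json.JSONDecodeError as exc:
        return [f"arguments are not valid JSON: {exc.msg}"]
    if not isinstance(args, dict):
        return ["arguments must be a JSON object"]
    problems = []
    for name in schema.get("required", []):
        if name not in args:
            problems.append(f"missing required parameter: {name}")
    for name, value in args.items():
        spec = schema["properties"].get(name)
        if spec is None:
            problems.append(f"unknown parameter: {name}")
        elif not isinstance(value, PYTHON_TYPES[spec["type"]]):
            problems.append(f"parameter {name} should be of type {spec['type']}")
    return problems

# "Book a flight for John" with no airports: two follow-up questions are needed.
print(check_arguments('{"passenger_name": "John"}', BOOK_FLIGHT_SCHEMA))
```

Returning a list instead of raising on the first error lets the application ask for everything that is missing in a single follow-up prompt.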

Stack Overflow’s 2025 survey showed 78% of developers spent over 20 hours fixing function calling issues. Half of that time? Just fixing parameter typos.

Best practice? Use four-shot prompting. Show the model four clear examples of how to call your functions. Pan et al.’s 2024 research found that’s the sweet spot-more examples don’t help, fewer don’t work.
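
As a rough illustration of four-shot prompting, the sketch below assembles a prompt from four example pairs. The role/content message shape is a common chat convention assumed here, and the example requests, the `get_stock_price` function, and `build_messages` are all hypothetical; some vendors may prefer examples in the system prompt or in the function description instead.

```python
import json

# Four hypothetical (user request, expected call) pairs for one function.
EXAMPLES = [
    ("What's Apple trading at?", {"name": "get_stock_price", "arguments": {"symbol": "AAPL"}}),
    ("Price of Microsoft stock?", {"name": "get_stock_price", "arguments": {"symbol": "MSFT"}}),
    ("How is TSLA doing today?", {"name": "get_stock_price", "arguments": {"symbol": "TSLA"}}),
    ("Give me a quote for Amazon.", {"name": "get_stock_price", "arguments": {"symbol": "AMZN"}}),
]

def build_messages(system_prompt: str, examples: list, user_request: str) -> list[dict]:
    """Interleave the examples as user/assistant turns ahead of the real request."""
    messages = [{"role": "system", "content": system_prompt}]
    for question, call in examples:
        messages.append({"role": "user", "content": question})
        messages.append({"role": "assistant", "content": json.dumps(call)})
    messages.append({"role": "user", "content": user_request})
    return messages

messages = build_messages(
    "Reply with a JSON function call when live data is needed.",
    EXAMPLES,
    "What is Nvidia's share price?",
)
print(len(messages))  # one system turn, eight example turns, one real request
```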

Getting Started: The Four Steps

If you’re ready to try it:

- Define your functions: Use JSON Schema. List the name, description, and exact parameters. Be specific. "get_user_email" isn’t enough. "get_user_email(user_id: string)" is.

- Build the router: Write a small piece of code that listens for the model’s JSON output, runs the matching function, and returns the result.

- Handle errors: Plan for failures. If the API is down, tell the user: "I couldn’t pull your order history right now. Try again in a minute." Don’t let silence confuse them.

- Design the flow: Will the model ask for clarification? Will it retry failed calls? Will it limit loops to 5 steps? Build these rules in.
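
The four steps above can be sketched together in a few dozen lines. Everything here is illustrative: `ask_model` is a placeholder for whatever vendor SDK call you use, `fake_model` is a scripted stand-in so the sketch runs offline, and the message dictionaries follow an assumed generic shape, not any specific vendor’s format.

```python
import json

MAX_STEPS = 5  # loop cap from the "design the flow" step

# Step 1: a function definition with a JSON Schema parameter block.
# In real use this would be sent to the model alongside the conversation.
GET_USER_EMAIL = {
    "name": "get_user_email",
    "description": "Look up the email address for a user ID.",
    "parameters": {
        "type": "object",
        "properties": {"user_id": {"type": "string"}},
        "required": ["user_id"],
    },
}

def get_user_email(user_id: str) -> dict:
    """Hypothetical lookup; a real one would query a database."""
    return {"email": f"{user_id}@example.com"}

HANDLERS = {"get_user_email": get_user_email}

def run_conversation(ask_model, user_message: str) -> str:
    """Steps 2-4: route calls, report errors back, and stop after MAX_STEPS."""
    history = [{"role": "user", "content": user_message}]
    for _ in range(MAX_STEPS):
        reply = ask_model(history)
        if "name" not in reply:
            return reply["content"]  # plain answer, no function requested
        try:
            result = HANDLERS[reply["name"]](**json.loads(reply["arguments"]))
        except Exception as exc:  # unknown function, bad JSON, or a failing API
            result = {"error": str(exc)}
        history.append({"role": "function", "name": reply["name"], "content": json.dumps(result)})
    # The cap was hit: tell the user instead of looping forever.
    return "I couldn't finish that request. Try again in a minute."

def fake_model(history: list) -> dict:
    """Scripted stand-in for an LLM: request one call, then answer from its result."""
    if history[-1]["role"] == "function":
        return {"content": "The email is " + json.loads(history[-1]["content"])["email"]}
    return {"name": "get_user_email", "arguments": json.dumps({"user_id": "u42"})}

print(run_conversation(fake_model, "What's the email for user u42?"))
```

Errors are passed back to the model as a result so it can apologize or ask for clarification, while the step cap guarantees the loop ends even if the model keeps requesting the same call.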

Documentation matters. OpenAI’s guides are detailed but skip real-world failures. Qwen’s docs are better for debugging. If you’re learning, start with Qwen’s examples. They show what goes wrong and how to fix it.

The Bigger Picture: Why This Matters

Function calling isn’t a feature. It’s infrastructure. Gartner says 78% of enterprises using LLMs now rely on it. The market for this tech hit $2.4 billion in 2025 and is on track to hit $9.7 billion by 2028.

Why? Because it fixes the biggest weakness of LLMs: their ignorance of the real world. Without function calling, they’re like a librarian who remembers every book ever written but can’t go to the shelf to fetch one.

But there are risks. Dr. Percy Liang at Stanford found 37% of implementations are vulnerable to parameter injection attacks. A hacker could slip in malicious code disguised as a user request. Always validate inputs. Never trust the model’s output blindly.

And as Dr. Emily Bender warns, function calling creates an illusion. The model isn’t understanding your request. It’s pattern-matching. It’s mimicking the behavior of someone who knows how to use tools. That’s fine for customer service. It’s dangerous for legal or medical advice.

Still, Pieter Abbeel at UC Berkeley calls it "the missing link between language and action." And he’s right. When an LLM can update your calendar, check your inventory, or send a payment, suddenly it’s not just a chatbot. It’s a teammate.

What’s Next?

The next wave is automation. OpenAI’s GPT-5, released in October 2025, uses "adaptive parameter validation": it learns from past mistakes and adjusts. Claude 3.5 now chains tools automatically. Google’s new "tool grounding" checks if the function result matches the model’s internal knowledge. If they conflict, it flags it.

By 2027, Forrester predicts 92% of enterprise LLMs will use function calling. But standardization is a mess. Every vendor has its own schema. That’s why companies like Apideck are building unified API layers, so you don’t have to rewrite your code for every new model.
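
To show what a thin unification layer might look like, the sketch below keeps one neutral definition and wraps it per vendor. The two target shapes reflect the tool formats of OpenAI’s and Anthropic’s chat APIs as commonly documented, but treat them as assumptions and check the current vendor references before relying on them; `NEUTRAL_DEFINITION` and both converter names are made up for this example and have nothing to do with Apideck’s product.

```python
# One vendor-neutral definition, maintained in a single place (hypothetical).
NEUTRAL_DEFINITION = {
    "name": "get_stock_price",
    "description": "Return the latest price for a ticker symbol.",
    "parameters": {
        "type": "object",
        "properties": {"symbol": {"type": "string"}},
        "required": ["symbol"],
    },
}

def to_openai_tool(definition: dict) -> dict:
    """Wrap the definition in the nested 'function' shape assumed for OpenAI tools."""
    return {
        "type": "function",
        "function": {
            "name": definition["name"],
            "description": definition["description"],
            "parameters": definition["parameters"],
        },
    }

def to_anthropic_tool(definition: dict) -> dict:
    """Use the flat shape assumed for Anthropic tools, with 'input_schema' for parameters."""
    return {
        "name": definition["name"],
        "description": definition["description"],
        "input_schema": definition["parameters"],
    }

print(to_openai_tool(NEUTRAL_DEFINITION)["function"]["name"])
print(sorted(to_anthropic_tool(NEUTRAL_DEFINITION)))
```

The JSON Schema block is shared unchanged; only the envelope around it differs, which is why a small adapter per vendor is usually enough.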

The future isn’t just smarter chatbots. It’s systems that act. And function calling is the bridge.

What’s the difference between function calling and fine-tuning?

Fine-tuning changes how the model understands language by training it on new examples. Function calling doesn’t change the model at all. It just gives it access to external tools. You don’t need to retrain anything. You just define what functions are available and how to call them.

Can function calling work with any API?

Yes, as long as you can describe it in JSON Schema. Whether it’s a weather API, a CRM system, or a custom database query, if you can write a clear function definition with inputs and outputs, the model can call it. The challenge isn’t the API. It’s making sure the model understands exactly what to ask for.

Is function calling secure?

Not by default. The model can be tricked into calling malicious functions or injecting bad data. Always validate inputs on your end. Never let the model’s output run directly. Treat every function call like a user input: sanitize, filter, and check permissions before executing.
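
A minimal sketch of that gatekeeping step might look like the following. The `ALLOWED` permission table, the role names, the two function names, and the `order_id` pattern are all hypothetical; the point is only that the function name, the caller’s permissions, and each argument get checked before anything executes.

```python
import json
import re

# Hypothetical permission table: which roles may trigger which functions.
ALLOWED = {
    "get_order_history": {"customer", "agent"},
    "issue_refund": {"agent"},
}
# Assumed format for order IDs; anything else is rejected outright.
ORDER_ID_PATTERN = re.compile(r"[A-Z0-9]{6,12}")

def authorize(call_json: str, user_role: str) -> dict:
    """Return the parsed call if it passes every check, otherwise raise."""
    call = json.loads(call_json)
    name = call.get("name")
    if name not in ALLOWED:
        raise PermissionError(f"function not on the allowlist: {name}")
    if user_role not in ALLOWED[name]:
        raise PermissionError(f"role {user_role} may not call {name}")
    args = json.loads(call.get("arguments", "{}"))
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    order_id = args.get("order_id", "")
    if not isinstance(order_id, str) or not ORDER_ID_PATTERN.fullmatch(order_id):
        raise ValueError("order_id has an unexpected format")
    return {"name": name, "arguments": args}

safe_call = json.dumps({"name": "get_order_history", "arguments": json.dumps({"order_id": "AB1234"})})
print(authorize(safe_call, "customer"))
```

Only after `authorize` returns should the application look up and run the real function, using the parsed arguments it returned instead of the raw model output.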

Do I need a lot of data to use function calling?

No. Unlike fine-tuning, you don’t need thousands of labeled examples. You need clear function definitions and a few good prompts. Most teams get good results with just 5-10 well-crafted examples of how to use each function.

What if the external API is slow or down?

Your app needs to handle it. The model doesn’t know if the API failed. You must build timeouts, retries, and fallback messages. If the weather API is down, don’t say "I don’t know." Say, "I couldn’t get the weather right now, but it’s usually sunny in Asheville this time of year." That keeps the conversation going.
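
Here is one possible shape for that handling, as a sketch. `call_with_retries` and its defaults are assumptions for illustration, and the timeout itself is expected to be enforced inside the wrapped function (for example through the HTTP client’s own timeout setting), which then raises `TimeoutError` or `ConnectionError`.

```python
import time

def call_with_retries(func, attempts=3, base_delay=0.5,
                      fallback="I couldn't get the weather right now."):
    """Call func, retrying with exponential backoff, and return a fallback message on failure."""
    for attempt in range(attempts):
        try:
            return func()
        except (TimeoutError, ConnectionError):
            # Wait longer after each failure, but don't sleep after the last one.
            if attempt < attempts - 1:
                time.sleep(base_delay * 2 ** attempt)
    return fallback

def always_down():
    """Hypothetical weather call that simulates an outage."""
    raise ConnectionError("weather API unreachable")

# base_delay=0 keeps the demo instant; real code would keep a non-zero delay.
print(call_with_retries(always_down, base_delay=0))
```

The fallback string is what the application hands back to the model or the user, so the conversation continues instead of ending in silence.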

Chris Heffron

December 23, 2025 AT 22:23

Man, I tried function calling last week and spent 3 hours just fixing a missing comma in the JSON. 🤦‍♂️ Why can't it just guess what I meant like Claude does? Still, once it works, it's magic. My bot now books my dentist appointments. No more calling in.

Adrienne Temple

December 24, 2025 AT 01:12

Love how this breaks it down so clearly! 💡 I’m teaching my niece (14) how to use LLMs for her school project, and we used Qwen’s examples. She actually understood the error handling part. Kids today are gonna be so much better at this than we were. Just remember: if the API’s down, don’t leave them hanging. A little ‘I’m thinking’ goes a long way. 😊

Sandy Dog

December 25, 2025 AT 06:03

OK BUT DID YOU KNOW?? I once had a function call loop for 17 minutes because the model kept asking for the same weather data like it was a broken record?? 🤯 I thought my server was hacked. My laptop fan sounded like a jet engine. I screamed. My cat ran under the bed. I had to physically unplug the router. And then I realized I forgot to cap the retry count. 😭 People, set limits. Set. Limits. I’m not even mad, I’m just… emotionally scarred. Also, I now have a shrine to Claude 3.5 in my office. It’s a stuffed animal with a tiny ‘forgiving’ sign around its neck. It’s my spirit animal now.

Nick Rios

December 26, 2025 AT 23:12

Great breakdown. I’ve been on both sides: building these systems and trying to explain them to non-tech stakeholders. The biggest hurdle isn’t the code, it’s trust. People think the AI ‘knows’ things. It doesn’t. It’s just a very good translator between a fuzzy human request and a rigid machine API. If you treat it like a nervous intern who needs clear instructions and backup plans, it works. If you treat it like a genius, it fails spectacularly. And yes, validation matters. Always validate. Always.

Amanda Harkins

December 28, 2025 AT 21:19

It’s wild, right? We built this thing to mimic thought, but what we really built was a really fancy autocomplete for APIs. The model doesn’t understand weather or stock prices. It just knows the pattern of when to ask for them. It’s like giving a parrot a phone and teaching it to say ‘Can I get the current price of Tesla?’… and then acting like it’s a financial advisor. We’re outsourcing cognition to a statistical ghost. And yet… it works. Weird. Beautiful. A little terrifying. 🤔