Most people assume AI understands them the way another person does. But if you’re not a native English speaker, have a cognitive difference, or come from a culture where direct questions aren’t common, large language models often fail you: quietly, repeatedly, and without warning. The problem isn’t the AI. It’s the prompts. And if your prompts assume everyone thinks, speaks, and learns the same way, you’re leaving out nearly half the people who could benefit from these tools.

Why Standard Prompts Exclude So Many

Standard prompts are built for a myth: the ideal user. Fluent in English. Tech-savvy. Comfortable with abstract reasoning. Clear in their goals. But real users? They’re diverse. A grandmother in Mexico City trying to book a doctor’s appointment. A teenager in Lagos with limited internet. A veteran in Ohio with memory challenges. A student in Vietnam who reads English at a B1 level. These people aren’t broken. The prompts are.

Research from the University of Salford shows that 68.4% of users from marginalized groups give up on LLMs after just three failed attempts. Why? Prompts that demand precision they don’t have. Assumptions they can’t meet. Language they don’t fully grasp. The result? A digital divide hidden inside a chatbox.

Compare that to inclusive prompt design. When prompts are built with real human variation in mind, task completion rates jump by 37.2% for non-native speakers. Frustration drops by 28.6% for users with cognitive disabilities. That’s not a small tweak. That’s a transformation.

The IPEM Framework: How Inclusive Prompts Actually Work

The Inclusive Prompt Engineering Model (IPEM), a modular framework developed in 2024 by researchers at the University of Salford to make LLM interactions accessible across language, culture, and ability, is the first system designed from the ground up to handle this diversity. It doesn’t just add filters. It rebuilds how prompts interact with users.

IPEM has three core parts:

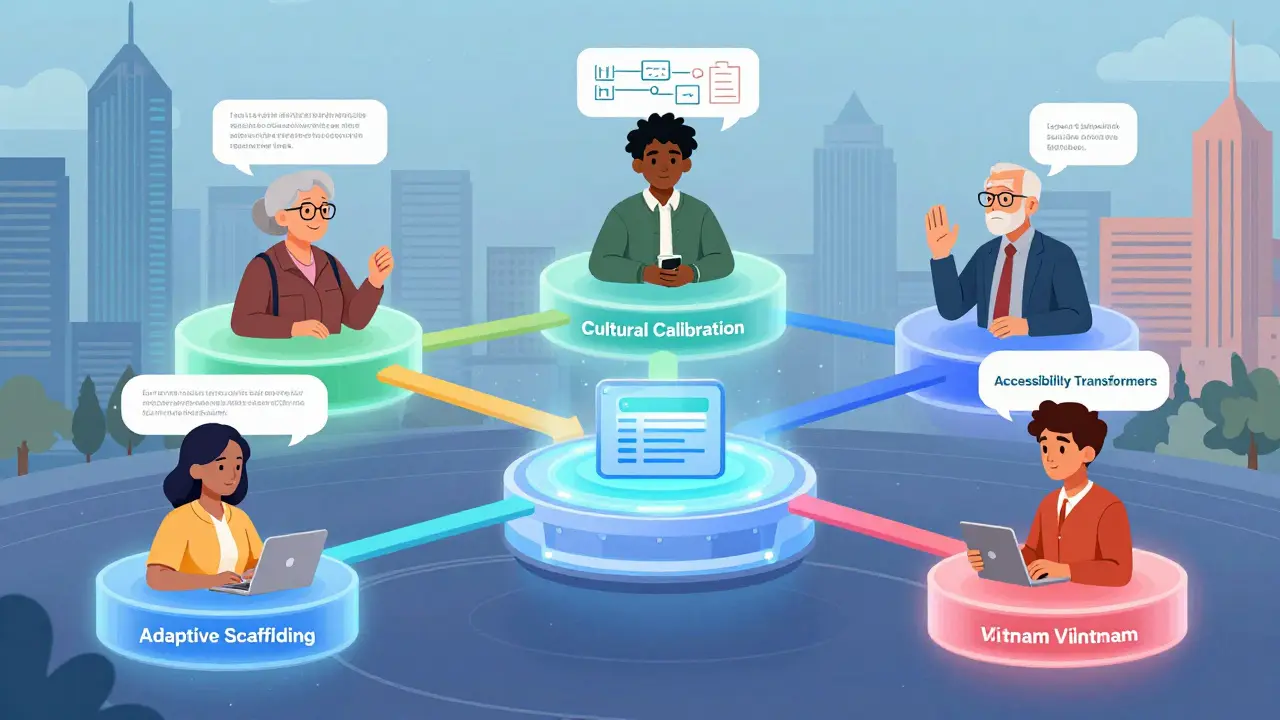

- Adaptive Scaffolding - The prompt changes complexity based on how the user responds. If they struggle with a long question, it breaks it down. If they answer simply, it doesn’t overcomplicate the next one.

- Cultural Calibration - It checks for cultural norms. In some cultures, asking directly for help is rude. In others, being vague is confusing. IPEM uses data from over 142 cultural dimensions to adjust tone, structure, and expectations.

- Accessibility Transformers - It can turn a text prompt into a visual flowchart, a simplified version in plain language, or even a voice-guided script, all from the same input.
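To make the Adaptive Scaffolding idea more concrete, here is a minimal Python sketch of one possible rule: show a multi-part question in full, but switch to asking one part at a time if the user’s reply suggests they are stuck. This is not IPEM’s code. The class name `ScaffoldedPrompt`, the struggle phrases, and the rule that an empty reply counts as a struggle are all hypothetical assumptions made for illustration.

```python
from dataclasses import dataclass

# Hypothetical phrases that suggest the user is stuck. A real system would need a
# validated, multilingual signal rather than this hard-coded English list.
STRUGGLE_MARKERS = ("don't understand", "what do you mean", "confused", "too long")


@dataclass
class ScaffoldedPrompt:
    """A multi-part question that can be shown whole or one part at a time."""

    parts: list[str]
    step_by_step: bool = False
    position: int = 0

    def observe_reply(self, reply: str) -> None:
        """Switch to one-part-at-a-time mode if the reply looks like a struggle."""
        # Normalize curly apostrophes so a typed "don’t" matches "don't".
        text = reply.strip().lower().replace("\u2019", "'")
        if not text or any(marker in text for marker in STRUGGLE_MARKERS):
            self.step_by_step = True

    def next_prompt(self) -> str:
        """Return the whole question, or only the next part in step-by-step mode."""
        if not self.step_by_step:
            return " ".join(self.parts)
        if self.position >= len(self.parts):
            return ""  # every part has already been asked
        self.position += 1
        return f"Step {self.position} of {len(self.parts)}: {self.parts[self.position - 1]}"


if __name__ == "__main__":
    prompt = ScaffoldedPrompt(parts=["What is your name?", "Which day suits you?"])
    print(prompt.next_prompt())  # both parts in one message
    prompt.observe_reply("Sorry, I don't understand")
    print(prompt.next_prompt())  # Step 1 of 2: What is your name?
```

The phrase list is deliberately crude; the point is only the shape of the loop: observe the reply, then decide how much to ask next.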

These aren’t optional extras. They’re built into the engine, and they work in real time. A 2026 IEEE study showed that for users with low literacy, response time dropped from 4.7 minutes to 2.1 minutes, and accuracy rose from 63.2% to 82.7%.

How It Compares to Other Approaches

You might have heard of tools like Google’s Prompting Guidelines or Anthropic’s Constitutional AI. They offer tips. But they don’t adapt. They’re static, like giving someone a map to a city they’ve never visited without telling them which streets are closed.

IPEM, on the other hand, is dynamic. It learns from interaction. In tests across 127 tasks, inclusive prompts outperformed standard ones by 22.8% for users with TOEFL scores under 80. For users over 65, the gain was 31.4%. Meanwhile, the Automatic Prompt Engineer (APE) framework, while clever, failed 35.6% more often with marginalized groups because it never asked: Who is this for?

And it’s not just about accuracy. It’s about dignity. A Reddit user shared how their 78-year-old grandmother, who spoke mostly Spanish, went from avoiding AI to using it daily after simplified prompts with visual cues were added. That’s not a feature. That’s a lifeline.

Real-World Impact Across Industries

Healthcare leads adoption. Why? Because the EU’s AI Act and U.S. Section 508 now require accessibility in digital services, including AI. Hospitals using IPEM-based prompts saw fewer missed appointments, clearer medication instructions, and fewer calls to support lines. One European hospital cut support tickets by 29%.

Education is next. Duolingo integrated inclusive prompts into its language app. The result? Non-native speaker engagement jumped 33.5%. Students weren’t just learning vocabulary; they were learning how to interact with AI safely and confidently.

Government agencies, especially in the U.S. and Europe, are moving fast: 47.2% of them now use inclusive prompt frameworks. Why? Because they serve everyone, not just the fluent, the young, or the tech-savvy.

Even financial services are catching on. Banks using these tools saw less customer confusion, fewer errors in form submissions, and higher satisfaction scores. One bank reported that, for the first time, users over 65 were completing online banking tasks at the same rate as younger users.

The Cost of Not Doing It

Some say inclusive design slows things down. It adds complexity. It costs more. But the cost of exclusion is higher.

Professor Kenji Tanaka from MIT calculated that standard prompts exclude 41.7% of potential users with disabilities. That’s a $1.2 trillion market, gone. Not because people don’t want to use AI, but because the AI wasn’t built for them.

And legally? The risk is growing. By 2027, failing to implement inclusive prompts could be seen as a violation of accessibility laws in the U.S., EU, and beyond. It’s no longer just ethics. It’s compliance.

Dr. Elena Rodriguez from Stanford put it bluntly: “Inclusive prompt engineering isn’t just ethical; it’s economically essential.” Her team found a 204% return on investment through wider reach and lower support costs.

Implementation Isn’t Perfect, But It’s Getting Better

Yes, there are challenges. Setting up IPEM takes time. Developers need Python skills and experience with NLP tools like spaCy. Initial setup can add 30-40% more work. Cultural calibration can drift over time. Regional dialects, especially from Nigeria, India, or Indigenous communities, still trip up the system.

But solutions are emerging. IPEM 2.0, released in January 2026, added real-time dialect adaptation using 87 regional English variants. Open-source communities on GitHub have built 1,243 examples to help others avoid common mistakes. The University of Washington’s Coursera course on inclusive prompting has trained over 42,000 people and cut implementation errors by 39%.

And support? There’s an active Discord server with 4,812 members. Weekly office hours from the University of Salford team. GitHub repos with tested templates. You’re not alone.

What’s Next?

Big tech is catching up. Google’s “Project Inclusive” is coming in Q3 2026, with tools to auto-simplify prompts for K-12 classrooms. Anthropic plans to bake IPEM principles into Claude 4 by May 2026. Microsoft launched its own Inclusive Prompt Designer in November 2025 and now holds 22% of the enterprise market.

The goal isn’t to make AI “nicer.” It’s to make it work for everyone.

By 2028, experts predict 92% of enterprise AI systems will include formal inclusive prompt frameworks. Just like responsive web design became standard, inclusive prompting will too. The question isn’t whether you’ll adopt it. It’s whether you’ll be ahead of the curve-or left behind by your own tools.

Where to Start Today

You don’t need to rebuild everything. Start small:

- Test your prompts with someone who speaks English as a second language. Do they understand the question?

- Try rewriting one prompt in plain language. Cut jargon. Use short sentences.

- Add a visual cue: “Here’s what we’re asking for: [icon or diagram].”

- Use the IPEM open-source templates on GitHub. They’re free, tested, and updated.

- Ask your users: “What made this hard?” Not “Did this work?”
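Part of the plain-language tip can be automated as a first pass. The sketch below is a rough heuristic, not a readability standard: it flags sentences longer than a chosen word limit and whole-word matches from a small jargon list. The 20-word limit, the `JARGON` mapping, and the function name `check_plain_language` are assumptions for illustration; a dedicated tool such as Hemingway Editor is far more thorough, and nothing replaces testing with real users.

```python
import re

# Assumed threshold; plain-language guides favor short sentences, but tune this with users.
MAX_SENTENCE_WORDS = 20

# Illustrative jargon list with plainer alternatives (an assumption, not a standard list).
JARGON = {
    "utilize": "use",
    "provide": "give",
    "detailed account": "short description",
    "prior to": "before",
}


def check_plain_language(prompt: str) -> list[str]:
    """Return human-readable warnings about long sentences and jargon in a prompt."""
    warnings = []
    # Split into sentences at ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", prompt.strip()) if s]
    for sentence in sentences:
        words = re.findall(r"[A-Za-z']+", sentence)
        if len(words) > MAX_SENTENCE_WORDS:
            warnings.append(f"Long sentence ({len(words)} words): {sentence[:40]}...")
    lowered = prompt.lower()
    for term, plain in JARGON.items():
        # Whole-word match, so "provide" does not also flag "provider".
        if re.search(rf"\b{re.escape(term)}\b", lowered):
            warnings.append(f'Consider replacing "{term}" with "{plain}".')
    return warnings


if __name__ == "__main__":
    for text in ("Please provide a detailed account of your symptoms.",
                 "What's hurting? Tell me in one sentence."):
        print(text, "->", check_plain_language(text))
```

Here the first prompt draws two suggestions ("provide" and "detailed account") and the second draws none, which matches the kind of rewrite the tip above recommends.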

Inclusive prompt design isn’t about lowering standards. It’s about raising access. It’s about recognizing that intelligence doesn’t look the same in every language, every culture, every mind. The future of AI isn’t just smarter. It’s fairer. And it starts with how you ask the question.

What exactly is inclusive prompt design?

Inclusive prompt design is the practice of creating prompts for large language models that work well for people of all backgrounds, abilities, languages, and cognitive styles. Instead of assuming everyone thinks and speaks the same way, it adapts to real human differences, such as limited English proficiency, neurodiversity, cultural communication norms, or low tech literacy, so that no one is locked out.

How is it different from regular prompt engineering?

Regular prompt engineering focuses on getting the best output from an AI, often using complex, precise language. Inclusive prompt design adds a layer: it asks, ‘Who is using this?’ and then adjusts the prompt to match their needs by simplifying language, adding visuals, changing tone for cultural context, or breaking tasks into steps. It’s not just about accuracy; it’s about accessibility.
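One way to act on ‘Who is using this?’ is to let users state their needs and turn those choices into instructions placed before the task. The sketch below is hypothetical: the `UserNeeds` fields and the instruction wording are assumptions, not IPEM’s or any vendor’s API. It deliberately uses preferences the user chooses, rather than traits inferred from age or background, which avoids the stereotyping risk discussed below.

```python
from dataclasses import dataclass


@dataclass
class UserNeeds:
    """Preferences the user states explicitly (hypothetical fields)."""

    plain_language: bool = False
    step_by_step: bool = False
    reply_language: str = "English"


def build_instructions(task: str, needs: UserNeeds) -> str:
    """Prepend instructions to a task based only on the user's stated needs."""
    lines = [f"Reply in {needs.reply_language}."]
    if needs.plain_language:
        lines.append("Use short sentences and everyday words. Avoid jargon.")
    if needs.step_by_step:
        lines.append("Break the answer into numbered steps, one action per step.")
    lines.append(f"Task: {task}")
    return "\n".join(lines)


if __name__ == "__main__":
    needs = UserNeeds(plain_language=True, reply_language="Spanish")
    print(build_instructions("Explain how to refill a prescription.", needs))
```

The same task text is reused for every user; only the instruction lines around it change, which keeps the adjustment transparent and easy to test.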

Does inclusive prompting reduce AI performance?

Not usually. In fact, studies show it improves accuracy and speed for many users. For low-literacy users, response accuracy jumped from 63.2% to 82.7%. However, in highly technical domains like legal or medical coding, gains are smaller (around 12.3%) because precision matters more than simplification. The key is balancing clarity with needed detail.

Can inclusive prompts reinforce stereotypes?

Yes, if they’re poorly designed. Oversimplifying cultural norms (for example, assuming all elderly users need slow speech, or all non-native speakers need basic vocabulary) can produce patronizing or inaccurate prompts. That’s why IPEM includes cultural calibration engines and requires regular validation. The goal isn’t to generalize, but to personalize responsibly.

Is this only for big companies?

No. Open-source versions of the Inclusive Prompt Engineering Model (IPEM) are free and used by educators, nonprofits, and individual developers. Many small teams start by testing one prompt with a diverse group of users and making small adjustments. You don’t need a big budget-just awareness and willingness to listen.

What tools can I use to build inclusive prompts?

The open-source IPEM framework on GitHub is the most comprehensive. Microsoft’s Inclusive Prompt Designer is a commercial option. Google and Anthropic are building similar tools into their next-gen models. For beginners, start with plain language checkers like Hemingway Editor, then try the IPEM template library. The University of Washington’s Coursera course offers free, step-by-step guidance.

How long does it take to implement inclusive prompts?

A basic version can be tested in a day. Full integration using IPEM’s three-part system takes 6-8 weeks on average, including assessment, configuration, and testing. Technical teams need Python and NLP experience. But many organizations report seeing real improvements in user satisfaction within two weeks of starting.

Are there legal requirements for inclusive prompts?

Yes. The EU’s AI Act and U.S. Section 508 now require accessibility in AI systems, including how prompts are designed. Failing to comply creates legal risk, especially in healthcare, education, and government services. By 2027, this is expected to be standard in procurement contracts across the U.S. and Europe.

Kieran Danagher

January 19, 2026 AT 16:42

Let me tell you something - most of these so-called 'inclusive prompts' are just corporate buzzword bingo dressed up as ethics. I've seen teams spend six months 'calibrating cultural norms' while their chatbot still can't understand 'Can you help me?' in Indian English. The real issue? Nobody actually talks to users. They just run surveys and call it inclusive. The IPEM framework? Cute. But it's still built by people who think 'B1 English' means someone can't say 'I need medicine.'

Veera Mavalwala

January 21, 2026 AT 02:10

Oh sweet mercy, here we go again with the ‘dignity’ narrative. You know what dignity looks like? It looks like my aunt in Jaipur, who got scammed by an AI voice bot that kept calling her ‘dear’ in a robotic British accent while trying to book a train ticket. She didn’t need a visual flowchart - she needed someone who spoke Hindi, knew the train schedule, and didn’t treat her like a confused child. This ‘IPEM’ thing sounds like tech bros trying to solve a problem they’ve never actually seen. They think ‘simplifying language’ means dumbing it down. No. It means respecting the intelligence of someone who just doesn’t speak your dialect. And no, I don’t want a diagram of a medication schedule. I want the damn app to stop mispronouncing ‘paracetamol’ as ‘pah-rah-seh-tah-mole.’

Patrick Sieber

January 22, 2026 AT 03:25

As someone who’s implemented IPEM in a small community health project in County Clare, I can say this: it works. Not perfectly, but better than anything else out there. We had a 72-year-old man who’d never used a smartphone before. We gave him a prompt that broke down his appointment booking into three steps, with a simple icon for ‘confirm’ and a voice option. He called us two weeks later to say he’d booked his first blood test in five years. That’s not magic. That’s thoughtful design. The cost? Maybe 20% more dev time. The ROI? A human being who no longer feels invisible. That’s worth every line of code.

Sheila Alston

January 23, 2026 AT 10:07

It’s not about accessibility. It’s about surrendering standards. Why should AI adapt to people who can’t form a coherent sentence? Why should we dumb down language for those who refuse to learn? This isn’t inclusion - it’s appeasement. We’re not here to coddle users who can’t handle basic English. If you can’t read a prompt, maybe you shouldn’t be using AI. This whole movement feels like a leftist social engineering project disguised as tech innovation. And now they want to make it law? Next they’ll force AI to speak in emojis so your grandma doesn’t get ‘confused.’

sampa Karjee

January 23, 2026 AT 12:46

Let’s be real - this is just another Western tech export wrapped in virtue signaling. The IPEM framework assumes everyone wants the same kind of interaction. But in India, we don’t always ask directly. We hint. We imply. We wait. You can’t ‘calibrate’ that with a 142-dimension matrix. And don’t get me started on ‘visual cues’ - half the rural users don’t even have smartphones with color screens. This isn’t innovation. It’s arrogance. The real solution? Train local developers to build their own tools, not import Silicon Valley’s paternalistic templates.

Ray Htoo

January 25, 2026 AT 04:11

Man, I’ve been testing this stuff for months now. I started with a simple rewrite: changed ‘Please provide a detailed account of your symptoms’ to ‘What’s hurting? Tell me in one sentence.’ For a non-native speaker? Huge difference. One guy from Nigeria replied, ‘My head hurts and my stomach feels heavy.’ The AI didn’t overcomplicate it - just asked, ‘Which hurts more?’ That’s it. No jargon. No pressure. And guess what? He got the right medical advice. IPEM isn’t about lowering the bar - it’s about moving the starting line so everyone can run. And yeah, it takes time. But so did making websites mobile-friendly. We didn’t quit then. Why quit now?

OONAGH Ffrench

January 27, 2026 AT 00:46

The real question isn’t whether prompts should be inclusive it’s whether we’re willing to stop pretending that intelligence is uniform

AI doesn’t need to understand every accent every culture every cognitive style it needs to understand that understanding is not a single path

What we’re seeing here is not a technical challenge but a philosophical one

The moment we assume that clarity equals simplicity we’ve already lost

True accessibility isn’t about making things easier it’s about making space for difference

And space requires silence not more instructions

Let the user lead not the prompt

Natasha Madison

January 27, 2026 AT 21:33

Just wait - this is how they start. First they make AI ‘inclusive,’ then they force you to use it. Next thing you know, your bank’s chatbot will refuse to help you unless you answer a 10-question cultural sensitivity quiz. This is the slippery slope to mandatory AI compliance. They’re not helping people. They’re controlling them. And don’t tell me it’s ‘ethical.’ Ethics is what you do when no one’s watching. This is about liability. This is about lawsuits. This is about big tech locking us into their version of ‘fair.’