Generative AI in enterprises keeps promising smarter decisions, faster responses, and automated workflows. But too often, it delivers hallucinations - confident, plausible-sounding lies that cost time, money, and sometimes lives. A pharmaceutical company’s AI suggests speeding up a chemical reaction beyond what’s physically possible. A bank’s chatbot recommends a loan structure that violates SEC rules. A hospital’s assistant recommends a treatment that contradicts FDA guidelines. These aren’t bugs. They’re symptoms of using general-purpose AI in high-stakes environments.

The fix isn’t better prompts. It’s not more data. It’s not even fine-tuning a model on your internal documents. The real solution is a domain-specific knowledge base - a structured, rule-driven layer that tells the AI what’s true, what’s allowed, and what’s absolutely off-limits in your industry.

Why General AI Fails in Enterprise Settings

Most enterprise AI today runs on models like GPT-4 or Claude, trained on public internet data. These models are brilliant at predicting the next word. But they have no memory of your company’s compliance rules, your factory’s safety limits, or your supply chain’s real-world constraints. They guess. And when they guess wrong in finance, healthcare, or manufacturing, the damage is real.

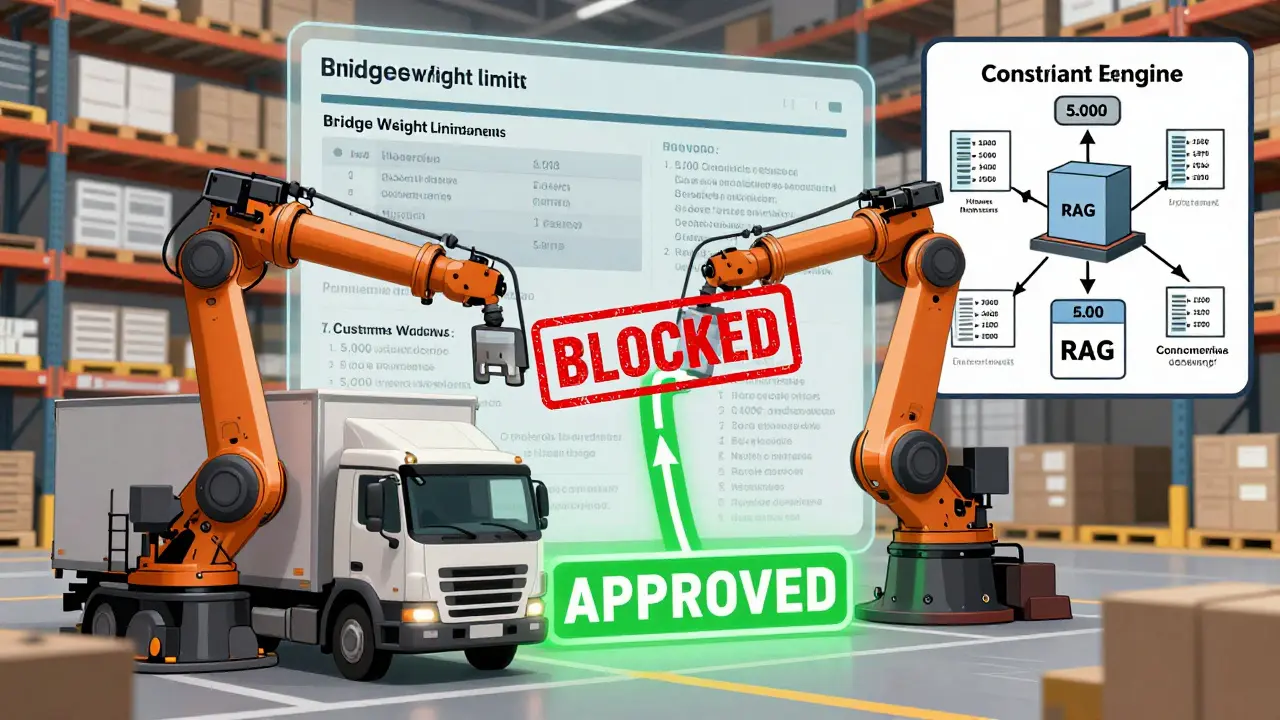

InfoQ reported that 78% of companies using general LLMs faced operational errors because of hallucinations. In one case, a logistics AI suggested shipping routes that ignored weight limits on bridges - leading to a $2.3 million delay. In another, a compliance bot approved transactions that violated anti-money laundering rules. These aren’t edge cases. They’re predictable failures of systems that lack grounding in domain reality.

How Domain-Specific Knowledge Bases Work

A domain-specific knowledge base isn’t just a document library. It’s a living, rule-based system that integrates directly into the AI’s reasoning process. Think of it like giving your AI a rulebook written by your best experts - and then forcing it to follow every rule, every time.

Here’s how it breaks down:

- Structured rules: Not just text, but encoded constraints - e.g., “Drug manufacturing step X cannot exceed 72°C” or “Loan approval requires dual-signature if amount > $500,000.”

- Ontologies and knowledge graphs: Relationships between entities - e.g., “Insulin is a Class B drug,” “FDA Regulation 21 CFR Part 11 governs electronic records.”

- Real-time retrieval: Using Retrieval-Augmented Generation (RAG), the AI pulls from your latest SOPs, regulatory updates, or equipment manuals before generating a response.

- Constraint enforcement: The AI doesn’t just retrieve info - it validates every draft response against your rules before returning it.
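To make the "structured rules" and "constraint enforcement" bullets concrete, here is a minimal Python sketch that encodes the two example rules as predicates over a proposed action and reports which ones it breaks. It illustrates the idea under stated assumptions and is not any vendor's format: the `Rule` class, the rule IDs, and field names such as `temperature_c` and `signatures` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable

@dataclass(frozen=True)
class Rule:
    rule_id: str  # hypothetical identifier scheme
    description: str
    # Returns True when the proposed action complies with the rule.
    check: Callable[[dict[str, Any]], bool]

RULES = [
    Rule(
        "MFG-TEMP-X",
        "Drug manufacturing step X cannot exceed 72°C",
        lambda a: a.get("step") != "X" or a.get("temperature_c", 0) <= 72,
    ),
    Rule(
        "LOAN-DUAL-SIG",
        "Loan approval requires dual-signature if amount > $500,000",
        lambda a: a.get("type") != "loan_approval"
        or a.get("amount", 0) <= 500_000
        or len(set(a.get("signatures", []))) >= 2,  # two distinct signers
    ),
]

def violations(action: dict[str, Any], rules: list[Rule] = RULES) -> list[str]:
    """Return the IDs of every rule the proposed action breaks."""
    return [r.rule_id for r in rules if not r.check(action)]
```

With these rules, `violations({"step": "X", "temperature_c": 80})` returns `["MFG-TEMP-X"]`, and a $750,000 loan approval carrying a single signature returns `["LOAN-DUAL-SIG"]`. One reason to keep rules as data rather than as prompt text is that domain experts can review and test them on their own.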

Unlike generic RAG, which only retrieves relevant snippets, domain-specific systems actively block outputs that violate business logic. They don’t say “maybe” - they say “no.”
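The difference between retrieval and enforcement fits in a few lines. The Python sketch below runs the same retrieval and generation twice, once returning the draft as-is (generic RAG) and once gating it on a rule check. It assumes the model's draft has already been parsed into structured fields; `demo_retrieve`, `demo_generate`, and `demo_check` are hypothetical stand-ins for a vector store, an LLM call, and a rule engine, not real library APIs.

```python
from typing import Any, Callable

# Assumption: the model's draft answer is parsed into structured fields.
Draft = dict[str, Any]
Retriever = Callable[[str], list[str]]
Generator = Callable[[str, list[str]], Draft]
Checker = Callable[[Draft], list[str]]  # returns IDs of violated rules

def rag_only(question: str, retrieve: Retriever, generate: Generator) -> Draft:
    """Generic RAG: retrieved context goes in, the draft comes out unchecked."""
    return generate(question, retrieve(question))

def rag_with_enforcement(question: str, retrieve: Retriever,
                         generate: Generator, check: Checker) -> Draft:
    """Same retrieval and generation, but the draft must pass the rules first."""
    draft = generate(question, retrieve(question))
    violated = check(draft)
    if violated:
        # Hard stop: return a refusal instead of the non-compliant draft.
        return {"status": "refused", "violated_rules": violated}
    return {"status": "ok", **draft}

# Hypothetical stand-ins for a vector store, an LLM call, and a rule engine.
def demo_retrieve(q: str) -> list[str]:
    return ["SOP-12: step X must not exceed 72°C"]

def demo_generate(q: str, context: list[str]) -> Draft:
    # A plausible-sounding but non-compliant suggestion, despite the context.
    return {"step": "X", "temperature_c": 85}

def demo_check(d: Draft) -> list[str]:
    too_hot = d.get("step") == "X" and d.get("temperature_c", 0) > 72
    return ["MFG-TEMP-X"] if too_hot else []
```

With these stubs, `rag_only` hands back the 85°C suggestion even though the retrieved SOP says otherwise, while `rag_with_enforcement` returns a refusal naming `MFG-TEMP-X`.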

Real Results: Numbers That Matter

Companies that switched from general LLMs to domain-specific systems didn’t just reduce errors - they transformed operations.

- Pharmaceuticals: A Fortune 500 company cut drug production scheduling errors from 22% to 4% by embedding FDA and GMP rules into their AI. The system now knows that certain reactions can’t be sped up - no matter how convincing the AI’s guess.

- Healthcare: A hospital system using medical ontologies improved diagnostic accuracy from 62% (GPT-4) to 89%. Mistakes like suggesting contraindicated drug combos dropped by 41%.

- Finance: A global bank reduced false positives in fraud detection by 68%. Their AI now understands SEC Rule 10b-5 and FINRA guidelines as hard constraints, not suggestions.

- Logistics: One firm slashed impossible route suggestions from 45% to just 3%. The AI now respects bridge weight limits, trucking regulations, and customs windows.
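The before/after rates above are easier to compare as relative reductions. This short Python calculation uses only the figures quoted in this section; for the hospital case it assumes error rate equals 100% minus accuracy, so 62% to 89% accuracy becomes a 38% to 11% error rate.

```python
def relative_reduction(before_pct: float, after_pct: float) -> float:
    """Share of the original error rate that was eliminated."""
    return (before_pct - after_pct) / before_pct

# (before, after) error rates in percent, as quoted in the bullets above.
CASES = {
    "Pharma scheduling errors, 22% to 4%": (22, 4),
    "Impossible route suggestions, 45% to 3%": (45, 3),
    # Derived: accuracy 62% -> 89% means error rate 38% -> 11%.
    "Diagnostic error rate, 38% to 11%": (38, 11),
}

for label, (before, after) in CASES.items():
    print(f"{label}: {relative_reduction(before, after):.0%} fewer errors")
```

It prints about 82%, 93%, and 71% fewer errors, respectively.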

According to OpenArc’s 2024 benchmark, domain-specific systems delivered 2.3x better accuracy at 37% of the computational cost. They don’t need trillions of tokens - just 5,000 well-curated documents.

What’s Different About This Approach?

Most companies think they’re doing domain-specific AI by uploading PDFs to a chatbot. They’re not. That’s just RAG with no enforcement. True domain-specific systems do three things:

- Embed hard constraints: Some rules are non-negotiable. The AI must refuse to generate content that violates them.

- Optimize within boundaries: It can suggest cost-saving options - but only if they comply with safety, legal, and operational limits.

- Validate before output: Every response goes through a rule-checking engine before being delivered.
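"Optimize within boundaries" amounts to filtering on hard constraints first and optimizing only over what survives. Here is a minimal Python sketch using the bridge-weight example, assuming each candidate route is summarized by its cost and the lowest bridge weight limit along it; the `Route` type, its fields, and the sample routes are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Route:
    name: str
    cost_usd: float
    min_bridge_limit_t: float  # lowest bridge weight limit along the route, tonnes

def cheapest_compliant(routes: list[Route], truck_weight_t: float) -> Optional[Route]:
    """Minimize cost, but only over routes that satisfy the hard weight constraint."""
    feasible = [r for r in routes if truck_weight_t <= r.min_bridge_limit_t]
    if not feasible:
        return None  # refuse instead of proposing an impossible route
    return min(feasible, key=lambda r: r.cost_usd)

# Hypothetical sample data.
ROUTES = [Route("A", 900, 20), Route("B", 1200, 44), Route("C", 1500, 60)]
```

A 40 t truck gets route B even though A is cheaper, because A crosses a 20 t bridge. A 70 t truck gets `None`: an explicit refusal rather than an impossible suggestion.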

This is why AWS Bedrock’s October 2025 update - which added constraint enforcement - reduced hallucinations by 74% in pilots. It’s not about better context. It’s about better control.

Who Benefits Most?

Not every industry needs this. But if your business is governed by rules, regulations, or physical limits - you’re a perfect candidate.

- Healthcare: FDA, HIPAA, clinical guidelines - one hallucination can kill.

- Finance: SEC, FINRA, AML, Basel III - non-compliance means fines or jail.

- Manufacturing: OSHA, ISO standards, equipment specs - mistakes cause shutdowns.

- Pharmaceuticals: GMP, clinical trial protocols, drug interactions - precision is life-or-death.

- Energy & Utilities: NERC, EPA, grid safety rules - errors risk blackouts.

Gartner found that 63% of financial institutions and 58% of healthcare organizations have already deployed domain-specific knowledge bases. Retail and marketing? Not so much. The difference? Risk tolerance.

The Hidden Cost: Knowledge Engineering

There’s no magic button. Building a domain-specific knowledge base takes work.

According to Gartner, each implementation requires 200-500 hours of collaboration between data scientists and domain experts. You need:

- 3-5 experts who know your rules inside out (compliance officers, engineers, pharmacists).

- 2-3 data scientists to structure the rules into machine-readable formats.

- 1-2 ML engineers to integrate the system with your AI pipeline.

One manufacturing executive on Capterra said it took six months to encode their facility’s safety constraints. But the investment paid for itself within four months - because they stopped losing production time to AI-generated errors.

Biggest challenge? Fragmented knowledge. IBM found 68% of enterprises had rules scattered across Word docs, SharePoint, emails, and legacy systems. Cleaning that up is half the battle.

Limitations and Criticisms

Is this perfect? No.

Domain-specific systems struggle with novel situations. A supply chain disruption no one has seen before? The AI might freeze or give a wrong answer because it has no rule for it. In one case, a manufacturing system’s performance degraded by 32% in unprecedented crisis scenarios.
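One way to keep a missing rule from turning into either a freeze or a silent wrong answer is to make "no applicable rule" an explicit outcome that routes the case to a human reviewer. The Python sketch below assumes rules are looked up by a hypothetical action `kind`; it is one possible design, not a feature of any product named in this article.

```python
from enum import Enum
from typing import Any, Callable

class Verdict(Enum):
    ALLOW = "allow"
    BLOCK = "block"
    ESCALATE = "escalate"  # no rule covers this case: hand it to a human

# Hypothetical rule table: action kind -> predicate returning True if compliant.
RuleTable = dict[str, Callable[[dict[str, Any]], bool]]

def judge(action: dict[str, Any], rules: RuleTable) -> Verdict:
    rule = rules.get(action.get("kind", ""))
    if rule is None:
        # Unprecedented situation: don't guess, and don't silently stall.
        return Verdict.ESCALATE
    return Verdict.ALLOW if rule(action) else Verdict.BLOCK

RULES: RuleTable = {"shipment": lambda a: a["weight_t"] <= 40}
```

With this single shipment rule, a 30 t shipment is allowed, a 50 t shipment is blocked, and an unseen `port_closure_reroute` request is escalated instead of guessed at.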

Dr. Emily Bender of the University of Washington warns that over-specialization creates brittle systems. “You trade adaptability for accuracy,” she said in her 2023 ACM keynote. “And in fast-changing markets, that can backfire.”

But here’s the counterpoint: General AI doesn’t adapt - it hallucinates. And hallucinations in enterprise settings aren’t just annoying. They’re dangerous.

Recent and upcoming updates aim to address this. Microsoft’s January 2026 update lets Copilot Studio auto-validate against ontologies. AWS and others are building dynamic constraint adaptation - where the system learns from feedback loops. Cross-domain knowledge transfer is expected by 2027. The goal isn’t to lock the AI in a cage - it’s to give it a compass.

The Bottom Line: Accuracy Over Creativity

Generative AI in enterprise isn’t about writing poetry or brainstorming slogans. It’s about making decisions that affect compliance, safety, and revenue. In that context, creativity is a liability.

Domain-specific knowledge bases shift the goal from “generate something interesting” to “generate something correct.” And that’s a game-changer.

Gartner predicts that by 2028, 78% of new enterprise AI projects will use this approach. The market, projected to hit $41.2 billion by 2027, is growing 38.7% annually - more than double the pace of general-purpose AI.

The companies winning aren’t the ones with the fanciest LLMs. They’re the ones who stopped asking AI to guess - and started teaching it to know.

What’s the difference between a domain-specific knowledge base and RAG?

RAG retrieves relevant documents and feeds them to the AI. The AI still generates responses based on patterns - and can still hallucinate. A domain-specific knowledge base doesn’t just retrieve - it enforces. It checks every output against hard rules and blocks anything that violates them. RAG gives context. Domain-specific systems give control.

Do I need to train my own model to use a domain-specific knowledge base?

No. Most enterprises use existing LLMs (like those in AWS Bedrock or Microsoft Copilot Studio) and layer their domain rules on top. You’re not retraining the model - you’re adding guardrails. This keeps costs low and speeds up deployment.

How long does it take to implement a domain-specific knowledge base?

Most implementations take 3-6 months. The first 6-8 weeks focus on gathering and structuring rules. Another 4-6 weeks go to integrating and testing the system. ROI typically shows up in 4-9 months. The upfront effort is high, but the cost of AI errors is higher.

Can a domain-specific knowledge base handle unstructured data like emails or Slack messages?

Not directly. Domain-specific systems rely on structured rules and ontologies. Unstructured data like Slack chats can be used as input to identify new rules - but those rules must be formally documented and encoded before the AI can use them. The system doesn’t learn from chatter - it learns from codified policy.
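The line between chatter and codified policy can be enforced in the rule store itself. In this minimal Python sketch, rules surfaced from emails or Slack enter as candidates and only become enforceable once a named expert approves them; `RuleRegistry`, `Status`, and the example rule are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

class Status(Enum):
    CANDIDATE = "candidate"  # surfaced from unstructured sources, not yet policy
    APPROVED = "approved"    # documented and signed off by a domain expert

@dataclass
class RuleRecord:
    rule_id: str
    text: str
    source: str
    status: Status = Status.CANDIDATE
    approved_by: Optional[str] = None

class RuleRegistry:
    def __init__(self) -> None:
        self._rules: dict[str, RuleRecord] = {}

    def propose(self, rule_id: str, text: str, source: str) -> None:
        """Record a candidate rule; it is NOT enforced yet."""
        self._rules[rule_id] = RuleRecord(rule_id, text, source)

    def approve(self, rule_id: str, expert: str) -> None:
        """Promote a candidate to policy and record who approved it."""
        record = self._rules[rule_id]
        record.status = Status.APPROVED
        record.approved_by = expert

    def enforceable(self) -> list[RuleRecord]:
        """Only approved rules are handed to the enforcement layer."""
        return [r for r in self._rules.values() if r.status is Status.APPROVED]
```

A candidate such as "Bridge 7 weight limit is 18 t" pulled from an ops channel is ignored by enforcement until `approve()` is called, which also records who signed it off for later audits.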

Which cloud providers offer built-in support for domain-specific knowledge bases?

AWS (Bedrock Knowledge Bases), Microsoft (Copilot Studio), and Google Cloud (Vertex AI with constraint enforcement) all now offer native tools. AWS launched constraint enforcement in October 2025. Microsoft updated its integration in January 2026. These tools let you upload your rules, link them to your LLM, and activate enforcement with a toggle.

Is this only for large enterprises?

No. While most deployments are in large organizations due to complexity, mid-sized companies in regulated industries - like regional hospitals, insurance agencies, or specialty manufacturers - are adopting it too. The key isn’t size - it’s risk. If a hallucination could cost you a fine, a shutdown, or a life, you need this.

Eka Prabha

February 9, 2026 at 06:34

This is all just corporate vaporware dressed up as innovation. You think encoding rules into AI will stop hallucinations? What about the rules that were never written down? The ones buried in old emails, whispered in meetings, or forgotten by HR after a merger? The system doesn’t know what it doesn’t have - and that’s where the real damage happens. I’ve seen this before. They call it ‘guardrails’ - I call it a false sense of security. The AI will still find loopholes. It always does. And when it does, someone’s job will be on the line - not the vendor’s.

And don’t get me started on AWS and Microsoft ‘native tools’. Those aren’t solutions - they’re lock-in traps. You think you’re controlling the AI? You’re just paying more to let them control you. The real power isn’t in the knowledge base - it’s in the people who built it. And if they leave? The whole system crumbles. Welcome to the new technical debt.

Bharat Patel

February 10, 2026 at 09:55

I love how this post frames AI as a student who needs a rulebook. But isn’t that the whole problem? We’re treating intelligence like obedience. Real understanding doesn’t come from enforced limits - it comes from context, experience, and sometimes, even mistakes. Maybe the AI suggesting that impossible reaction isn’t wrong - maybe it’s seeing something we’ve forgotten. The real danger isn’t hallucination. It’s rigidity. We’re building AI to be perfect, but perfection is the enemy of adaptation.

Think of it like driving. A car with no speed limit might crash. But a car with a single fixed speed limit can’t navigate traffic, weather, or emergencies. We need flexibility - not just rules. Maybe the answer isn’t ‘no’ - but ‘why not?’

Bhagyashri Zokarkar

February 11, 2026 at 14:02

Rakesh Dorwal

February 12, 2026 at 02:24

Let me tell you something - this whole ‘domain-specific knowledge base’ idea is just Western tech companies trying to outsource their failure. They built AI on internet garbage, now they want us to pay for Indian engineers to manually encode every single regulation? And they call it ‘innovation’? In India, we’ve been doing this for decades - human experts, paper checklists, and common sense. No AI needed. Now they want to make us pay for a glorified autocomplete? This isn’t progress - it’s colonialism with a cloud API.

And don’t even get me started on AWS. You think they care about your compliance? They care about your subscription fee. That ‘constraint enforcement’ toggle? It’s an upsell. The real solution is local expertise - not corporate SaaS.

Rajat Patil

February 13, 2026 at 12:40

I appreciate the depth of this article. It’s rare to see a discussion that doesn’t just hype AI, but actually acknowledges its limits. The emphasis on enforcement over retrieval is crucial. Too often, we confuse information with understanding. A knowledge base that says ‘no’ when it should is not a restriction - it’s a responsibility.

I work in a regional hospital, and we’ve seen what happens when AI guesses. We don’t need more creativity. We need certainty. Even if it’s slow. Even if it’s tedious. Safety isn’t optional. This isn’t about technology - it’s about ethics. And for that, I’m grateful this approach is gaining traction.

deepak srinivasa

February 15, 2026 at 08:17

Interesting point about novel situations. What happens when a new regulation comes out? Or a new drug is approved? Or a bridge gets rebuilt with different specs? Does the AI just freeze until someone re-encodes the rules? That sounds like a bottleneck. Is there a feedback loop where the system can flag potential gaps - not just enforce what’s already there? Maybe the knowledge base should be semi-autonomous - not just a static rulebook, but a living archive that invites human input? Like a wiki with gates?

Also - how often do these systems get audited? Who checks if the rules themselves are outdated? Because if the rulebook is wrong, the AI is just a very polite liar.

NIKHIL TRIPATHI

February 16, 2026 at 10:19

I’ve been implementing this at my company - logistics, not pharma - and honestly? The ROI was insane. We cut route errors from 40% to under 5% in 3 months. But here’s the thing no one talks about: the hardest part wasn’t the tech. It was getting the engineers and the ops team to stop arguing.

The ops guys had 17 different PDFs with conflicting weight limits. The engineers wanted to automate everything. We spent six weeks just merging documents. No AI. Just meetings. Coffee. Whiteboards. And one guy who remembered the 2018 memo about the old bridge in Gujarat.

So yeah - the system works. But the real magic? It’s the people who care enough to sit down and map it all out. The tech is just the envelope. The content? That’s all human.

Shivani Vaidya

February 16, 2026 at 20:36

Thank you for this comprehensive breakdown. I particularly appreciate the distinction between RAG and constraint enforcement. Many organizations confuse the two, leading to dangerous overconfidence in their AI deployments.

However, I would like to add one nuance: while domain-specific knowledge bases are critical for high-risk environments, they must be designed with humility. The rules are not absolute truths - they are current best practices, subject to revision. Therefore, the system should not only enforce, but also log deviations, flag inconsistencies, and trigger human review - not as a fallback, but as a continuous improvement loop.

True resilience lies not in rigidity, but in structured adaptability. The goal is not to eliminate all uncertainty - but to ensure that uncertainty is acknowledged, documented, and addressed by those who understand context. This is not just technical architecture - it is organizational wisdom encoded.