Enterprise vibe coding isn’t science fiction. It’s what’s happening in engineering teams right now - quietly, efficiently, and with growing urgency. By January 2026, 90% of companies with engineering teams have already brought AI into their day-to-day coding workflows. But here’s the catch: most of them didn’t just plug in GitHub Copilot and call it a day. They built guardrails. They rewired their toolchains. They learned how to make AI work inside their legacy ERP systems, their compliance-heavy environments, and their tightly controlled CI/CD pipelines. This isn’t about replacing developers. It’s about redefining what development looks like when AI doesn’t just suggest lines of code - it builds entire modules, fixes its own bugs, and connects directly to Salesforce or SAP without glue code.

What Enterprise Vibe Coding Actually Means

Vibe coding, as defined by Superblocks and adopted by ServiceNow and Salesforce, is AI-assisted development with enterprise-grade control. It’s not autocomplete. It’s not copy-pasting Stack Overflow answers generated by a chatbot. It’s when an engineer types, “Build a dashboard that pulls live inventory data from SAP and flags low-stock items over 48 hours,” and the AI generates a working React frontend, a secure API endpoint, a database schema, and automated alerts - all in under ten minutes. And then it self-tests, documents itself, and flags security risks before anyone even reviews it.

The difference between consumer AI tools and enterprise vibe coding is the guardrails. Consumer tools give you speed. Enterprise systems give you speed without chaos. They enforce coding standards. They block access to sensitive data. They log every change. They roll back if something breaks. ServiceNow’s January 2026 update added human-in-the-loop previews, rollback options, and automated compliance checks that scan for PCI-DSS or HIPAA violations before code even leaves the IDE.

How It Fits Into Existing Toolchains

You can’t just drop an AI agent into a 10-year-old Jenkins pipeline and expect it to work. Enterprise vibe coding requires layers:

- AI-enabled IDEs - Cursor, Windsurf, or Copilot in VSCode - handle individual developer tasks like writing functions, generating tests, or refactoring legacy code.

- Orchestration layers - These coordinate multiple AI agents. One agent writes the code, another writes the test, a third checks for security flaws, and a fourth updates the documentation. All run in sequence, with human approval points built in.

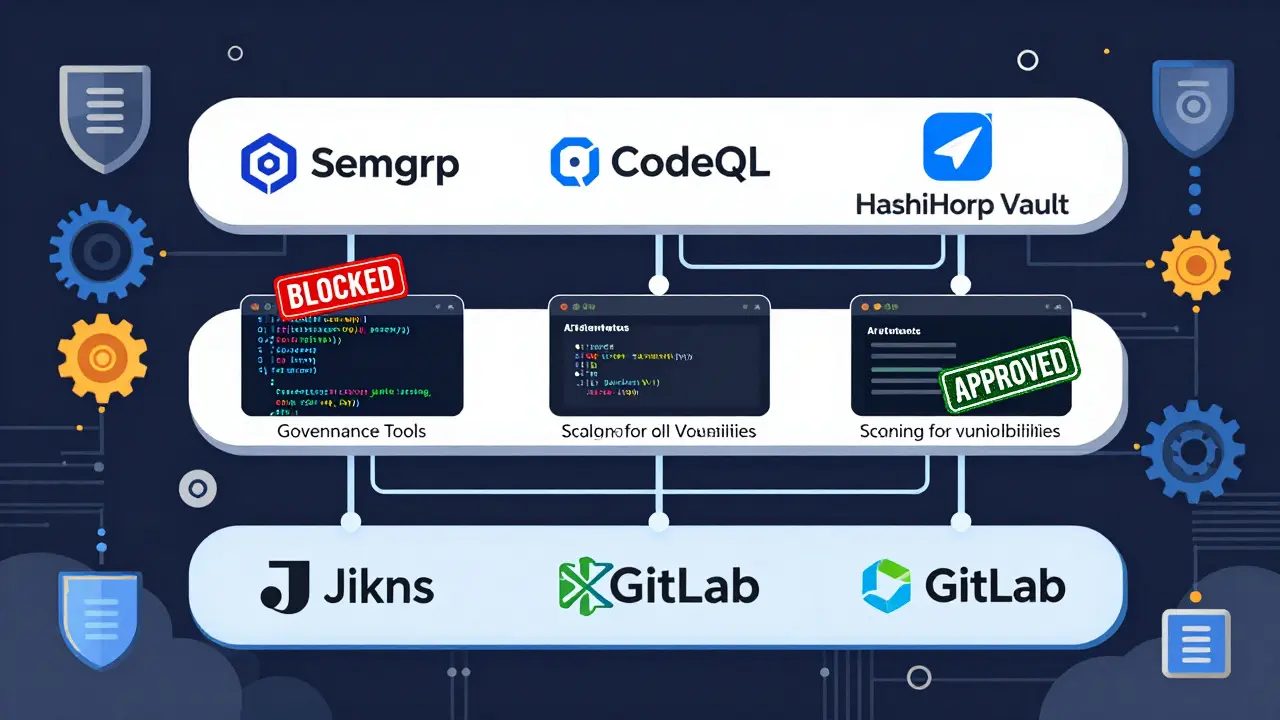

- Governance middleware - This is the enforcement layer. Tools like Semgrep and CodeQL scan every line of AI-generated code. HashiCorp Vault manages secrets. Access controls ensure AI agents can’t touch customer PII unless explicitly permitted.
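As a rough illustration of the orchestration layer described above, here is a minimal Python sketch of agents running in sequence with human approval points. Everything in it is hypothetical: `Artifact`, `make_stage`, `run_pipeline`, and the choice of which stages require sign-off are assumptions made for the example, not any vendor's API. The placeholder stages only record that they ran; a real stage would call an AI agent.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Artifact:
    """Work product handed from one agent stage to the next."""
    prompt: str
    notes: List[str] = field(default_factory=list)


# A stage is any callable that takes the artifact and returns it updated.
Stage = Callable[[Artifact], Artifact]


def make_stage(name: str) -> Stage:
    """Build a placeholder stage (hypothetical); a real one would call an agent."""
    def run(artifact: Artifact) -> Artifact:
        artifact.notes.append(f"{name}: done")
        return artifact
    return run


def run_pipeline(
    artifact: Artifact,
    stages: List[Tuple[str, Stage, bool]],
    approve: Callable[[str, Artifact], bool],
) -> Artifact:
    """Run stages in order; stop at the first approval point a reviewer rejects."""
    for name, stage, needs_approval in stages:
        artifact = stage(artifact)
        if needs_approval and not approve(name, artifact):
            artifact.notes.append(f"{name}: rejected by reviewer")
            break
    return artifact


# Assumed layout: code and tests run unattended, security and docs need sign-off.
STAGES = [
    ("code", make_stage("code"), False),
    ("tests", make_stage("tests"), False),
    ("security", make_stage("security"), True),
    ("docs", make_stage("docs"), True),
]

if __name__ == "__main__":
    # Simulate a reviewer who rejects the security stage.
    result = run_pipeline(
        Artifact("Build an inventory dashboard"),
        STAGES,
        approve=lambda name, artifact: name != "security",
    )
    print(result.notes)
```

The design choice worth noting is that the approval callback sits in the pipeline itself rather than in any single agent, so a rejected stage stops everything after it.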

Where It Shines (And Where It Fails)

Vibe coding doesn’t replace everything. It excels in three areas:

- Internal tools - Building dashboards, approval workflows, or reporting tools that used to take weeks now take days. Salesforce reports 73% faster deployment cycles for these kinds of apps.

- Legacy modernization - Migrating COBOL systems or outdated Java apps? AI can map old data structures to new APIs, rewrite UIs, and even suggest refactoring patterns based on similar codebases.

- Workflow automation - ServiceNow’s internal metrics show 92% fewer errors in AI-generated automation scripts than manually written ones.

Skills You Need Now (That You Didn’t Five Years Ago)

If you’re still hiring for “full-stack developers” who only know JavaScript and React, you’re behind. The new profile includes:

- Prompt engineering - Not just “write code,” but “write a secure, scalable, documented API endpoint that connects to our internal auth system and returns only active users.”

- AI testing - You can’t just test the output. You need to test the AI’s behavior. Does it hallucinate? Does it reuse old credentials? Does it ignore your company’s coding standards?

- Orchestration - Understanding how multiple AI agents interact, how to chain them, and when to insert human review.

- Cloud-native infrastructure - AI tools run in containers. They need secrets management. They need monitoring. You still need to know how to deploy them.
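To make the AI testing skill above more concrete, here is a small Python sketch that checks an agent's behavior rather than a single output: it calls the agent several times with the same prompt and flags any run that embeds a credential in the generated code. The regex, `find_hardcoded_secrets`, `audit_agent`, and the `generate` callable are all assumptions for illustration; a real setup would lean on a dedicated scanner and your own agent client.

```python
import re
from typing import Callable, Dict, List

# Assumed heuristic: a secret-looking name assigned a quoted literal.
SECRET_ASSIGNMENT = re.compile(
    r"(?i)(password|passwd|secret|api_key|token)\w*\s*=\s*['\"][^'\"]+['\"]"
)


def find_hardcoded_secrets(code: str) -> List[str]:
    """Return one finding per line that assigns a literal to a secret-like name."""
    hits = []
    for lineno, line in enumerate(code.splitlines(), start=1):
        if SECRET_ASSIGNMENT.search(line):
            hits.append(f"line {lineno}: {line.strip()}")
    return hits


def audit_agent(
    generate: Callable[[str], str], prompt: str, runs: int = 5
) -> Dict[int, List[str]]:
    """Call the (hypothetical) agent repeatedly; map run index to its findings."""
    failures: Dict[int, List[str]] = {}
    for i in range(runs):
        hits = find_hardcoded_secrets(generate(prompt))
        if hits:
            failures[i] = hits
    return failures


if __name__ == "__main__":
    # Stand-in for an agent that always leaks a credential.
    leaky = lambda prompt: 'db_password = "hunter2"\nconnect(db_password)'
    print(audit_agent(leaky, "Write a DB connection helper", runs=2))
```

Running the same prompt more than once matters because model output can vary between runs; a single clean result says little about how the agent behaves in general.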

Security Isn’t Optional - It’s Built In

A lot of fear around vibe coding comes from security. And rightly so. But the best enterprise implementations don’t rely on humans to catch mistakes. They bake security into the system:

- AI agents can’t access production databases unless explicitly granted permission - and even then, only through encrypted, rotated secrets managed by HashiCorp Vault.

- All generated code is scanned by Semgrep and CodeQL before merging. No exceptions.

- For highly regulated industries (finance, healthcare), AI-generated code must be self-documenting and explainable. Every function has a comment explaining why it was written, what data it touches, and which policy it follows.

- Some companies run local AI models on-premises so no code leaves the firewall.
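Two of the rules above, deny-by-default access and "no merge without a clean scan", can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how Vault, Semgrep, or CodeQL are actually wired in: `AgentPolicy`, `can_access`, `can_merge`, and the agent and resource names are hypothetical, and the scan results are assumed to arrive as a mapping from scanner name to a list of findings.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class AgentPolicy:
    """Resources an agent has been explicitly granted (empty by default)."""
    allowed_resources: FrozenSet[str] = frozenset()


def can_access(policies: Dict[str, AgentPolicy], agent: str, resource: str) -> bool:
    """Deny by default: unknown agents and ungranted resources are refused."""
    policy = policies.get(agent)
    return policy is not None and resource in policy.allowed_resources


def can_merge(scan_results: Dict[str, List[str]], required: List[str]) -> bool:
    """Allow a merge only if every required scanner ran and reported no findings."""
    return all(
        name in scan_results and not scan_results[name] for name in required
    )


if __name__ == "__main__":
    # Hypothetical agent and resource names.
    policies = {"reporting-agent": AgentPolicy(frozenset({"inventory_db"}))}
    print(can_access(policies, "reporting-agent", "inventory_db"))
    print(can_access(policies, "reporting-agent", "customer_pii"))
    print(can_merge({"semgrep": [], "codeql": []}, ["semgrep", "codeql"]))
```

Note that `can_merge` treats a scanner that never ran the same as one that found a problem, which is what "no exceptions" implies.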

What’s Next? Smarter Guardrails, Deeper Integration

The next phase isn’t about more AI. It’s about better AI. ServiceNow’s January 2026 update introduced “adaptive guardrails” - systems that learn from past mistakes. If an AI keeps generating code that triggers a specific security rule, the system learns to block that pattern before it’s even written.

Google Cloud’s partnership with Replit, announced in February 2026, is another sign. They’re embedding Gemini 3 directly into Replit’s design mode, so developers can build UIs with natural language and get production-ready backend code in real time - all hosted on Google Cloud with built-in compliance.

The future isn’t “AI writes all the code.” It’s “AI handles the repetitive, error-prone, boilerplate-heavy parts - so humans can focus on architecture, ethics, and innovation.”

Getting Started Without Chaos

If you’re considering vibe coding, here’s how to avoid the common traps:

- Start with AI-enabled IDEs - Give your team Cursor or Copilot for VSCode. Let them use it for internal tools first.

- Define a scope - Pick one type of task: dashboard updates, API endpoints, or test generation. Don’t try to automate everything.

- Build your guardrails - Set up Semgrep, CodeQL, and access controls before you scale.

- Train on prompt engineering - Don’t assume your devs know how to talk to AI. Run workshops.

- Measure before you scale - Track time saved, error rates, and security incidents. If numbers improve, expand. If not, pause.
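The last step can be reduced to a simple comparison between a baseline and the pilot. The Python sketch below is one possible reading of "if numbers improve, expand": the `PilotMetrics` fields, the `should_expand` rule, and the sample figures are all assumptions for illustration, and a real decision would use your own thresholds and data.

```python
from dataclasses import dataclass


@dataclass
class PilotMetrics:
    """The three measures named above, collected over the same period."""
    avg_hours_per_task: float
    error_rate: float  # fraction of tasks with a defect
    security_incidents: int


def should_expand(baseline: PilotMetrics, pilot: PilotMetrics) -> bool:
    """Assumed rule: time must improve, and errors and incidents must not get worse."""
    return (
        pilot.avg_hours_per_task < baseline.avg_hours_per_task
        and pilot.error_rate <= baseline.error_rate
        and pilot.security_incidents <= baseline.security_incidents
    )


if __name__ == "__main__":
    # Illustrative numbers only, not measurements.
    baseline = PilotMetrics(avg_hours_per_task=12.0, error_rate=0.08, security_incidents=1)
    pilot = PilotMetrics(avg_hours_per_task=5.0, error_rate=0.06, security_incidents=1)
    print("expand" if should_expand(baseline, pilot) else "pause")
```

Requiring all three conditions keeps a large time saving from masking a rise in errors or incidents.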

Is vibe coding the same as low-code platforms?

No. Low-code platforms let users drag and drop components with limited customization. Vibe coding lets developers describe goals in natural language and get fully customizable, production-ready code. It’s not about avoiding code - it’s about writing less of it, faster, and with better guardrails.

Can AI-generated code pass audits?

Yes - if it’s built with compliance in mind. Leading platforms like ServiceNow and Salesforce now generate code that includes automated documentation, test coverage reports, and security annotations. Regulators are starting to accept AI-generated code as long as it’s traceable, testable, and follows documented standards.

Do we still need senior engineers with vibe coding?

More than ever. Senior engineers now act as AI coaches. They design guardrails, review agent behavior, fix hallucinations, and ensure AI aligns with business goals. The role isn’t disappearing - it’s evolving from code writer to system architect and AI supervisor.

What’s the biggest risk of vibe coding?

The biggest risk isn’t security - it’s skill erosion. If developers stop learning how to write code from scratch, they lose the ability to debug complex issues or design scalable systems. The goal isn’t to replace coding skills - it’s to augment them.

Which tools are best for enterprise vibe coding?

For individual productivity: GitHub Copilot, Cursor, or Windsurf. For enterprise integration: ServiceNow’s AI Platform and Salesforce’s Agentforce Builder. For cloud-native deployments: Replit with Google Cloud’s Gemini 3. The right tool depends on your existing stack and governance needs.

Nathan Pena

January 23, 2026 AT 03:14

Let’s be real - if your ‘vibe coding’ requires a compliance middleware layer thicker than your corporate HR policy, you’re not innovating. You’re just outsourcing your technical debt to a bot that can’t even spell ‘PCI-DSS’ without a 12-step audit trail. This isn’t engineering. It’s corporate theater dressed up in LLM glitter.

Mike Marciniak

January 23, 2026 AT 11:55

They’re not building guardrails. They’re building surveillance states. Every line of code logged, every prompt tracked, every ‘self-test’ monitored by some AI auditor who doesn’t know what a null pointer is. This isn’t progress - it’s the death of creativity in software. They’re turning engineers into code janitors with security badges.

VIRENDER KAUL

January 23, 2026 AT 22:04

Enterprise vibe coding is not a trend it is a paradigm shift in software development lifecycle management. The integration of AI within legacy systems demands rigorous governance frameworks and structured orchestration protocols. Without formalized access controls and compliance scanning mechanisms the entire architecture collapses under its own weight. The data is clear: unregulated AI code generation leads to exponential technical debt. Organizations that fail to adopt semgrep codeql and vault integration will be left behind in the digital evolution. This is not speculation it is operational reality.

Mbuyiselwa Cindi

January 25, 2026 AT 08:55

I’ve seen teams go from zero to shipping internal tools in days using Copilot + guardrails. It’s not magic, but it’s close. The key is starting small - one dashboard, one API endpoint. Don’t try to automate your whole HR system on day one. And please, train your devs on how to prompt properly. ‘Make it work’ doesn’t cut it. ‘Make it secure, documented, and scoped to active users only’ does. You’ll thank yourself later.

Krzysztof Lasocki

January 27, 2026 AT 04:22

So let me get this straight - we’re paying engineers to babysit AI that writes code better than they ever could… but only if it passes 17 compliance checks and has a 37-page audit trail? Cool. Cool cool cool. Meanwhile, the guy who wrote the original COBOL system in 1987 is still on call because the AI kept trying to ‘optimize’ his 40-year-old payroll logic into a TikTok dance. We’re not evolving. We’re just adding more bureaucracy to the chaos.

Henry Kelley

January 28, 2026 AT 07:38

Honestly? I’m all for it. I used to spend weeks building internal dashboards. Now I say ‘build me a report that shows inventory dips over 48hrs from SAP’ and boom - it’s done. Yeah, the AI messed up the auth once, but we fixed the guardrails. No need to be a hero and write everything from scratch. Let the bot do the boring stuff. I’ll focus on making sure it doesn’t break anything. That’s my job now.

Victoria Kingsbury

January 29, 2026 AT 00:57

Let’s not romanticize this. AI-generated code is still code - and if you’re not running Semgrep, CodeQL, and Vault on every commit, you’re just gambling with your production environment. The ‘vibe’ is real, but so are the 89 security flaws in that HR system the Reddit guy mentioned. We’re not replacing devs - we’re replacing the *idiot* devs who thought they could ship untested AI output. The new skill? Knowing when to say ‘no’ to the bot.

Tonya Trottman

January 29, 2026 AT 12:24

Oh wow. So now we’re calling it ‘vibe coding’ like it’s some kind of yoga session for developers? You mean ‘AI hallucinates, we patch it, then we call it ‘enterprise-grade’ because we slapped a compliance layer on it like duct tape on a nuclear reactor?’ Please. The only thing ‘adaptive’ here is the corporate buzzword generator. And don’t even get me started on ‘prompt engineering’ - that’s just ‘learning how to beg a robot for code’ with a fancy title. We used to call it ‘debugging.’ Now we call it ‘orchestrating agents.’ Same thing. Just more PowerPoint.

Rocky Wyatt

January 31, 2026 AT 11:11

You people are missing the point. The real danger isn’t security flaws or compliance - it’s that we’re raising a generation of devs who can’t write a for loop without asking an AI. When the AI goes down, or the vendor changes their API, or the model gets banned - what’s left? A room full of people who don’t know how to code. That’s not progress. That’s a slow-motion collapse. And you’re all just cheering while the foundation burns.