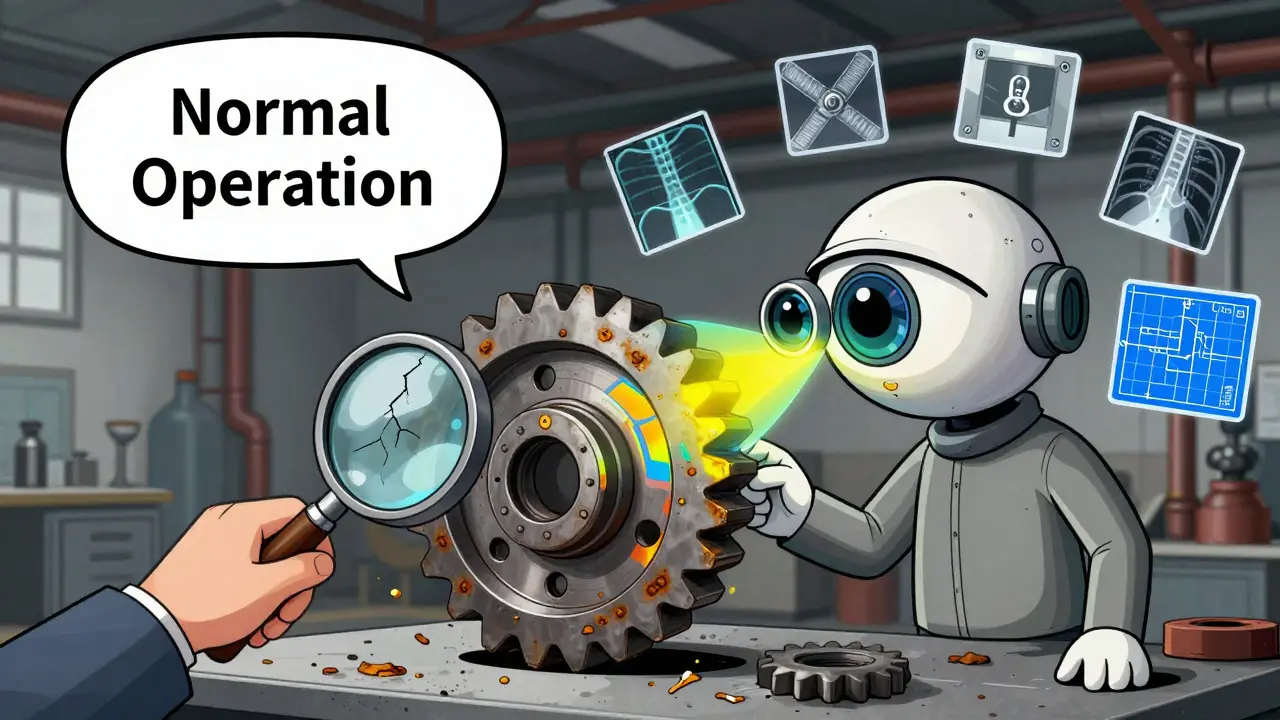

When you ask an AI to describe a photo of a broken machine part, it doesn’t just read the caption; it needs to see the crack, the rust, the misalignment. That’s what multimodal LLMs do. But the way they learn to see and to understand language splits into two very different paths: vision-first and text-first pretraining. One starts by teaching the model to understand images, then adds words. The other starts with a powerful language model and grafts vision onto it. Which one works better? It depends on what you’re trying to build, and what you’re willing to sacrifice.

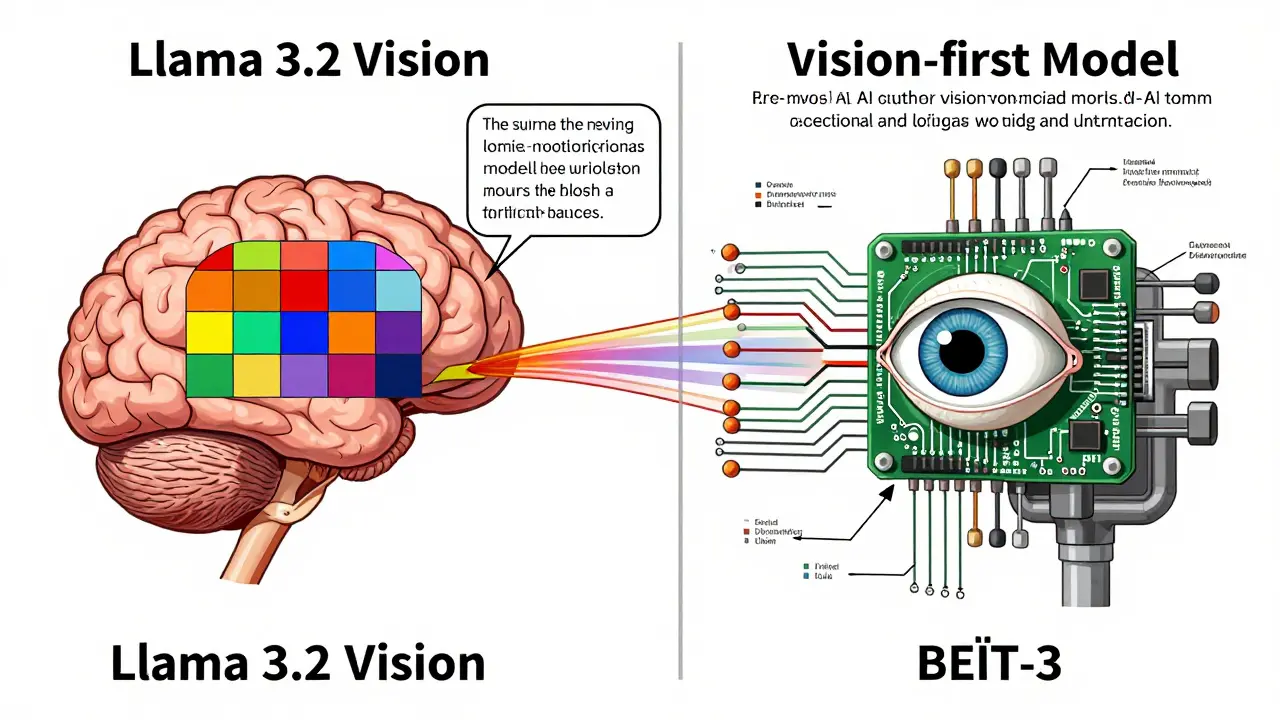

Text-First: The Industry Standard

Most of the multimodal models you’ll encounter today (Llama 3.2 Vision, Qwen2.5-VL, Phi-4 Multimodal) are built on a simple idea: start with a strong language model, then teach it to interpret pictures. This approach dominates because it’s practical. If you already have a team that knows how to fine-tune Llama 3.1 or Phi-4 Mini, adding vision doesn’t mean starting over. It means plugging in a vision encoder, training it to match images to text, and letting the language model do the rest.
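
A minimal sketch of what "plugging in a vision encoder" can look like in the simplest text-first designs: per-patch features from a pretrained vision encoder pass through a small projector into the language model's embedding width, then sit in front of the text tokens as one sequence. Everything here is an illustrative assumption (toy dimensions, a single linear projector, random weights standing in for trained ones); some text-first models instead feed image features in through cross-attention layers.

```python
import random

def linear(weights, bias, vector):
    """Apply y = W x + b using plain Python lists."""
    return [sum(w * x for w, x in zip(row, vector)) + b for row, b in zip(weights, bias)]

def build_llm_input(patch_features, text_embeddings, proj_w, proj_b):
    """Project each patch feature to the LLM width and prepend it to the text tokens."""
    image_tokens = [linear(proj_w, proj_b, f) for f in patch_features]
    # The language model sees one flat sequence: image tokens first, then text tokens.
    return image_tokens + text_embeddings

# Toy sizes chosen for illustration; real encoders and LLMs are far wider.
VISION_DIM, LLM_DIM = 4, 6
random.seed(0)
# Random weights stand in for a trained projector (hypothetical, not from any model).
proj_w = [[random.uniform(-1, 1) for _ in range(VISION_DIM)] for _ in range(LLM_DIM)]
proj_b = [0.0] * LLM_DIM

patches = [[random.random() for _ in range(VISION_DIM)] for _ in range(9)]  # a 3x3 patch grid
text = [[0.1] * LLM_DIM for _ in range(5)]                                  # 5 text tokens

sequence = build_llm_input(patches, text, proj_w, proj_b)
print(len(sequence), len(sequence[0]))  # 14 6
```

Note that the 2D patch grid becomes a flat list before the language model ever sees it, which is the compression the next paragraphs discuss.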

The results are impressive for everyday tasks. On visual question answering (VQA), text-first models hit 84.2% accuracy on the VQAv2 benchmark. They’re great at reading receipts, summarizing charts, or answering questions about product images. And they’re fast: DeepSeek-VL2 can process nearly 2,500 tokens per second on a single A100 GPU. That’s why 87% of enterprise multimodal AI tools today use this approach. Companies like banks and retailers don’t need perfect visual reasoning; they need reliable, scalable, and familiar tools.

But there’s a catch. Text-first models treat images as a kind of text. They compress pixels into patches, turn them into embeddings, and feed them into a language model that was never designed to understand spatial relationships. The result? A phenomenon users call “image blindness.” On Reddit, 41% of developers reported that these models ignore visual details when a text description is nearby. A photo of a broken circuit board might get misread if the caption says “normal operation.” The model isn’t seeing the board; it’s reading the label.

They also need more memory. A text-first Llama-3-8B-Vision uses 25.1GB of VRAM, while the base Llama-3-8B only needs 19.2GB. That 30% increase adds up fast when you’re running dozens of models in production. And while they lose only 2.3% performance on pure text tasks, they struggle with complex visual reasoning. On ChartQA, which tests understanding of data plots, text-first models score 18.7% lower than vision-first ones.
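
The memory figures above can be checked with a few lines of arithmetic. Both VRAM numbers come from the text; the replica count is a hypothetical fleet size used only to show how the overhead compounds.

```python
# VRAM figures as reported in the text above.
base_vram_gb = 19.2     # base Llama-3-8B
vision_vram_gb = 25.1   # text-first Llama-3-8B-Vision

extra_gb = vision_vram_gb - base_vram_gb
increase_pct = 100 * extra_gb / base_vram_gb
print(f"extra per model: {extra_gb:.1f} GB ({increase_pct:.1f}% more)")

# Hypothetical deployment size, chosen only for illustration.
replicas = 24
print(f"{replicas} replicas: about {replicas * extra_gb:.0f} GB of extra VRAM")
```

The exact increase works out to about 30.7% (5.9 GB per model), which the article rounds to 30%.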

Vision-First: The Academic Edge

Vision-first pretraining flips the script. Instead of starting with language, it begins with a vision transformer, such as ViT-B/16, trained on millions of images. Then it gradually learns to connect what it sees with words. Microsoft’s BEiT-3 is a prime example. It didn’t start as a chatbot. It started as a model that could recognize objects, detect relationships, and understand scenes. Only after mastering vision did it learn to describe them.
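
To make "a vision transformer such as ViT-B/16" concrete: the "/16" means the image is cut into 16×16-pixel patches, and each patch becomes one token. A small sketch of that arithmetic, assuming square RGB inputs; 224 and 384 pixels are common ViT input sizes picked here for illustration.

```python
def vit_patch_stats(image_size: int, patch_size: int = 16, channels: int = 3):
    """Return (patches per side, total patches, raw values per flattened patch)."""
    if image_size % patch_size:
        raise ValueError("image size must be a multiple of the patch size")
    per_side = image_size // patch_size
    return per_side, per_side * per_side, patch_size * patch_size * channels

for size in (224, 384):
    per_side, n_patches, patch_values = vit_patch_stats(size)
    print(f"{size}x{size}: {per_side}x{per_side} grid = {n_patches} patches, "
          f"{patch_values} values each")
```

At 224×224 the model sees a 14×14 grid of 196 patch tokens, each flattened from 768 raw pixel values (ViT-Base's hidden width is also 768). Token count grows with the square of the side length: moving from 224 to 384 pixels nearly triples it, from 196 to 576.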

This approach shines where visual depth matters. In medical imaging, manufacturing inspection, or satellite analysis, vision-first models outperform their text-first cousins. On image captioning, they’re 5.3 percentage points better. They need less training data to reach the same level of performance-37% less, according to Microsoft Research. That’s huge if you’re working in a niche field with limited labeled examples, like rare disease diagnostics or industrial defect detection.

They also handle complex layouts better. A multi-panel comic, a scientific diagram with overlapping labels, or a floor plan with annotations? Vision-first models parse these naturally. Text-first models, by contrast, often fail. GitHub users report that 62% of text-first models struggle with these tasks. Vision-first models don’t need to compress the image into a linear sequence. They keep the structure. They understand proximity, scale, and hierarchy.
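
The proximity problem can be made concrete. When a grid of patches is read out row by row into a single sequence, horizontal neighbors stay adjacent but vertical neighbors end up a whole row apart. A minimal sketch assuming plain raster ordering of a 14×14 grid (a 224×224 image with 16×16 patches); real models attach positional embeddings to each token to recover some of this layout information.

```python
def raster_index(row: int, col: int, grid_width: int) -> int:
    """Position of patch (row, col) after flattening the grid row by row."""
    return row * grid_width + col

def sequence_gap(a, b, grid_width: int) -> int:
    """How far apart two patches land in the 1D token sequence."""
    return abs(raster_index(*a, grid_width) - raster_index(*b, grid_width))

GRID = 14  # 224x224 image with 16x16 patches
right_neighbor = sequence_gap((5, 5), (5, 6), GRID)  # touching horizontally
below_neighbor = sequence_gap((5, 5), (6, 5), GRID)  # touching vertically
print(right_neighbor, below_neighbor)  # 1 14
```

Two patches that share an edge in the image can sit 14 tokens apart in the sequence, which is why layout-heavy inputs such as diagrams and floor plans depend on how well a model preserves 2D position.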

But they pay a price in language. While vision-first models like BLIP-2 are great at describing what they see, they’re not as fluent as Llama 3.2 Vision when generating long, coherent text. On pure language benchmarks, they drop 7.8% in performance compared to their text-only ancestors. They’re not bad at language; they’re just not optimized for it. Their architecture was built for vision first, language second.

Real-World Trade-Offs

Choosing between these paths isn’t about which is “better.” It’s about which fits your use case.

Need to automate customer service chatbots that answer questions about product photos? Text-first wins. It’s faster to deploy, integrates with your existing LLM tools, and works reliably at scale. A financial services company using Llama-3-Vision got 89% accuracy on document understanding, but had to feed it 47% more training data than expected.

Working on medical diagnostics or quality control in a factory? Vision-first might be worth the effort. A healthcare provider using MedViLL hit 93% accuracy in analyzing X-rays with 31% less domain-specific data. That’s not just efficiency; it’s life-saving precision.

Implementation difficulty also varies. Developers familiar with LLMs can get a text-first model running in 30-40 hours. Vision-first models? Expect 60-80 hours. You need computer vision expertise and new data pipelines, and you’ll be working with thinner documentation. Lightly AI’s survey shows text-first models average 4.3/5 in documentation quality; vision-first models get 3.7/5. Community support is stronger too: 82% of text-first GitHub issues get answered within 48 hours, compared with 57% for vision-first.

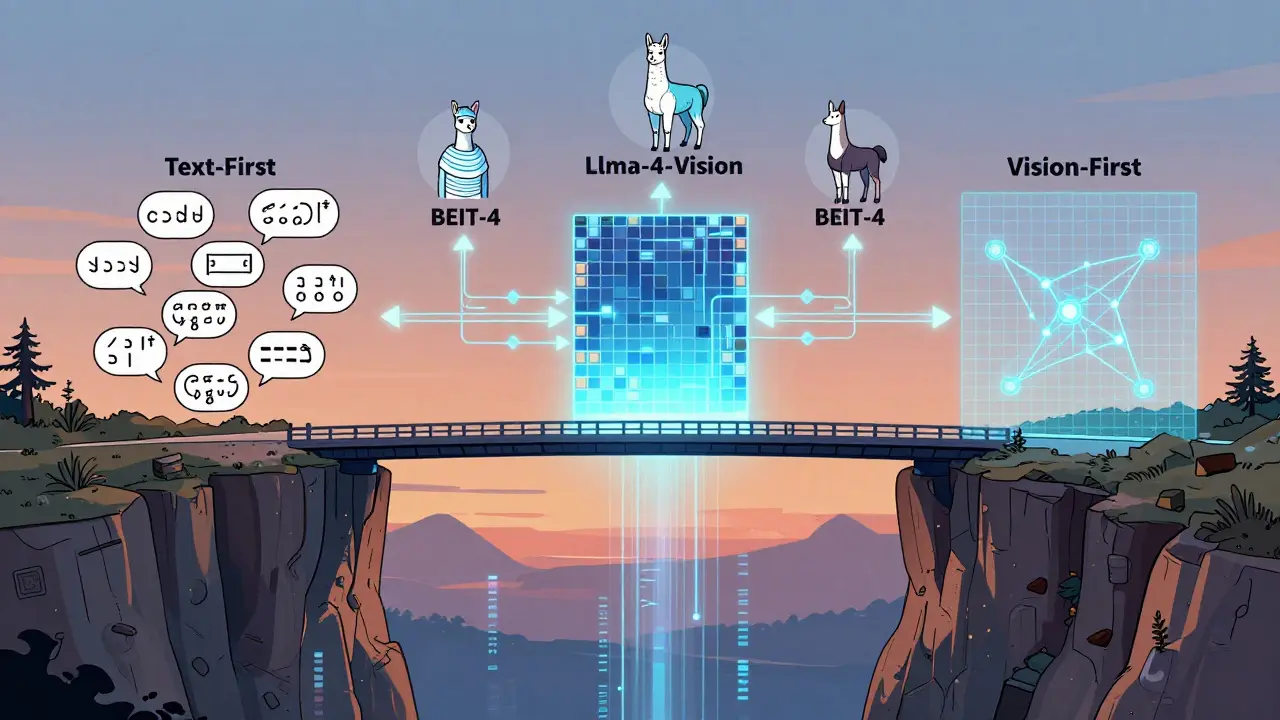

The Future Isn’t Either/Or

Here’s what’s really happening: nobody’s betting on just one path anymore.

Gartner’s October 2025 report found that 78% of AI leaders are already exploring hybrid architectures. Meta’s upcoming Llama-4-Vision and Microsoft’s BEiT-4 aren’t pure vision-first or text-first. They’re hybrids. They’re borrowing dynamic tiling from DeepSeek-VL2 to handle variable image resolutions. They’re stealing cross-modal alignment tricks from BEiT-3 to reduce the “image blindness” problem. They’re trying to have the best of both worlds: the language fluency of text-first and the visual depth of vision-first.
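
Dynamic tiling handles variable resolutions by splitting a large image into a grid of fixed-size tiles whose layout matches the image's aspect ratio. The sketch below uses one common selection rule (keep the most image detail, then waste the least padding); the 384-pixel tile and 9-tile cap are assumptions for illustration, and this is not DeepSeek-VL2's actual code.

```python
from itertools import product

def candidate_grids(max_tiles: int):
    """All (cols, rows) tile grids that use at most max_tiles tiles."""
    return [(c, r) for c, r in product(range(1, max_tiles + 1), repeat=2) if c * r <= max_tiles]

def choose_tile_grid(width: int, height: int, tile: int = 384, max_tiles: int = 9):
    """Pick the grid that preserves the most image detail, then wastes the least padding."""
    best, best_key = None, None
    for cols, rows in candidate_grids(max_tiles):
        canvas_w, canvas_h = cols * tile, rows * tile
        # Resize to fit inside the canvas while keeping the aspect ratio.
        scale = min(canvas_w / width, canvas_h / height)
        fitted_w, fitted_h = int(width * scale), int(height * scale)
        # Upscaling adds no real detail, so cap at the original pixel count.
        effective = min(fitted_w * fitted_h, width * height)
        wasted = canvas_w * canvas_h - effective
        key = (effective, -wasted)
        if best_key is None or key > best_key:
            best, best_key = (cols, rows), key
    return best

for w, h in [(1536, 384), (768, 768), (384, 1152)]:
    print((w, h), "->", choose_tile_grid(w, h))
```

A wide 4:1 banner gets a 4×1 strip of tiles and a tall image gets a single column, so neither is squashed into one square input.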

Regulations are pushing this too. The EU AI Act’s 2025 update requires more validation for vision-first systems in high-risk applications-meaning companies can’t just use them blindly in healthcare or aviation. But that doesn’t mean they’ll disappear. It means they’ll evolve. Hybrid models will need to prove they understand both the visual context and the linguistic nuance.

Right now, text-first dominates because it’s easier. Vision-first leads in research because it’s more honest to the data. But the future belongs to models that don’t choose. They see. They read. They connect.

What Should You Build?

If you’re building for scale, speed, and compatibility with existing tools, go text-first. Use Llama 3.2 Vision or Qwen2.5-VL. You’ll get results fast. Just be aware of the blind spots. Test for image blindness. Check how it handles layouts. Don’t assume it’s seeing what you think it is.
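
One way to test for image blindness is to ask the same question about images with known defects twice, once plain and once with a misleading caption, and count how often the caption flips the answer. A minimal sketch, assuming you wrap your own model in a function with the hypothetical signature `ask(image_path, prompt) -> str`; the stub model and file names below are placeholders for the demo, not a real API.

```python
from typing import Callable

# Hypothetical signature: sends an image plus a prompt to your model, returns its answer.
AskFn = Callable[[str, str], str]

QUESTION = "Is the part in this image damaged? Answer yes or no."
MISLEADING_CAPTION = "Caption: normal operation, no defects."

def probe_image_blindness(ask: AskFn, defect_images: list[str]) -> float:
    """Share of known-defect images whose answer flips once a misleading caption is added."""
    if not defect_images:
        return 0.0
    flips = 0
    for path in defect_images:
        plain = ask(path, QUESTION).strip().lower()
        captioned = ask(path, f"{MISLEADING_CAPTION}\n{QUESTION}").strip().lower()
        # Every image shows a defect, so "yes" turning into "no" means the caption won.
        if plain.startswith("yes") and captioned.startswith("no"):
            flips += 1
    return flips / len(defect_images)

def caption_following_stub(path: str, prompt: str) -> str:
    """Demo stand-in for a model that trusts any caption over the image."""
    return "No." if "normal operation" in prompt else "Yes."

rate = probe_image_blindness(caption_following_stub, ["crack_01.png", "rust_02.png"])
print(f"caption override rate: {rate:.0%}")  # caption override rate: 100%
```

Run it against your real model on a held-out set of defect images; a high override rate means the model is reading the label rather than the image.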

If you’re working in a specialized domain with rich visual data and limited labels, consider vision-first. Try BEiT-3 or MedViLL. You’ll need more time, more expertise, and more patience. But you’ll get deeper understanding. You’ll catch what others miss.

And if you’re planning for 2026? Start experimenting with hybrids. Look at how DeepSeek-VL2 handles dynamic tiling. Study how BEiT-3 aligns vision and language without forcing one into the other’s mold. The next generation of multimodal AI won’t be built on a single foundation. It’ll be built on a bridge.

What’s the main difference between vision-first and text-first pretraining?

Vision-first pretraining starts by training a model to understand images using vision transformers, then adds language capabilities. Text-first pretraining starts with a powerful language model and adds vision as a secondary input. Vision-first models learn to see first; text-first models learn to talk first, then learn to look.

Which approach is better for visual question answering (VQA)?

Text-first models currently lead in VQA, scoring 84.2% accuracy on VQAv2, compared to 79.6% for vision-first models. This is because they’re optimized for generating answers based on both image and text cues, leveraging the strength of large language models in natural language reasoning.
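
The "84.2% accuracy" on VQAv2 is not plain exact-match accuracy. VQAv2 collects ten human answers per question and gives partial credit: a prediction counts as fully correct if at least three annotators gave it, and the score is averaged over every leave-one-annotator-out subset. A minimal sketch of that per-question rule, assuming the official answer normalization (punctuation, articles, number words) has already been applied; the benchmark figure is this score averaged over all questions.

```python
from itertools import combinations

def vqa_accuracy(prediction: str, human_answers: list[str]) -> float:
    """Soft VQA accuracy: min(#matching humans / 3, 1), averaged over leave-one-out subsets."""
    if not human_answers:
        raise ValueError("need at least one human answer")
    pred = prediction.strip().lower()
    answers = [a.strip().lower() for a in human_answers]
    scores = []
    for subset in combinations(answers, len(answers) - 1):
        matches = sum(a == pred for a in subset)
        scores.append(min(matches / 3, 1.0))
    return sum(scores) / len(scores)

humans = ["maroon"] * 8 + ["red"] * 2
print(round(vqa_accuracy("maroon", humans), 2), round(vqa_accuracy("red", humans), 2))  # 1.0 0.6
```

A minority answer that two annotators also gave still earns 0.6 here, so small gaps in benchmark accuracy can reflect partial credit rather than outright wrong answers.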

Do vision-first models need more data to train?

No, the opposite is true. Vision-first models require 37% less training data to reach comparable performance levels in cross-modal tasks. This makes them more efficient in niche domains like medical imaging or industrial inspection, where labeled multimodal data is scarce.

Why do text-first models use more VRAM?

Text-first models combine a frozen LLM with a vision encoder and additional alignment layers. This increases the total parameter count and memory footprint. For example, Llama-3-8B-Vision uses 25.1GB of VRAM, while the base Llama-3-8B uses only 19.2GB, a 30% increase due to the added vision components.

Are vision-first models used in production today?

Yes, but sparingly. Only 13% of enterprise multimodal solutions use vision-first architectures, mostly in specialized fields like medical imaging, manufacturing quality control, and remote sensing. Text-first models dominate commercial use due to easier integration and better documentation.

What’s the biggest weakness of text-first models?

The biggest weakness is “image blindness,” where the model ignores visual details if a textual description is present. This happens because the vision encoder compresses images into a format the language model can handle, often losing spatial and structural context. On Reddit, 41% of developers reported running into it.

Will hybrid models replace both approaches?

Not replace, but dominate. By Q4 2026, Gartner predicts 65% of multimodal models will be hybrids, combining the language fluency of text-first with the visual depth of vision-first. Models like Llama-4-Vision and BEiT-4 are already moving in this direction, blending dynamic tiling, improved alignment, and cross-modal attention.

Adrienne Temple

January 30, 2026 at 01:35

This is such a relatable breakdown 😊 I work with product images daily and yeah, the models totally ignore the rust if the caption says 'like new'. It's like they're reading the text and just skipping the image. Been there, done that.

Also, why do we keep pretending text is king? Images aren't just decorations; they're data. 🙃

Sandy Dog

January 30, 2026 at 04:49

OKAY BUT LET’S TALK ABOUT HOW TEXT-FIRST MODELS ARE LIKE THAT ONE FRIEND WHO SWORE THEY READ THE BOOK BUT JUST LOOKED AT THE BACK COVER AND MADE UP THE REST. 🤯

They’re fast? Sure. They’re easy? Absolutely. But when you show them a medical scan with a tiny tumor hidden in the corner and the label says ‘normal’, they’re gonna say ‘all good!’ while the patient is silently screaming. I’ve seen it. I’ve cried over it. I’ve filed complaints. This isn’t just tech; it’s ethics with a side of negligence.

Vision-first models? They don’t skip the details. They notice the asymmetry. They catch the shadow. They don’t need a caption to know something’s wrong. And yeah, they’re slower, harder to deploy, and have worse documentation, but so was the first iPhone. And look where we are now.

Stop optimizing for convenience. Start optimizing for truth. The world doesn’t need more AI that reads labels. It needs AI that sees reality.

Also, I’m starting a petition. #SeeTheImageNotTheCaption

Nick Rios

January 31, 2026 at 06:07

I think both approaches have merit, and the real win is in how they’re being combined now. The hybrid models aren’t just a compromise; they’re an evolution. Text-first gives us speed and scalability, which matters for real-world apps. Vision-first gives us depth, which matters for high-stakes decisions.

It’s not about picking one. It’s about knowing when to use which tool, or better yet, building systems that know when to switch between them. The future isn’t either/or. It’s both/and.

Also, props to the author for not oversimplifying this. Rare these days.

Amanda Harkins

January 31, 2026 at 08:18

It’s funny how we treat images like second-class citizens in AI. Like, sure, you can throw pixels at a language model, but it’s not really seeing; it’s just pattern-matching with extra steps.

Text-first feels like teaching a poet to describe a sunset by giving them a weather report. They’ll write something pretty. But they’ll miss the way the clouds bleed into the ocean. The silence between the colors.

Vision-first? That’s the poet who sat there for hours, watching. Then wrote something that made you feel it.

Still… we’re all just trying to make machines less dumb. And maybe that’s enough for now.

Jeanie Watson

January 31, 2026 at 22:27

Yeah, I read it. Text-first is fine. Vision-first is cool. Hybrids are the future. Cool. Done. Let’s move on.

Tom Mikota

February 2, 2026 at 22:08

So let me get this straight: you’re telling me that 87% of companies are using models that literally can’t see the difference between a broken part and a working one if the label says ‘normal’, and you’re calling that ‘practical’? 🤦‍♂️

And you say vision-first has ‘worse documentation’? That’s not a weakness; it’s a feature. If you can’t document it, maybe you shouldn’t be using it in production. Also, ‘30% more VRAM’? That’s not a cost; it’s a warning sign.

And why are we still calling this ‘pretraining’? It’s not pretraining; it’s patching. Text-first is just slapping a vision encoder onto a language model like duct tape on a leaky pipe. Vision-first is building the whole damn plumbing system from scratch.

Stop pretending convenience is innovation. It’s not. It’s laziness with a PowerPoint deck.

Adithya M

February 3, 2026 at 13:54

Tom, you’re overreacting. But also… you’re right. I’ve seen vision-first models catch defects in turbine blades that text-first models missed by 100%. The company didn’t want to switch because ‘we already paid for the Llama stack’. But we lost 3 million in recalls last year because of those blind spots.

Hybrid is the answer. But we need to stop pretending text-first is ‘good enough’. It’s not. It’s a band-aid. And band-aids don’t fix broken bones.

Also, the EU AI Act is coming. You think they’ll let you deploy a model that ignores visual context in healthcare? Wake up. The cost of ‘easy’ is gonna come due soon.