Tag: data poisoning

Incident Response for AI-Introduced Defects and Vulnerabilities

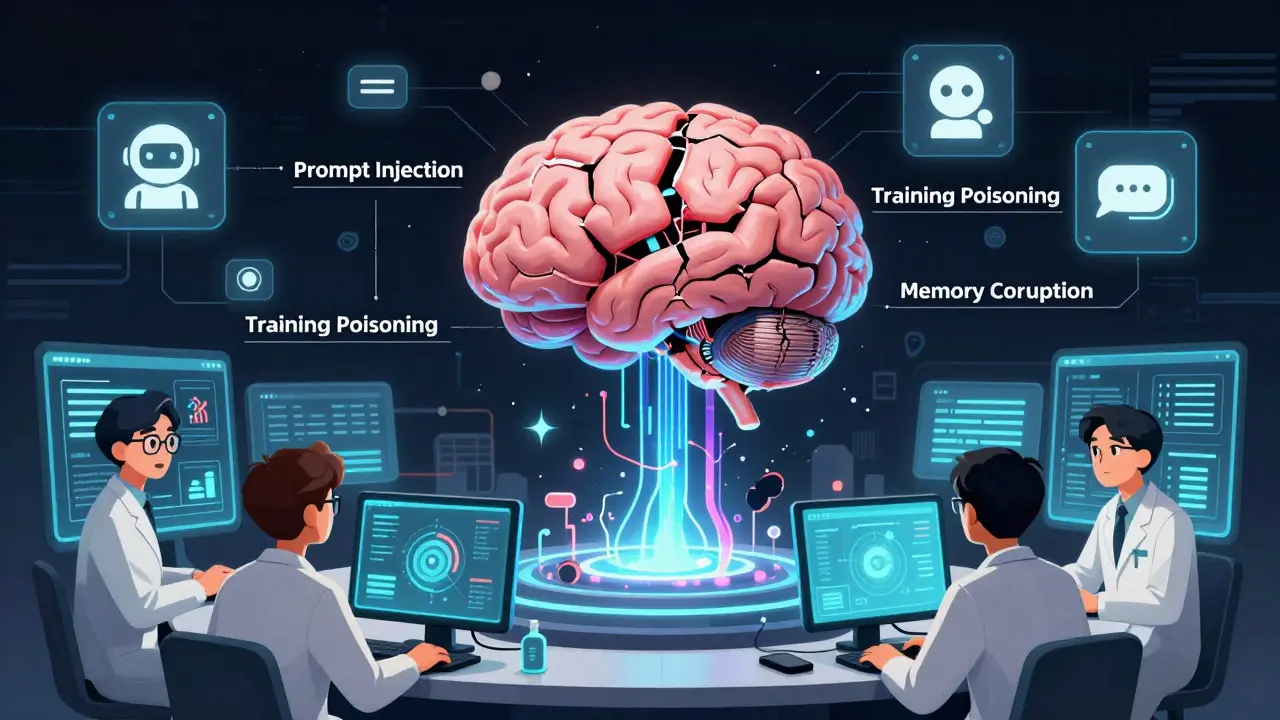

AI systems introduce distinct security risks, such as prompt injection and data poisoning, that traditional incident response processes are not designed to handle. Learn how to build a specialized response plan using the CoSAI framework and AI-specific monitoring.