Tag: text-first pretraining

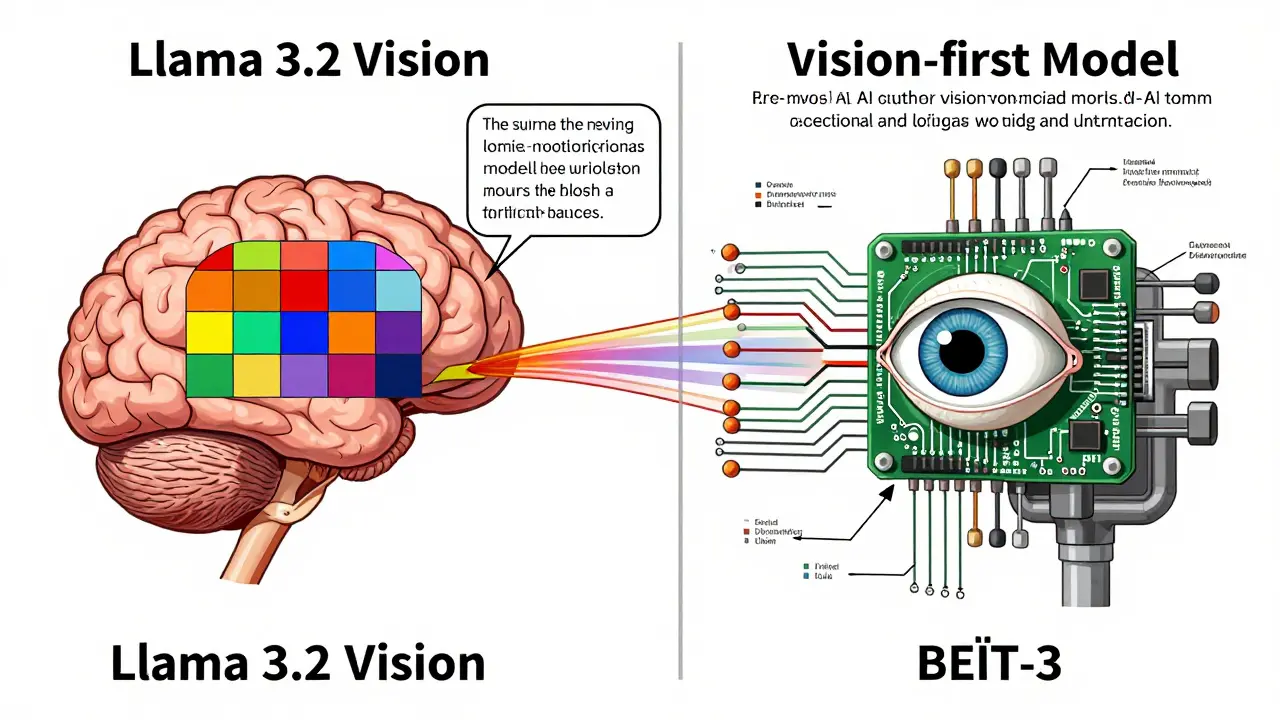

Vision-First vs Text-First Pretraining: Which Path Leads to Better Multimodal LLMs?

Vision-first and text-first pretraining offer two paths to multimodal AI. Text-first dominates industry use for its speed and compatibility; vision-first leads in research for deeper visual understanding. The future belongs to hybrids that combine both.
