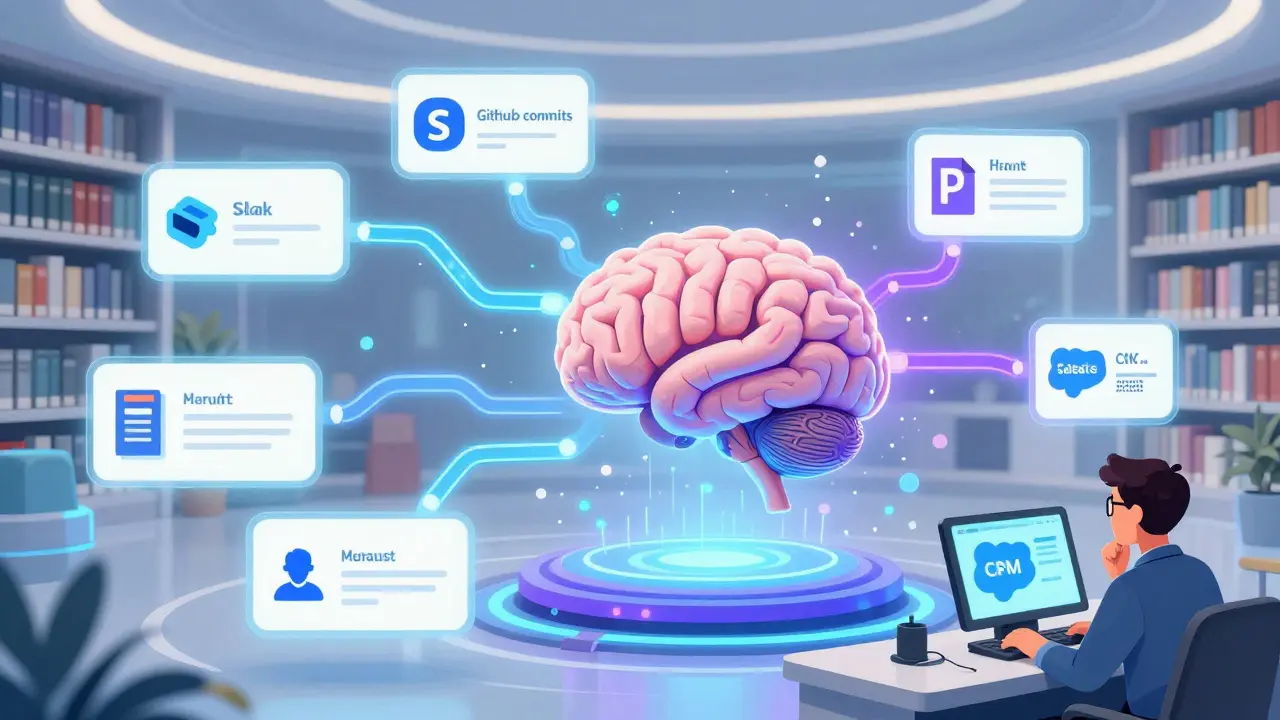

When companies first started using generative AI, they hit a wall. The models were smart, sure, but they didn’t know what was happening inside the company using them. Slack messages, updated SOPs, internal wikis, GitHub code repos: all of it was invisible to the AI. If you asked a question about last quarter’s project status, the model would guess. And guesses from large language models? They’re often wrong, sometimes dangerously so.

That’s where enterprise RAG comes in. It’s not just another AI buzzword. It’s the architecture that lets your LLMs access live, accurate, company-specific data without retraining. And it works through three core pieces: connectors, indices, and caching. Get those right, and you go from slow, unreliable AI to something that feels like a supercharged employee who’s read every document, attended every meeting, and remembers every detail.

Connectors: The Data Pipeline

Before you can retrieve anything, you need to get the data in. Enterprise RAG starts with connectors: software bridges that pull information from wherever it lives. This isn’t just about uploading PDFs. Real systems connect to:

- SharePoint and OneDrive (corporate documents)

- Slack and Microsoft Teams (real-time chat history)

- Google Workspace (Docs, Sheets, Drive files)

- GitHub and GitLab (code, commit messages, issue threads)

- CRM systems like Salesforce (customer interactions)

- Internal wikis and Confluence pages

Each connector has to handle format, access permissions, and update frequency. A Slack message from yesterday needs to be indexed as fast as possible. A quarterly financial report? It can wait for a nightly batch. The key is not to index everything at once. Smart teams map which sources are critical for which use cases. Sales teams need CRM data. Engineers need code repos. HR needs policy docs. You don’t need to connect to every system, just the ones that matter for your AI’s job.

And don’t forget change detection. If someone edits a document in Google Docs, the system should know. That’s where Change Data Capture (CDC) comes in. Instead of re-indexing the whole company’s knowledge base every hour, CDC listens for updates and only reprocesses what changed. It’s like having a librarian who only shuffles the books that were moved, not the entire shelf.
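The "only reprocess what changed" idea can be sketched in a few lines. This is a simplified, polling-based stand-in that compares content hashes between runs; real CDC usually subscribes to change events (webhooks, change logs) instead. The function names and the `known` store are hypothetical, not part of any connector's API.

```python
import hashlib


def fingerprint(text: str) -> str:
    """Stable content hash used to detect edits."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def detect_changes(
    known: dict[str, str], current_docs: dict[str, str]
) -> tuple[list[str], list[str]]:
    """Compare stored fingerprints with the current source snapshot.

    known:        doc_id -> fingerprint recorded at the last indexing run
    current_docs: doc_id -> current text pulled by a connector
    Returns (ids to re-index, ids to delete) and updates `known` in place.
    """
    to_index = []
    for doc_id, text in current_docs.items():
        fp = fingerprint(text)
        if known.get(doc_id) != fp:  # new document or edited content
            to_index.append(doc_id)
            known[doc_id] = fp

    # Anything we indexed before that no longer exists at the source.
    to_delete = [doc_id for doc_id in known if doc_id not in current_docs]
    for doc_id in to_delete:
        del known[doc_id]
    return to_index, to_delete
```

On the first run every document counts as changed. After that, an unchanged document costs one hash comparison instead of a full re-embed.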

Indices: Where Knowledge Lives

Once data is in, it needs to be organized so the AI can find it fast. That’s the job of indices. And here’s where most RAG systems fail: they use only one kind.

Modern enterprise RAG uses hybrid indexing. Two types work together:

- Vector indices (like FAISS, Pinecone, or Qdrant) store text as numerical embeddings, which are mathematical representations of meaning. When you ask, “What’s our policy on remote work?”, the system converts your question into a vector and finds the closest matches in the database. This handles semantic search: it understands that “work from home” and “telecommuting” mean the same thing.

- BM25 indices (used in Elasticsearch or OpenSearch) rank documents by exact keyword matches. They’re great for finding documents with the phrase “HR policy” or “Q3 budget.” But they don’t get nuance.

Why use both? Because neither works alone. A BM25 index will miss “Q2 financial summary” if the document calls it a “second quarter earnings report.” It might also return 20 documents with the word “budget” but none that actually answer your question. A vector index handles the paraphrase but can rank an exact identifier, such as a ticket number or a project code name, below loosely related text. Together, they cover each other’s blind spots.
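One common way to merge the two result lists is reciprocal rank fusion (RRF). The article doesn't say which fusion method a given stack uses, so treat RRF, the constant `k=60`, and the document ids below as assumptions for illustration.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document ids into one.

    Each document scores 1 / (k + rank) per list it appears in, so a
    document ranked well by both indices beats one ranked well by only one.
    """
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest score first; ties broken by id so the output is deterministic.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from the keyword index and the vector index.
bm25_hits = ["q3-budget", "hr-policy", "travel-policy"]
vector_hits = ["travel-policy", "q3-budget", "remote-work"]
fused = reciprocal_rank_fusion([bm25_hits, vector_hits])
```

RRF only needs ranks, not raw scores, which is convenient here because BM25 scores and cosine similarities live on different scales.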

Storage matters too. Once your documents are chunked into hundreds of millions of vectors, keeping all of them in RAM gets expensive fast. That’s where disk-based solutions like DiskANN, built on the Vamana graph index, come in. They let you index billions of vectors on cheap SSDs while keeping search latency low. Some teams even split storage: hot data (last 90 days) in RAM, older docs on disk. It’s like keeping your most-used books on your desk and the rest in the basement: fast access when you need it, and still there when you don’t.

Caching: The Secret Weapon

Here’s the truth no one talks about: 70% of enterprise RAG queries are repeats. Someone asks, “What’s our onboarding checklist?” five times a day. Another asks, “Who’s the product lead for Project Orion?” ten times a week. If you’re re-running the full retrieval and generation pipeline every time, you’re wasting time, money, and compute.

Semantic caching fixes this. Instead of processing the same question again, you store the query and its answer. Next time someone asks the same thing-or something close-you return the cached result.

How close is close? That’s where similarity thresholds come in. Most teams use 0.85 to 0.95. If your system uses a 0.90 threshold, it means: “Only reuse an answer if the new question’s embedding scores at least 0.90 in similarity (typically cosine similarity) against a past one.” High-stakes domains like legal or medical use 0.95. Customer support teams might drop to 0.85 to save costs, accepting a small risk of returning an answer that was written for a slightly different question.

Redis is the go-to tool for this. It’s fast, simple, and integrates cleanly with LangChain. With RedisSemanticCache, you can store query embeddings and their responses. A cache hit? Response time drops from 2.5 seconds to under 50 milliseconds. That’s at least 50x faster. Users notice. Managers notice. Your cloud bill notices too.

But caching doesn’t stop at query-response pairs. The next level is RAGCache, which caches internal model states: specifically, the key-value (KV) tensors generated during the LLM’s attention mechanism. Think of it like saving the brain’s scratchpad after it’s done thinking about a document. When the same document comes up again, you don’t recompute all those intermediate steps. You just reuse them. This cuts prefill time, which dominates time-to-first-token when the retrieved context is long, by 60% or more.
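To make the reuse idea concrete, here is a toy sketch. It is not RAGCache’s implementation: the "state" is a placeholder for real KV tensors, and `compute_state` is a hypothetical callback standing in for the expensive prefill step. One assumption worth flagging: the cache is keyed by the ordered prefix of document ids, because a document’s KV values depend on the tokens that came before it.

```python
class PrefixStateCache:
    """Reuse per-document 'prefill' work across queries that share a prefix."""

    def __init__(self, compute_state):
        # compute_state(previous_state, doc_id) -> new_state (the costly step)
        self._compute_state = compute_state
        self._states = {}   # tuple of doc ids -> cached state
        self.computed = 0   # how many documents were actually prefetched into state

    def prefill(self, doc_ids):
        # Find the longest already-cached prefix of this document sequence.
        state, start = None, 0
        for end in range(len(doc_ids), 0, -1):
            key = tuple(doc_ids[:end])
            if key in self._states:
                state, start = self._states[key], end
                break
        # Compute only the documents past that prefix, caching as we go.
        for i in range(start, len(doc_ids)):
            state = self._compute_state(state, doc_ids[i])
            self.computed += 1
            self._states[tuple(doc_ids[: i + 1])] = state
        return state


# Placeholder "model": the state is just the tuple of documents seen so far.
kv_cache = PrefixStateCache(lambda prev, doc: (prev or ()) + (doc,))
kv_cache.prefill(["policy", "faq"])          # computes 2 documents
kv_cache.prefill(["policy", "faq", "memo"])  # computes only "memo"
```

In a real deployment the cached states are large tensors, so eviction policy and memory tiering matter far more than they do in this sketch.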

And then there’s ARC, the breakthrough from March 2025. Instead of caching everything that’s been asked before, ARC figures out which pieces of knowledge are most likely to be reused based on how they sit in the embedding space. It’s like predicting which books on your shelf will be picked up next, not by how often they’ve been read, but by their shape, size, and location on the shelf. The result? With just 0.015% of your total data stored, ARC answers 79.8% of questions. That’s not caching. That’s intelligence.

Sync, Scale, and Stability

Here’s the hard part: your data is always changing. Someone adds a new policy. A developer pushes a code update. A product manager revises the roadmap. Your RAG system must keep up.

Most teams use hybrid sync: batch updates overnight for historical docs, and real-time CDC for live channels like Slack or GitHub. This balances freshness with performance. Pure real-time indexing? Too slow. Pure batch? You’re answering questions with data that’s two days old. That’s dangerous.
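A hybrid sync dispatcher can be sketched as follows. The `HybridSync` class, the source names, and the `index_fn` callback are all hypothetical; the point is only the split between "index now" and "queue for tonight".

```python
class HybridSync:
    """Index live sources immediately; queue everything else for a nightly batch."""

    def __init__(self, realtime_sources, index_fn):
        self.realtime_sources = set(realtime_sources)
        self.index_fn = index_fn      # called with a list of doc ids to (re)index
        self.batch_queue = []

    def on_change(self, source: str, doc_id: str) -> None:
        if source in self.realtime_sources:
            self.index_fn([doc_id])   # live channel: index right away
        else:
            self.batch_queue.append(doc_id)

    def run_nightly_batch(self) -> int:
        """Index queued documents once each; return how many were processed."""
        pending = list(dict.fromkeys(self.batch_queue))  # dedupe, keep order
        self.batch_queue.clear()
        if pending:
            self.index_fn(pending)
        return len(pending)


indexed_batches = []
sync = HybridSync({"slack", "github"}, indexed_batches.append)
sync.on_change("slack", "msg-1")          # indexed immediately
sync.on_change("sharepoint", "q3-report")
sync.on_change("sharepoint", "q3-report") # edited twice, indexed once tonight
```

Deduplicating the queue matters: a report edited twenty times during the day should still cost one re-index at night.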

Multi-server setups need shared caching. If you have five LLM inference nodes, giving each one its own private cache is wasteful: the same work gets computed and stored five times. Solutions like RAG-DCache use a central, distributed cache, partly in RAM and partly on NVMe SSDs, with prefetching that predicts which documents will be needed next based on query queues. Clustered queries? Group them. If 12 users ask about the same project, you fetch the documents once and serve all 12 from the same cached KV states.
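The "fetch once, serve many" step can be illustrated with a small grouping sketch. This is not RAG-DCache’s code; `fetch_context` is a hypothetical callback standing in for loading documents (or their cached states), and the queue format is an assumption.

```python
from collections import defaultdict


def group_by_documents(pending):
    """Group queued (user, doc_ids) pairs by the set of documents they need."""
    groups = defaultdict(list)
    for user, doc_ids in pending:
        groups[tuple(sorted(doc_ids))].append(user)
    return dict(groups)


def serve_clustered(pending, fetch_context):
    """Fetch each distinct document set once and share it across its users."""
    answers = {}
    for doc_key, users in group_by_documents(pending).items():
        context = fetch_context(doc_key)  # one fetch per distinct document set
        for user in users:
            answers[user] = context
    return answers


# Twelve users asking about the same project, plus one unrelated query.
fetch_calls = []

def fetch_context(doc_key):
    fetch_calls.append(doc_key)
    return "ctx:" + ",".join(doc_key)

queue = [(f"user{i}", ["orion-status", "orion-roadmap"]) for i in range(12)]
queue.append(("user12", ["onboarding"]))
answers = serve_clustered(queue, fetch_context)
```

Thirteen queries end up costing two fetches. The sketch ignores document order when grouping, which is fine for fetching but would need care if the shared object were order-dependent KV states.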

And for graph-based RAG, where answers require chaining multiple documents together, SubGCache precomputes cached states for common subgraphs. Instead of fetching and processing five documents per query, you fetch one representative subgraph and reuse it across similar queries. Result? Up to 6.68x faster time-to-first-token. And yes, accuracy improves because the model gets more consistent context.

What Happens When You Skip This?

Without proper connectors? Your AI is blind to your latest policies. Without hybrid indices? It misses half the answers. Without caching? You’re burning through API calls and GPU time like it’s free. A single enterprise RAG system can generate 50,000 queries a day. Without caching, that’s 50,000 full LLM inferences. With caching? Maybe 10,000. The difference? A $120,000 monthly cloud bill drops to $25,000.
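The numbers above can be sanity-checked with a little arithmetic. The sketch assumes the bill scales linearly with the number of full inferences, which the figures above do not state; the function names are hypothetical.

```python
def full_inferences(queries_per_day: int, hit_rate: float) -> int:
    """Queries that miss the cache and need a full LLM inference."""
    return round(queries_per_day * (1.0 - hit_rate))


def scaled_cost(baseline_cost: float, baseline_inferences: int, inferences: int) -> float:
    """Cost if spend were strictly proportional to full inferences (an assumption)."""
    return baseline_cost * inferences / baseline_inferences


# Going from 50,000 to 10,000 full inferences implies an 80% cache hit rate.
implied_hit_rate = 1 - 10_000 / 50_000

# Under linear scaling, $120,000 becomes $24,000, close to the $25,000 quoted.
linear_estimate = scaled_cost(120_000, 50_000, 10_000)

# For comparison: a 70% hit rate would leave 15,000 full inferences per day.
at_70_percent = full_inferences(50_000, 0.70)
```

The small gap between the linear estimate and the quoted figure is plausible, since the cache itself (embedding lookups, Redis capacity) is not free.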

And the user experience? Sluggish, unreliable AI turns into something that feels like magic. Answers come back instantly. The system remembers what you asked last week. It doesn’t contradict itself. It doesn’t hallucinate about policies that were updated yesterday.

This isn’t theoretical. Companies like Siemens, Adobe, and a dozen Fortune 500s have deployed this stack. The ones that succeeded? They didn’t start with the fanciest LLM. They started with smart connectors, clean indices, and aggressive caching.

Build your RAG system like you’re building a library, not a one-time data dump. Design for updates. Optimize for reuse. Cache like your budget depends on it. Because it does.