Tag: token-level logging

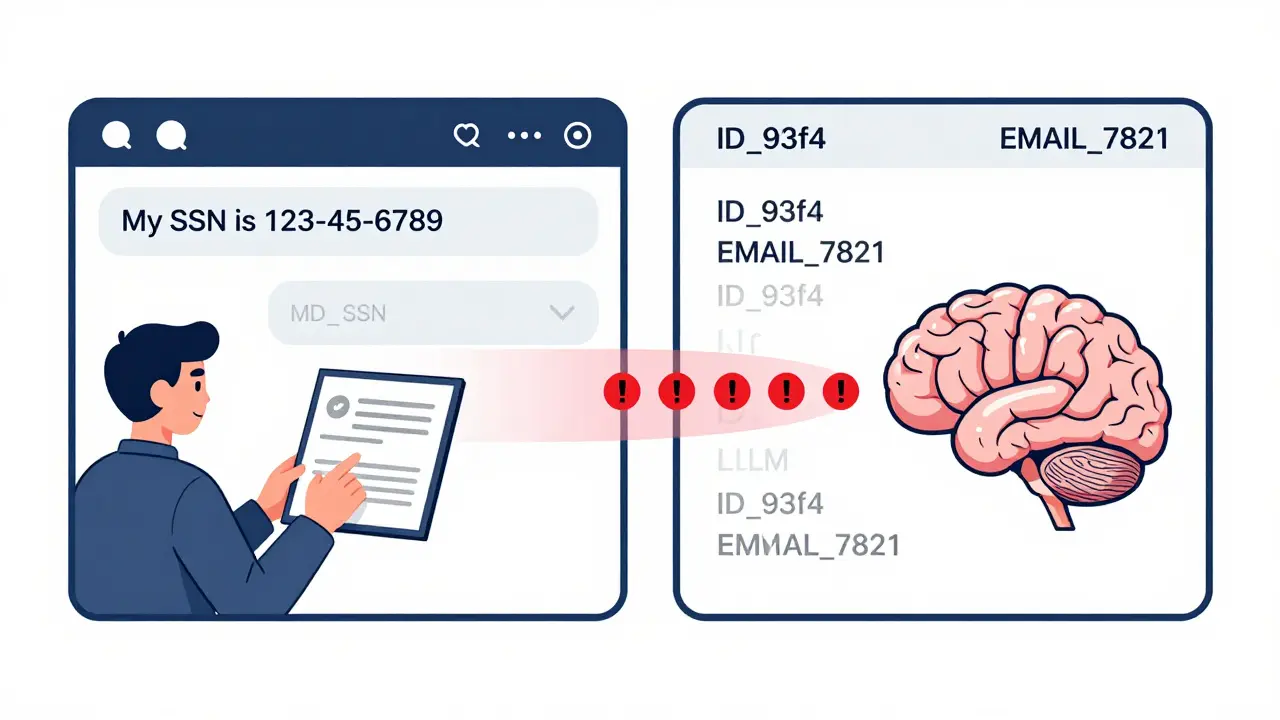

Token-Level Logging Minimization: How to Protect Privacy in LLM Systems Without Killing Performance

Token-level logging minimization keeps sensitive data out of LLM logs by replacing PII with anonymous tokens before anything is stored. Learn how it works, why regulations such as GDPR and the EU AI Act call for it, and how to implement it without hurting performance.
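As a rough illustration of the idea, here is a minimal Python sketch that swaps PII for placeholder tokens before a log record reaches any handler. The names `PII_PATTERNS`, `minimize`, and `PIIMinimizingFilter` are hypothetical, and the two regular expressions are simplified assumptions rather than a complete PII detector. A production system would likely need a broader detection step.

```python
import logging
import re

# Hypothetical, deliberately simple patterns; real PII detection needs more coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def minimize(text: str) -> str:
    """Replace each detected PII value with an anonymous placeholder token."""
    for label, pattern in PII_PATTERNS.items():
        seen: dict[str, str] = {}

        def to_token(match: re.Match) -> str:
            value = match.group(0)
            # The same value maps to the same token within one message.
            if value not in seen:
                seen[value] = f"<{label}_{len(seen) + 1}>"
            return seen[value]

        text = pattern.sub(to_token, text)
    return text


class PIIMinimizingFilter(logging.Filter):
    """Logging filter that rewrites the record before any handler stores it."""

    def filter(self, record: logging.LogRecord) -> bool:
        # Format the message first so PII passed via args is also covered.
        record.msg = minimize(record.getMessage())
        record.args = None
        return True
```

Attaching the filter with `logger.addFilter(PIIMinimizingFilter())` means the raw prompt text is never written out, only the tokenized version. The regex pass runs once per log call, so its cost grows with message length rather than with log volume already stored.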
