When your team starts using large language models for customer support, internal documentation, or even drafting legal contracts, you might think: it’s just an AI chatbot. But here’s the hard truth - if you’re using ChatGPT, Gemini, or any public API-based LLM to process sensitive data, you’re already risking your company’s compliance, reputation, and possibly your job.

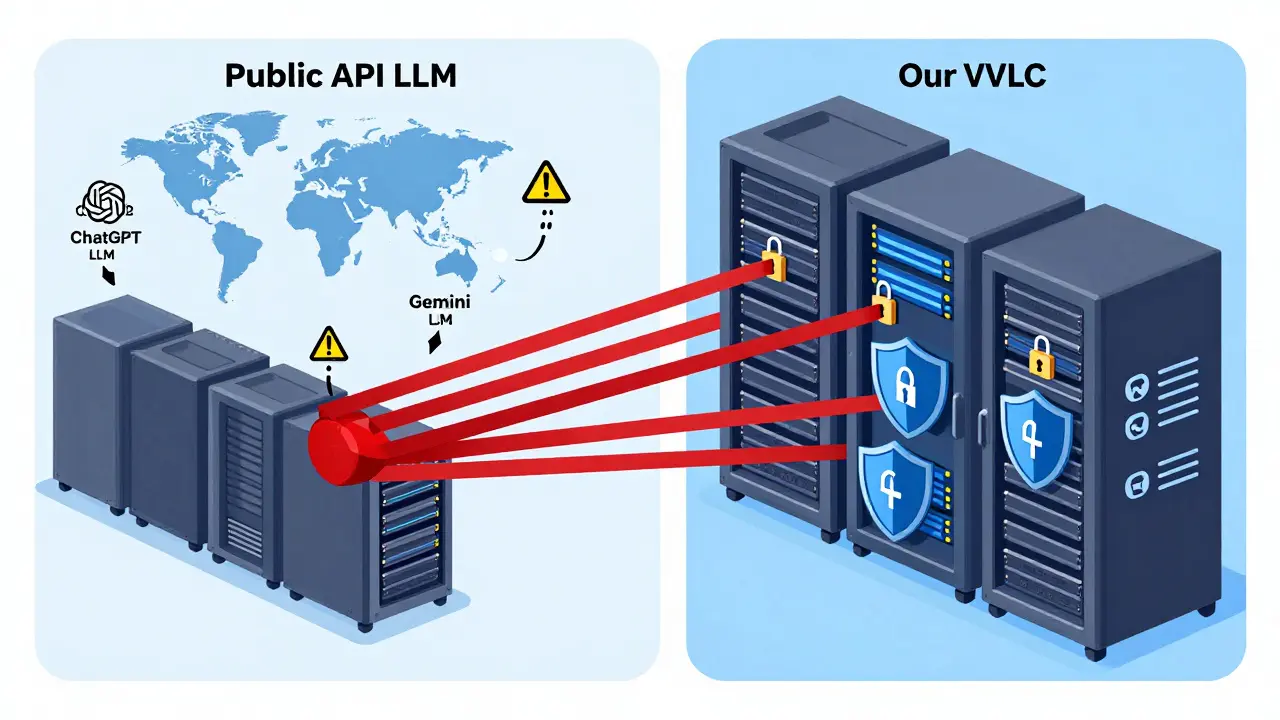

There’s a massive difference between using a public LLM through an API and running your own private model. One keeps your data locked inside your network. The other sends it flying across the internet to servers you can’t see, touch, or audit. And once it’s gone, you can’t get it back.

What Happens When You Use a Public API LLM?

Every time someone types a question into ChatGPT or Google Gemini using your company’s login, that prompt - along with any attached file, customer list, product roadmap, or internal memo - gets sent to an external server. A signed data processing agreement doesn’t change that. It’s a contractual promise about how the provider will use your data, not a technical control: you can’t verify from the outside whether your prompts were retained, logged, or used to train future versions of the model.

Matillion’s research found that over 60% of enterprises using public LLMs for internal tasks accidentally exposed confidential information. A single HR manager who pastes the company’s proprietary HR templates into a prompt and asks, “What’s the severance policy for employees in California?” could be feeding those templates into a global training dataset. And there’s no way to undo that.

Even worse - you have zero visibility into what happens after your data leaves your system. Did the provider store it? Did they log it? Did someone else’s employee accidentally trigger a prompt that mixed your data with theirs? You can’t answer those questions. Regulators won’t accept “we trusted them” as proof of compliance.

Why Data Sovereignty Isn’t Just a Buzzword

Data sovereignty means your data stays where you say it should. In Europe, GDPR restricts transfers of personal data about people in the EU to countries outside the European Economic Area unless strict safeguards are in place. In the U.S., HIPAA requires protected health information to be handled under defined administrative, physical, and technical safeguards. California’s CCPA gives consumers the right to know how their data is used - and who it’s shared with.

Public LLMs ignore all of that. Their infrastructure is global, multi-tenant, and designed for scale - not compliance. Your data might be processed in Ireland one minute and Singapore the next. You can’t control it. You can’t even track it.

Private LLMs, on the other hand, run inside your own AWS, Azure, or Google Cloud environment. Data never leaves your virtual private cloud (VPC). You choose the region. You control the encryption keys. You decide who can access it. If your compliance officer asks, “Where was this customer’s data processed?” you can point to a network diagram and say, “Here. In our us-east-1 subnet. Encrypted with our KMS key.”
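On AWS, “you decide who can access it” usually comes down to IAM. Here is a minimal sketch, assuming the model is served through Amazon Bedrock behind an interface VPC endpoint: it builds a policy that allows `bedrock:InvokeModel` only on an approved model list, only through one VPC endpoint (`aws:SourceVpce`), and only in one region (`aws:RequestedRegion`). The endpoint ID and model ARN are placeholders and `build_llm_access_policy` is a hypothetical helper; confirm which condition keys your target service populates before relying on them.

```python
import json

# Placeholder values: substitute your own endpoint ID, region, and approved models.
VPC_ENDPOINT_ID = "vpce-0123456789abcdef0"
REGION = "us-east-1"
APPROVED_MODELS = [
    "arn:aws:bedrock:us-east-1::foundation-model/anthropic.claude-3-haiku-20240307-v1:0",
]


def build_llm_access_policy(vpce_id: str, region: str, model_arns: list[str]) -> dict:
    """Return an IAM policy document (hypothetical helper) that allows model
    invocation only for approved models, only via one VPC endpoint, in one region."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeApprovedModelsPrivately",
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel"],
                "Resource": model_arns,
                "Condition": {
                    # Keys inside one condition operator are ANDed: the request must
                    # come through this VPC endpoint AND target this region.
                    "StringEquals": {
                        "aws:SourceVpce": vpce_id,
                        "aws:RequestedRegion": region,
                    }
                },
            }
        ],
    }


if __name__ == "__main__":
    policy = build_llm_access_policy(VPC_ENDPOINT_ID, REGION, APPROVED_MODELS)
    print(json.dumps(policy, indent=2))
```

Because IAM denies by default, a request that misses any of these conditions is refused, unless another policy attached to the same role grants broader access.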

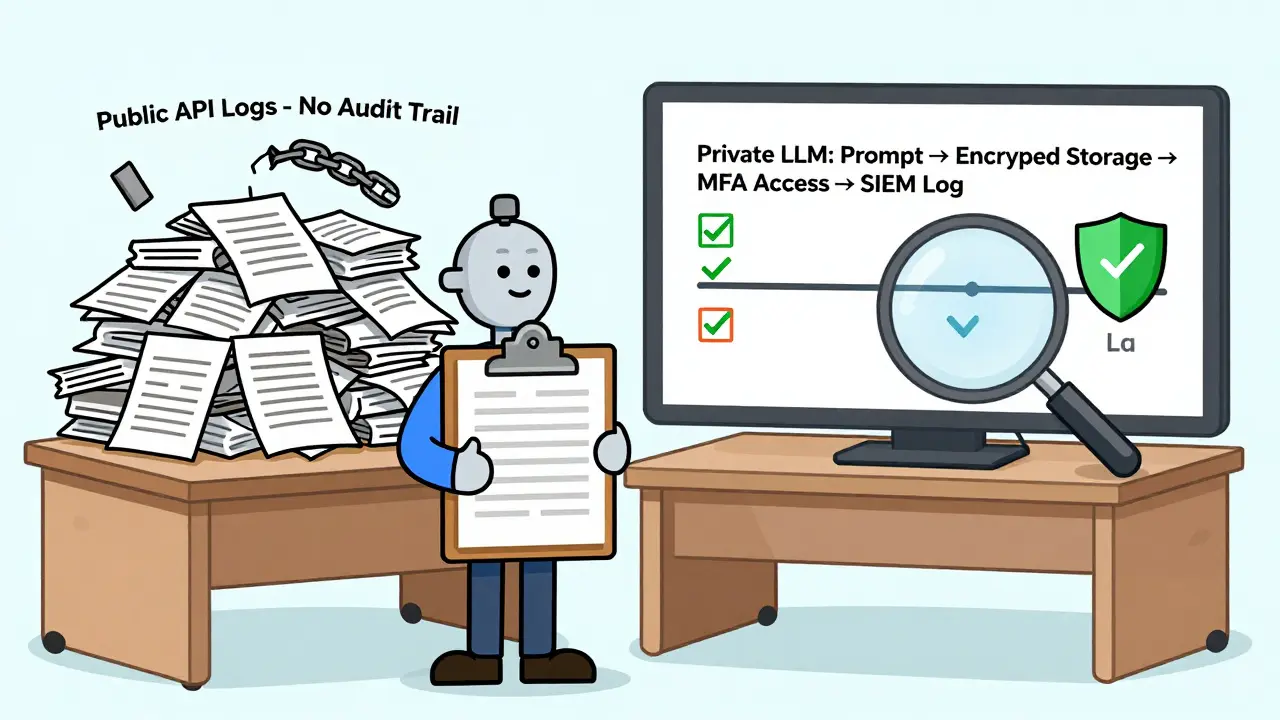

Audit Trails: The Difference Between Proof and Promises

Imagine a regulator comes knocking. They want to see exactly how your AI handled a patient’s medical record last month. What do you show them?

If you used a public API, you can only show them the log from your app: “User X submitted query at 3:14 PM.” That’s it. You have no access to the provider’s internal logs. No record of which model processed it. No proof of deletion. No trace of whether the data was cached or reused.

With a private LLM, you own the entire audit trail. Every prompt. Every response. Every API call. Every access attempt. All logged in your SIEM. All tied to user roles, MFA status, and network source IPs. You can generate a full report in minutes. Regulators love that. Auditors sleep better at night because of it.
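What one entry in that trail might contain can be sketched as a structured event per prompt/response pair. This is a minimal sketch, not a standard schema: the field names and the `audit_record` / `to_log_line` helpers are hypothetical, and it stores SHA-256 hashes of the text (a design choice, assumed here) so downstream log stores can prove which text was processed without holding a copy of it. The full text could live in a separately restricted store.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(user_id, role, mfa_verified, source_ip, model_id, prompt, response):
    """Build one audit event (hypothetical schema) for a single LLM call."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "event": "llm.invocation",
        "user_id": user_id,
        "role": role,
        "mfa_verified": mfa_verified,
        "source_ip": source_ip,
        "model_id": model_id,
        # Hashes identify exactly which text was processed without copying
        # sensitive content into every downstream log store.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "response_sha256": hashlib.sha256(response.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
        "response_chars": len(response),
    }


def to_log_line(record):
    """Serialize as one compact JSON object per line (JSON Lines) for SIEM ingestion."""
    return json.dumps(record, separators=(",", ":"), sort_keys=True)


if __name__ == "__main__":
    rec = audit_record("u123", "support_agent", True, "10.0.4.17",
                       "private-llm-v1", "Summarize ticket 4411", "Customer reports ...")
    print(to_log_line(rec))
```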

Third-Party Risk Just Got Real

Under the UK Financial Conduct Authority’s (FCA) outsourcing rules, an external AI service that processes client data can qualify as a “material outsourcing” arrangement. When it does, you need:

- Due diligence reports on the vendor’s security practices

- Contractual clauses that limit data usage

- Continuous monitoring of their uptime and breach history

- Exit plans if they get hacked or go out of business

Now imagine you’re running a private LLM on your own Azure subscription. Who’s the new vendor? Nobody. Your cloud provider is already covered by the governance you have in place for the rest of your infrastructure, and the LLM is just another application running on it, like your CRM or ERP system. No extra vendor risk. No separate governance layer. No legal team spending months negotiating terms.

That’s not a convenience. That’s a massive reduction in operational and regulatory overhead.

Network Security: You Can’t Secure What You Don’t Control

Public API LLMs require your app to make calls over the public internet. That means:

- Data travels through uncontrolled networks

- You can’t restrict which IP addresses can call the API

- You can’t apply your own firewall rules or zero-trust policies

- Encryption? You rely on the provider’s implementation

Private LLMs? You deploy them in private subnets. You lock them behind VPC endpoints like AWS PrivateLink. You enforce MFA for every user accessing the model. You rotate encryption keys quarterly. You monitor for unusual query patterns - like someone trying to extract 500 customer emails in one hour.

These aren’t theoretical security best practices. These are baseline requirements for financial institutions, healthcare providers, and government contractors. And public APIs don’t let you meet them.
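The last control above, spotting someone who pulls 500 customer emails in an hour, can be sketched as a per-user sliding-window counter. This is a minimal sketch under stated assumptions: it scans model responses with a simple email regex, counts distinct addresses per user over the last hour, and expects timestamps to arrive in increasing order. The class name and thresholds are hypothetical; a production detector would feed your SIEM rather than return a boolean.

```python
import re
from collections import defaultdict, deque

# Simple illustrative pattern; it will not catch every valid address.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


class EmailExfiltrationMonitor:
    """Flags a user whose responses contained too many distinct email
    addresses within a sliding time window (hypothetical helper)."""

    def __init__(self, threshold=500, window_seconds=3600):
        self.threshold = threshold
        self.window = window_seconds
        # user_id -> deque of (timestamp, email) seen in that user's responses
        self._events = defaultdict(deque)

    def observe(self, user_id, response_text, ts):
        """Record emails found in one response; return True if the user is over threshold.
        Assumes ts (seconds) is non-decreasing for each user."""
        events = self._events[user_id]
        for email in EMAIL_RE.findall(response_text):
            events.append((ts, email.lower()))
        # Evict events older than the window
        while events and events[0][0] <= ts - self.window:
            events.popleft()
        distinct = {email for _, email in events}
        return len(distinct) >= self.threshold


if __name__ == "__main__":
    monitor = EmailExfiltrationMonitor(threshold=3)
    print(monitor.observe("alice", "contact a@x.com", ts=0))
    print(monitor.observe("alice", "b@x.com and c@x.com", ts=10))
```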

Intellectual Property and Competitive Edge

Let’s say your company spent two years building a custom dataset of customer service interactions to train a model that predicts churn with 92% accuracy. You want to fine-tune your LLM on it.

If you use a public API, you’re uploading that dataset - your crown jewel - to a third party. Even if they claim it’s “anonymized,” there’s no guarantee it won’t be used to improve their next model. Competitors could end up with a version of your system - trained on your data - without ever paying for it.

With a private LLM, your data never leaves your environment. You can fine-tune, test, and deploy without exposing your IP. Your competitive advantage stays yours.

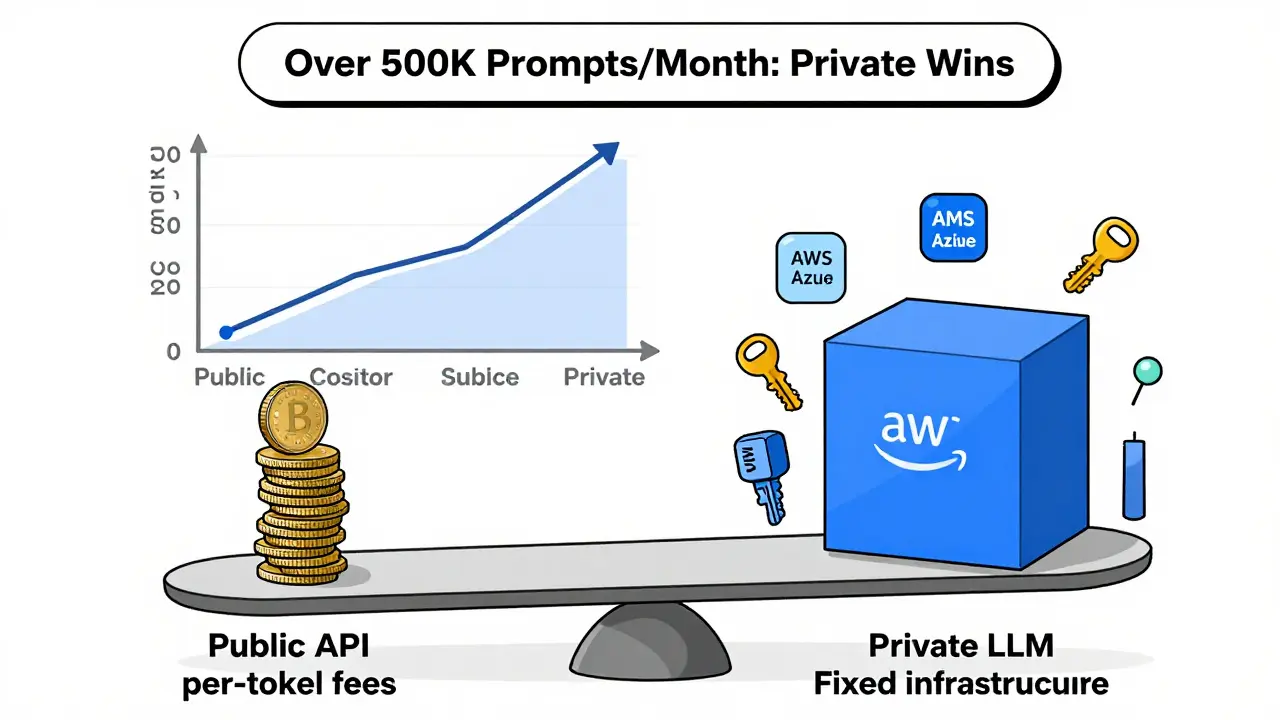

Costs: The Hidden Trap of Public APIs

At first, public APIs look cheaper. $0.01 per 1,000 tokens? Sounds great. But what happens when your team starts using it daily? When support agents rely on it for 200 queries a day? When legal drafts go through 500 prompts per week?

Matillion found that companies processing over 1 million prompts monthly often paid more for public API usage than they would for a private deployment. Why? Because a self-hosted or provisioned-capacity private deployment runs on fixed infrastructure. You pay for compute, storage, and bandwidth - not per query.

Once you hit scale, private becomes cheaper. And you get more: full control, security, compliance, and auditability. Public APIs? You’re paying for convenience - and it adds up.
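The break-even arithmetic is simple enough to run yourself. In this minimal sketch only the $0.01 per 1,000 tokens comes from the text; the 1,000-token average per prompt (prompt plus response) and the $10,000 monthly private cost are made-up placeholders, and the fixed cost should include the staff time to run the deployment. With these placeholder inputs the break-even lands at about 1,000,000 prompts per month; your real numbers will move it. Note that “fixed” is itself an approximation, since a private deployment still needs more capacity as volume grows.

```python
# All inputs are placeholders: plug in your own token counts and real quotes.
PRICE_PER_1K_TOKENS = 0.01      # the illustrative API price used in the text
TOKENS_PER_PROMPT = 1_000       # assumed average of prompt + response tokens
PRIVATE_FIXED_MONTHLY = 10_000  # assumed compute, storage, bandwidth, and ops per month


def monthly_api_cost(prompts: int, tokens_per_prompt: float, price_per_1k: float) -> float:
    """Pay-per-token bill: grows linearly with the number of prompts."""
    return prompts * tokens_per_prompt / 1000 * price_per_1k


def break_even_prompts(fixed_monthly: float, tokens_per_prompt: float, price_per_1k: float) -> float:
    """Monthly prompt volume at which the API bill equals the fixed private cost."""
    cost_per_prompt = tokens_per_prompt / 1000 * price_per_1k
    return fixed_monthly / cost_per_prompt


if __name__ == "__main__":
    for prompts in (100_000, 500_000, 2_000_000):
        api = monthly_api_cost(prompts, TOKENS_PER_PROMPT, PRICE_PER_1K_TOKENS)
        cheaper = "private" if api > PRIVATE_FIXED_MONTHLY else "API"
        print(f"{prompts:>9,} prompts: API ${api:,.0f} vs private ${PRIVATE_FIXED_MONTHLY:,} -> {cheaper}")
    be = break_even_prompts(PRIVATE_FIXED_MONTHLY, TOKENS_PER_PROMPT, PRICE_PER_1K_TOKENS)
    print(f"Break-even: {be:,.0f} prompts/month")
```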

Hybrid? Maybe. But Don’t Fool Yourself

Some teams try a hybrid approach: use public LLMs for general questions, private ones for sensitive data. Sounds smart. But here’s the catch: you need a foolproof system to classify every prompt before it’s sent. One mistake - a customer’s SSN slipped into a “general FAQ” query - and you’re in violation.

Most organizations don’t have the engineering bandwidth to build and maintain that kind of real-time data classifier. And if they do, they’re better off just using private LLMs from day one.
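To see why the classifier is the hard part, here is a deliberately naive router: a minimal sketch assuming regex-based detection, where the pattern set and the `classify` / `route` helpers are hypothetical. It sends anything matching a sensitive pattern to the private model and everything else to the public one, which means every pattern it misses is a leak.

```python
import re

# Illustrative patterns only; real PII detection needs far more than regexes.
SENSITIVE_PATTERNS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
}


def classify(prompt):
    """Return the names of the sensitive patterns found in a prompt."""
    return [name for name, pat in SENSITIVE_PATTERNS.items() if pat.search(prompt)]


def route(prompt):
    """Matches go to the private model; everything else goes public,
    so anything the classifier misses is sent to the public API."""
    return "private" if classify(prompt) else "public"


if __name__ == "__main__":
    for p in ("What is our refund policy?",
              "Customer SSN is 123-45-6789, can they get a refund?",
              "SSN 123 45 6789"):
        print(route(p), "<-", p)
```

For example, `route("SSN 123 45 6789")` returns `"public"` because the pattern only knows the dashed format: exactly the kind of single mistake described above.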

The Bottom Line

If you’re in finance, healthcare, legal, or any regulated industry - or even if you just handle customer data, internal documents, or proprietary code - public API LLMs are a liability waiting to happen.

Private LLMs aren’t just more secure. They’re the only way to meet compliance, protect IP, maintain audit control, and reduce third-party risk. Yes, they require setup. Yes, you need cloud expertise. But those are investments - not costs.

The companies that win in AI aren’t the ones using ChatGPT for everything. They’re the ones who built their own secure, controlled, auditable systems. And they’re not waiting for regulations to catch up. They’re already compliant.

Can I use public LLMs if I delete the data afterward?

No. Even if you delete the prompt from your logs, the provider may still retain copies for training, caching, or debugging. There’s no technical guarantee that your data won’t be used to improve their models. Once data leaves your infrastructure, you lose control. Delete logs all you want - you can’t delete what someone else has already stored.

Are private LLMs more expensive than public APIs?

Initially, yes - you need cloud resources and skilled staff to set them up. But at scale, private LLMs are often cheaper. Public APIs charge per token, so as usage grows, so does your bill. Private deployments on dedicated infrastructure have largely fixed costs. Past roughly 1 million prompts per month, the volume Matillion’s research points to, private usually wins on cost - and gives you security and compliance you can’t buy with API credits.

What if my company doesn’t handle sensitive data?

Even if you think your data isn’t sensitive, it might be. Internal meeting notes, product roadmaps, employee emails, and even draft marketing copy can contain information that, if leaked, harms your competitive position. Also, regulators don’t care if you think it’s harmless - if your LLM processes personal data about people in the EU, GDPR applies. Best practice: assume everything is sensitive until proven otherwise.

Can I use AWS Bedrock or Azure OpenAI as a private LLM?

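Yes - and that’s exactly what you should do. Amazon Bedrock and Azure OpenAI Service host models like Llama 3, Claude, or GPT-4 on infrastructure the provider manages, but you can reach them through private endpoints (AWS PrivateLink, Azure Private Link) so traffic stays off the public internet and in the region you choose. Both providers state that your prompts and outputs aren’t used to train their models, and you control access, encryption, and logging through your own cloud account. These aren’t consumer chat apps; they’re enterprise-grade LLM platforms designed for compliance.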

Do I need a data scientist to run a private LLM?

Not necessarily. You need engineers who know how to set up cloud networks, manage IAM roles, and monitor performance. You don’t need to train the model yourself. Managed services from AWS, Azure, and Snowflake handle model hosting and updates. Your team just needs to connect their apps securely and control access. Think of it like using a managed database - you don’t need to be a DBA to use it.