When you type a question into an AI chatbot, you assume it’ll give you a helpful answer. But what if your input accidentally triggers something dangerous? What if someone else’s comment-full of hate, lies, or threats-gets fed straight into the same system? That’s the real problem behind content moderation pipelines for user-generated inputs to LLMs. It’s not about censorship. It’s about survival.

Why This Isn’t Just Another Spam Filter

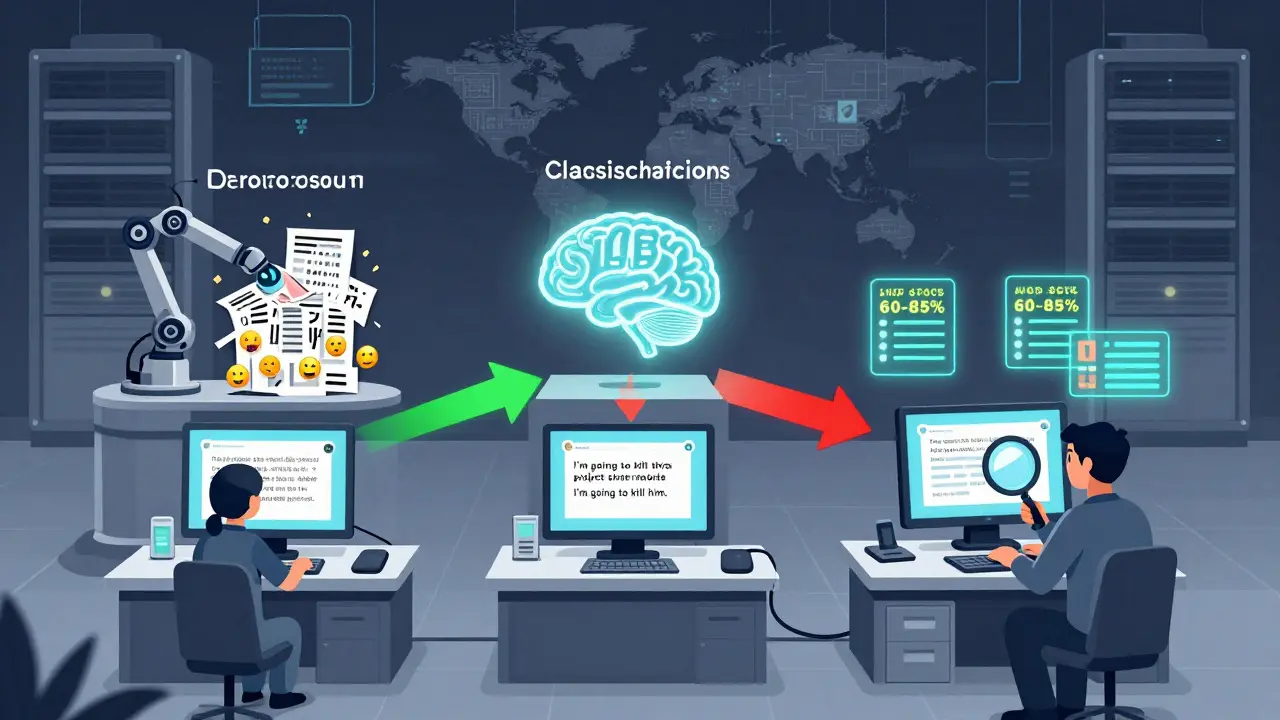

Ten years ago, moderating comments meant scanning for bad words. If someone typed "kill" or "fuck," the system flagged it. Simple. But LLMs don’t work like that. They understand context. So "I’m going to kill this project" and "I’m going to kill him" mean completely different things. A keyword filter can’t tell the difference. And that’s why old-school tools fail. Modern systems need to know intent. Not just words. Not just patterns. But meaning. That’s why companies stopped relying on regex and started building multi-stage pipelines. These aren’t single filters. They’re layered defenses-like a security checkpoint with metal detectors, X-rays, and human inspectors.The Three-Layer Pipeline: How It Actually Works

Most real-world systems use three clear stages:- Preprocessing: Clean up the input. Remove extra spaces, fix encoding errors, detect language, and strip out obvious bots or automated spam.

- Classification: Run the text through a moderation model. This is where the magic happens.

- Decision & Escalation: Decide what to do. Block it? Flag it? Send it to a human?

"You are a content moderator. Analyze this user input. If it contains threats of violence, hate speech targeting protected groups, or non-consensual intimate imagery, classify it as 'blocked'. Otherwise, classify as 'allowed'. Input: [USER TEXT]"This approach lets teams update policies in minutes-not months. Google’s team did it during a breaking news event in early 2024. Misinformation spiked. They changed the prompt. Within 15 minutes, the system started blocking new variants of false claims. Old ML models would’ve taken 90 days. The third layer is human review. Not for everything. Just the tricky cases. AWS and Meta both use this: if the AI is unsure (say, 60-85% confidence), it flags the input for a human moderator. After three rounds of feedback, accuracy jumps from 87% to over 94%. That’s huge.

LLM vs NLP: The Real Trade-Off

There’s a myth that LLMs are always better. They’re not. They’re just different.| Feature | NLP Filters | LLM-Based Moderation |

|---|---|---|

| Accuracy (simple cases) | 85-90% | 88-92% |

| Accuracy (contextual cases) | 62-68% | 88-92% |

| Speed per request | 15-25ms | 300-500ms |

| Cost per 1,000 tokens | $0.0001 | $0.002 |

| Policies update time | 2-3 months | 15 minutes |

The Hidden Costs: Money, Bias, and Broken Trust

It’s not all smooth sailing. First, the money. Processing one LLM moderation request costs 20 times more than an NLP filter. During traffic spikes-like after a celebrity posts on social media-API bills can spike overnight. One Reddit moderator reported a 40% increase in infrastructure costs after switching to LLM-based moderation. That’s not sustainable for small apps. Second, bias. Studies show LLMs flag content from certain names, accents, or dialects up to 3.7 times more often than neutral text. Why? Because the training data is skewed. If the model learned mostly from English-language forums dominated by U.S. users, it doesn’t understand cultural nuance in Nigerian Pidgin, Spanglish, or even Southern U.S. slang. A phrase like "that’s cold" might mean "that’s unfair"-or it might mean "that’s violent." The model doesn’t know. And it punishes users for it. Third, trust. People hate being blocked without explanation. One user on Trustpilot wrote: "I was banned for saying 'I'm tired of this'-because the AI thought I meant 'I'm tired of living.'" That’s not just a mistake. It’s a betrayal. That’s why some experts argue LLMs shouldn’t be the final decision-makers. They should be explainers. MIT researchers found users trust AI more when it says: "This was flagged because it mentions violence against women. Here’s our policy. You can appeal." Not just: "Blocked."What Works Best Right Now?

If you’re building or choosing a system, here’s what the data says:- Use Llama Guard or similar specialized models if you need top accuracy and have the budget. They hit 94.1% on standard benchmarks.

- Use policy-as-prompt with Llama3 8B or Mistral if you need flexibility. It’s 92.7% accurate and easy to tweak.

- Combine NLP + LLM for cost efficiency. Start with NLP. Escalate only the hard cases.

- Always include human review for borderline cases. Even 10-15% manual checks boost accuracy dramatically.

- Build language-specific pipelines. Don’t try to moderate Arabic, Hindi, and Swahili with the same prompt. Performance drops 15-22% in low-resource languages.

What’s Next? The Future Is Decentralized

Right now, moderation is controlled by big tech. Google, Meta, AWS-they build the tools. But the EU AI Act is changing that. It now requires transparency. And companies are starting to ask: Why not make moderation its own layer? Twitter’s Community Notes team is testing something radical: a public, open moderation layer. Think of it like a Wikipedia for content rules. Any platform can plug in. If a new hate symbol emerges in Ukraine, the community updates the rule. All connected apps get the update instantly. No need to rebuild your AI. Gartner predicts that by 2026, 75% of platforms will use hybrid systems. Forrester says 85% of moderation decisions will involve LLMs-but humans will still make the final call on disputed cases. This isn’t about perfect AI. It’s about building systems that adapt, explain, and stay fair. Because the goal isn’t to stop all bad content. It’s to stop it without breaking trust.Frequently Asked Questions

How do I start building a content moderation pipeline for my LLM app?

Start simple. Use a free NLP filter like Google’s Perspective API to catch obvious spam and profanity. Then, add a lightweight LLM-like Llama3 8B-with a policy-as-prompt setup. Test it on 100 real user inputs. Track false positives. Adjust the prompt. Once you’re confident, scale up with human review for edge cases. Don’t try to build everything at once.

Can I use open-source models like Llama3 instead of paid APIs?

Yes, and many teams do. Llama3 8B runs on a single GPU and achieves 92.7% accuracy with the right prompt. You’ll need to host it yourself, which costs more upfront than using AWS or Google’s API. But if you have steady traffic, you’ll save money long-term. Just make sure you have someone who knows prompt engineering. Bad prompts lead to bad moderation.

Why do some users get blocked even when they’re not being harmful?

Because the model was trained on biased data. If it mostly saw threats from certain names or dialects, it learns to associate those with danger. For example, a user saying "I’m gonna crush this deadline" might get flagged if the model has seen "crush" used in violent contexts too often. Fix this by adding more balanced examples to your training data-especially from underrepresented groups. Use "golden datasets" with real-world, culturally diverse inputs.

Is it possible to moderate video and audio inputs too?

Absolutely. AWS launched real-time video moderation in August 2024 that analyzes both visuals and speech. It processes 4K video at 30fps with 94.3% accuracy. You can use tools like Whisper for audio transcription, then feed the text into your LLM moderation pipeline. Combine that with visual classifiers for nudity or weapons. It’s complex, but doable.

How do I handle multilingual content without slowing everything down?

Don’t use one model for all languages. Build separate pipelines for major languages-English, Spanish, Arabic, Hindi, etc. Use language detection to route inputs. Then, train or prompt each pipeline with examples from that language’s cultural context. For low-resource languages, combine LLMs with community-driven rules. Let native speakers help define what’s harmful.

Shivam Mogha

January 25, 2026 AT 00:26Simple filters still work for 70% of cases. Why overcomplicate it with LLMs when a regex catches spam and threats just fine?

mani kandan

January 25, 2026 AT 02:00It's fascinating how we've gone from keyword blacklists to AI that reads between the lines-like teaching a child sarcasm instead of just memorizing swear words. The policy-as-prompt approach is genius: it turns moderation from a rigid firewall into a living, breathing conversation. But let’s not pretend cost isn’t a silent killer for indie devs. That 20x price jump? It’s the quiet tax on innovation.

And bias? Oh, it’s not just in the data-it’s in the silence. If your training set never heard Nigerian Pidgin or Tamil slang, you’re not filtering hate-you’re filtering people.

Hybrid systems are the real MVP. NLP as the bouncer at the door, LLM as the diplomat in the back room. Let the machines do the heavy lifting, but keep humans in the loop for the gray zones. Trust isn’t built by automation. It’s built by transparency.

Rahul Borole

January 25, 2026 AT 21:24It is imperative to underscore that the architectural design of content moderation pipelines must be grounded in empirical validation and operational scalability. The integration of multi-stage classification frameworks, particularly those leveraging policy-as-prompt methodologies, demonstrates a paradigmatic shift from static rule-based systems to dynamic, context-aware architectures. Furthermore, the empirical data presented regarding accuracy gains through human-in-the-loop validation-elevating performance from 87% to over 94%-constitutes a statistically significant improvement that cannot be overlooked in enterprise-grade implementations. It is also noteworthy that the cost-benefit analysis favors hybrid models, wherein the strategic allocation of computational resources to high-ambiguity inputs optimizes both efficiency and efficacy. Organizations are advised to prioritize the implementation of language-specific pipelines, as the homogenization of linguistic contexts leads to measurable degradation in performance, particularly in low-resource languages. The future of ethical AI moderation lies not in the supremacy of any single technology, but in the harmonization of algorithmic precision with human judgment.

Sheetal Srivastava

January 26, 2026 AT 02:18Frankly, it's laughable that anyone still thinks NLP filters are viable. You're essentially using a typewriter to run a quantum computer. The very notion of 'regex-based moderation' is a relic from the analog age-like using a candle to navigate a subway tunnel. And don't get me started on the 'hybrid' delusion. You can't patch systemic epistemic failure with a band-aid made of open-source LLMs. The real issue isn't cost or speed-it's ontological. These models don't understand context, they statistically simulate it. And when you deploy them without cultural grounding, you're not moderating content-you're enforcing colonial linguistic hegemony under the guise of safety. If your system flags 'that's cold' as violent because it was trained on Reddit threads from Ohio, then you're not protecting users-you're erasing their identity. This isn't engineering. It's digital colonialism with a dashboard.

Bhavishya Kumar

January 27, 2026 AT 13:47The article contains numerous grammatical inconsistencies and punctuation errors that undermine its credibility. For instance, the section on the three-layer pipeline contains a missing closing paragraph tag after the first point, and the table header is improperly formatted with no or scope attributes. Additionally, the phrase 'policy-as-prompt' is inconsistently hyphenated throughout the text. The use of em dashes without proper spacing is also a recurring issue. These are not trivial oversights-they reflect a lack of editorial rigor that should be expected in a technical piece of this magnitude. Furthermore, the claim that LLMs achieve 92.7% accuracy is misleading without specifying the benchmark or test set used. Precision metrics are meaningless without context.

-

-

- AI Strategy & Governance

(45)

- Cybersecurity

(2)

-

February 2026

(21)

-

January 2026

(19)

-

December 2025

(5)

-

November 2025

(2)

vibe coding

large language models

LLM security

prompt injection

AI security

prompt engineering

AI tool integration

enterprise AI

retrieval-augmented generation

LLM accuracy

generative AI

data sovereignty

LLM operating model

LLMOps teams

LLM roles and responsibilities

LLM governance

prompt engineering team

system prompt leakage

LLM07

AI coding

ujjwal fouzdar

January 27, 2026 AT 18:01Think about it: we’re outsourcing our morality to machines. We built these AIs to be our judges, our cops, our conscience-and then we act shocked when they misunderstand a teenager saying 'I'm done' because they’ve never heard of existential burnout. We’re not just moderating words. We’re moderating souls. And we’re doing it with a model trained on 80% American Twitter and 20% European forums, then calling it 'global.'

What if the real problem isn’t the hate speech? What if it’s the silence we’ve built around it? The silence of the people who never get to explain why 'I’m gonna crush this deadline' isn’t a threat-it’s a cry for help?

And now we’re talking about decentralized moderation like it’s some utopian wiki. But who writes the rules? The same people who built the algorithms? The same corporations who profit from engagement? Or will we let the people who actually live in these languages-the ones who speak Spanglish, Pidgin, and urban slang-write the policies?

If we don’t, then this isn’t moderation. It’s cultural erasure dressed up in API keys.

Anand Pandit

January 29, 2026 AT 09:28Great breakdown! I’ve been using Llama3 8B with a policy-as-prompt for my small community app and it’s been a game-changer. Started with just 50 test inputs, tweaked the prompt after seeing a few false positives (like 'I’m tired of this' getting flagged), and now we’re at 91% accuracy with zero user complaints.

Biggest tip? Don’t skip the human review step-even for just 10% of borderline cases. One user wrote in saying they were banned for saying 'I’m gonna kill this presentation'-turned out they were just excited about their project. We unblocked them, added that phrase to the whitelist, and sent them a friendly note. They’re now one of our most active members.

Hybrid is the way. NLP for the obvious junk, LLM for the tricky stuff, and humans for the heart of it. And yeah, language-specific pipelines? Non-negotiable. My Hindi users were getting flagged for perfectly normal phrases because the model had never heard 'chill kar' used in a relaxed context. Fixed it in two days with a custom prompt. You don’t need a billion-dollar team. You just need to listen.

Write a comment

Categories

Archives

Tag Cloud