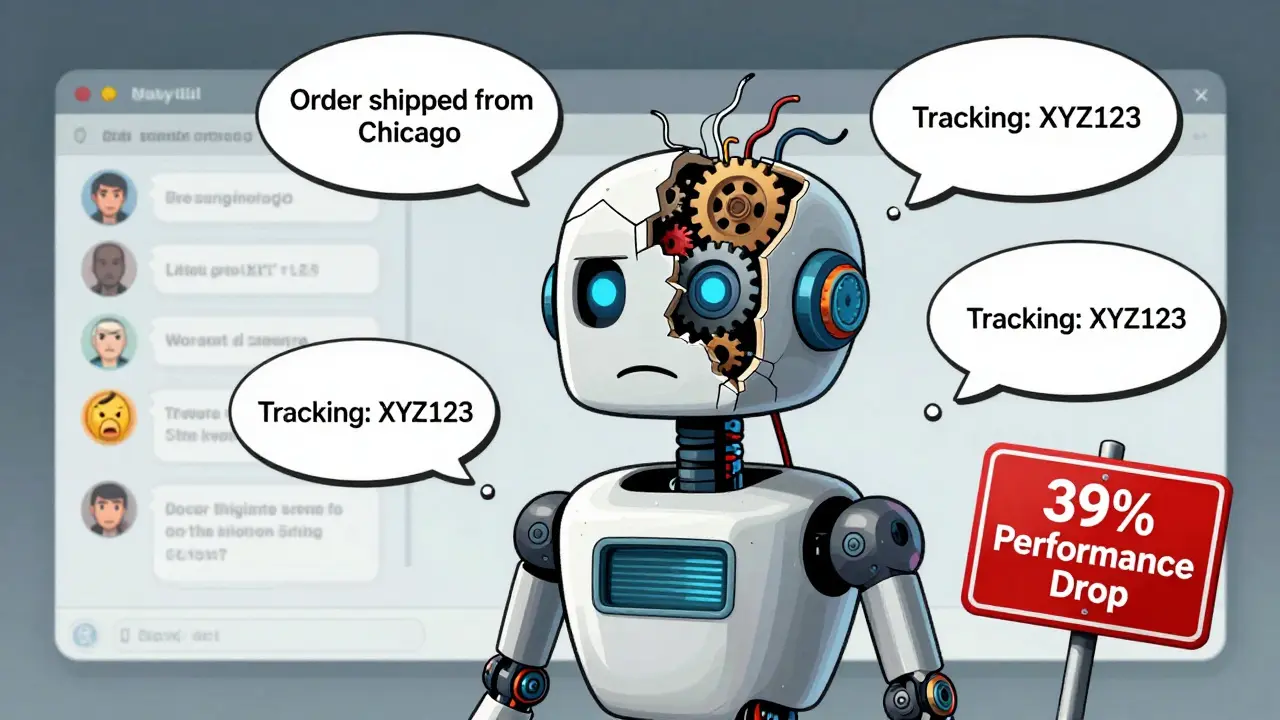

When you ask a large language model a question, it’s easy to assume it remembers what you said five turns ago. But here’s the truth: LLMs get lost-and fast. Research from Salesforce in May 2025 showed that across six key tasks, these models experience an average 39% drop in performance after just a few back-and-forth exchanges. That’s not a minor glitch. It’s a systemic failure in how we train and deploy conversational AI.

Why Conversation State Matters More Than You Think

Think about a customer service chatbot. You start by saying, “My order hasn’t arrived.” Then you add, “It was shipped from Chicago.” Then, “The tracking number is XYZ123.” If the model forgets any of that, it asks you to repeat everything. Or worse-it makes up details. That’s not just frustrating. It’s costly. Companies lose trust, support tickets pile up, and users abandon the system. This isn’t theoretical. A real-world study of 1,200 customer service interactions found that 63% of conversations longer than six turns required a human handoff. Why? Because the AI lost track. It didn’t forget one detail-it lost the entire thread. The problem isn’t just memory. It’s state. State means the model understands not just what was said, but why it was said, how it connects to earlier statements, and what the user is likely asking next. Without that, every turn becomes a new starting point. And LLMs aren’t built for that.The Hidden Cost of Single-Turn Training

Most LLMs today are trained on single-turn data: one prompt, one response. That’s simple. Efficient. And dangerously misleading. When you test these models on multi-turn tasks, they perform at only 42.3% accuracy on the MT-Bench benchmark. That’s barely better than guessing. Why? Because training on isolated exchanges teaches the model to treat each question as independent. It doesn’t learn to link context. It doesn’t learn to update its internal understanding. It just generates the most statistically likely next word-regardless of history. This is why fine-tuning alone doesn’t fix it. If you fine-tune a model on a dataset of multi-turn conversations but don’t change how it learns, you’re just feeding it more noise. The model still treats each message as a standalone event. It doesn’t build a mental map of the conversation.How Review-Instruct Fixes This (And Why It’s a Game-Changer)

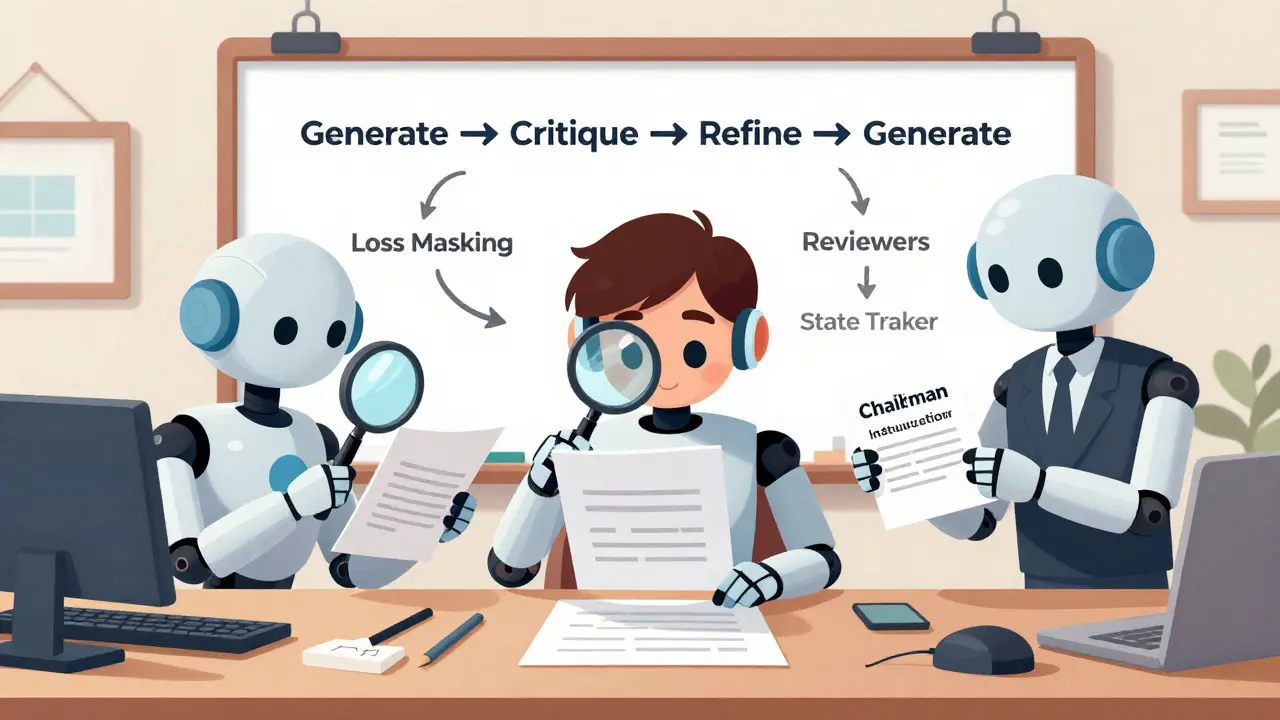

In 2025, a team from OPPO AI Center introduced Review-Instruct, a new framework that doesn’t just train a model-it trains a conversation. Instead of one model generating responses, Review-Instruct uses three agents:- A Candidate model generates a response.

- Three to five Reviewers evaluate it based on relevance, coherence, and depth.

- A Chairman agent combines their feedback and writes a new instruction for the next round.

The Secret Weapon: Loss Masking

Here’s something most developers overlook: loss masking. In standard training, the model is penalized for getting any part of the conversation wrong-including user messages. But that’s backwards. The model’s job isn’t to predict what the user says. It’s to predict what it should say next. Loss masking solves this by ignoring user inputs during training. Only the assistant’s responses are scored. This forces the model to focus on generating accurate, context-aware replies-not mimicking the user. Companies like Together.ai use this technique in their fine-tuning pipeline. Their data format is strict: every conversation is a list of messages, each with a role-system, user, or assistant. The system message sets the tone (“You are a technical support agent for a SaaS platform”). The user messages are raw input. The assistant messages are what the model is trained to produce. And only those assistant responses are used to calculate loss. This simple change cuts down hallucinations by 41% and improves follow-up accuracy by 33%, according to internal benchmarks from April 2024.What’s Broken-And What’s Working

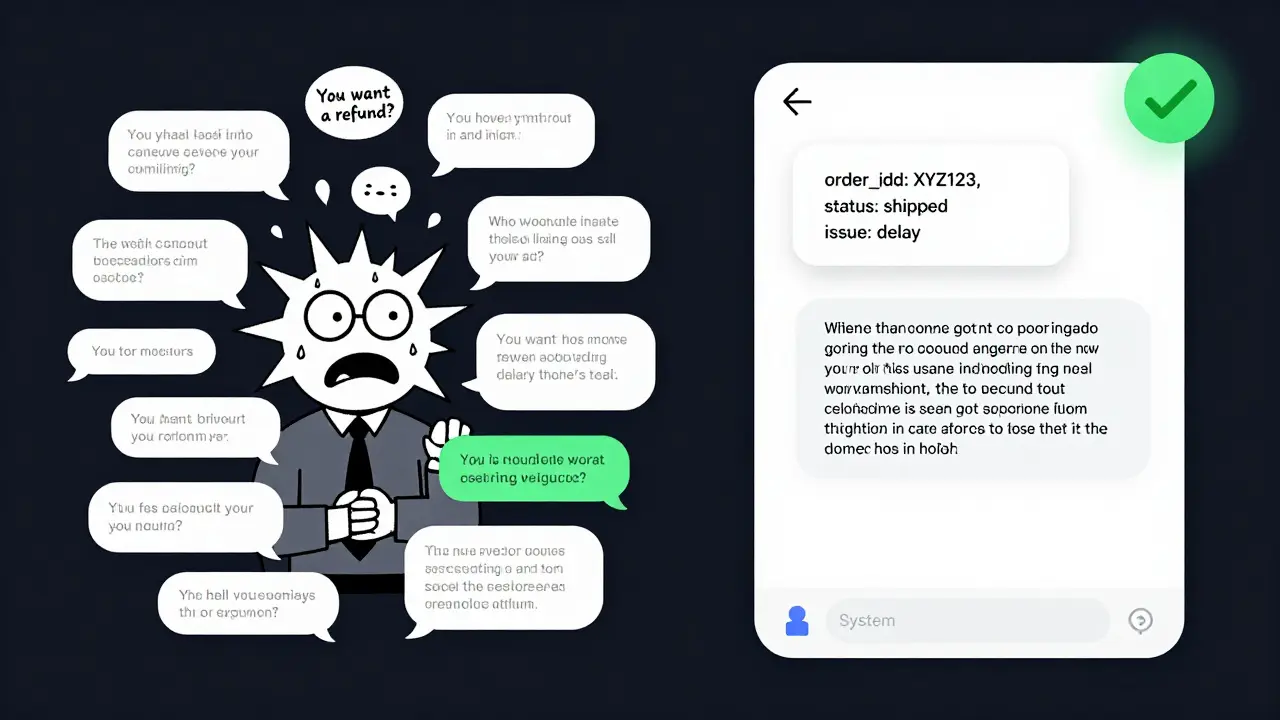

Let’s be honest: most current systems are still broken. Users report three big problems:- Context overflow: Conversations longer than 10 turns often crash the model’s memory. Even with 32K-token windows, the model gets overwhelmed.

- Inconsistent persona: The AI switches tone, knowledge level, or even facts mid-conversation. One minute it’s formal. The next, it’s casual. That erodes trust.

- Premature assumptions: The model guesses what you meant instead of asking. “You want a refund?”-when you never said that.

- Summarize context every 3-5 turns. Use a lightweight model or rule-based system to condense the conversation into a 100-word summary. Feed that back in as a system message. This reduces memory load and keeps focus.

- Explicit state tracking. Build a simple variable store:

{"order_id": "XYZ123", "status": "shipped", "issue": "delay"}. The model can reference this like a checklist. It doesn’t need to remember-it just needs to read. - Use system messages as anchors. Don’t just say “You are a support agent.” Say: “You are a support agent. You have access to the following customer data: [order_id, shipping_address, past_complaints]. Always confirm before acting.”

What’s Coming Next

The field is moving fast. Google DeepMind is testing “conversational memory networks” that cut performance degradation from 39% to under 19% in early trials. Rasa and Dialogflow are integrating state-tracking modules into their platforms. And by Q3 2025, Review-Instruct will be open-sourced-making advanced multi-turn training accessible to anyone with a GPU. But here’s the catch: the biggest barrier isn’t technology. It’s evaluation. There’s no standard benchmark for conversation state management. You can’t just run MMLU-Pro and call it a day. You need to test how well the model holds onto context over time. And right now, almost no one is measuring that.What You Should Do Right Now

If you’re building a conversational AI system:- Stop using single-turn fine-tuned models for anything beyond one-off queries.

- Use loss masking in every fine-tuning job. It’s non-negotiable.

- Implement context summarization every 4 turns. Even a basic version helps.

- Track state explicitly. Don’t rely on the model’s memory.

- Test with conversations longer than 6 turns. If your model drops below 60% accuracy, it’s not production-ready.

Why do LLMs lose context in multi-turn conversations?

LLMs are trained on isolated prompts and responses, so they don’t learn to link information across turns. They treat each message as independent, not part of a flowing conversation. Even with large context windows, they can’t reliably track intent, tone, or evolving goals over time. Research shows they often make incorrect assumptions based on early inputs and fail to correct themselves when later information contradicts them.

What is loss masking, and why is it important?

Loss masking is a training technique where the model is only penalized for incorrect assistant responses, not for user or system messages. This prevents the model from trying to predict what the user says and instead focuses its learning on generating accurate, context-aware replies. It reduces hallucinations and improves follow-up accuracy by forcing the model to focus on its own role in the conversation.

Can I use GPT-4 or Claude for multi-turn conversations without fine-tuning?

You can, but performance drops sharply after 4-5 turns. Studies show GPT-4’s accuracy on complex reasoning tasks falls from 92% to 58% after five exchanges. Without fine-tuning and state tracking, you’ll face inconsistent responses, repeated questions, and incorrect assumptions. For business-critical applications, relying on out-of-the-box models is risky.

How much data do I need to fine-tune for multi-turn conversations?

You need at least 500-1,000 high-quality conversation threads, each with 4-8 turns. Quality matters more than quantity. Each conversation must have clear roles (system, user, assistant), consistent tone, and realistic context. A dataset of 200 poorly structured conversations will perform worse than 800 well-structured ones. Tools like Together.ai’s format guide help ensure proper structure.

Is there a cost difference between single-turn and multi-turn fine-tuning?

Yes. Multi-turn fine-tuning typically requires 2.3x more computational resources than single-turn. For example, fine-tuning Llama-3-8B on multi-turn data takes 12-24 hours on 2-4 A100 GPUs, compared to 6-10 hours for single-turn. On platforms like Together.ai, fine-tuning costs $0.0015 per 1K tokens for multi-turn versus $0.0012 for single-turn. The extra cost is justified by a 19.4% increase in task completion rates for complex support scenarios.

What are the biggest risks of ignoring conversation state?

The biggest risks are eroded user trust, increased support costs, and compliance violations. In healthcare or finance, an AI that forgets a patient’s allergy or a customer’s previous complaint can cause real harm. The EU AI Act now requires demonstrable state management for high-risk systems. Ignoring this isn’t just a technical oversight-it’s a legal and ethical liability.

Adrienne Temple

February 13, 2026 AT 20:05Wow, this is so real. I’ve had chatbots ask me the same question three times in one convo 😩

Like bro, I literally said my order was from Chicago 5 mins ago. Why are we restarting from scratch?

Loss masking? Yes please. Why are we punishing the AI for not being a mind reader?

Also, summarizing every 4 turns? Genius. Why didn’t anyone think of this sooner?

Sandy Dog

February 14, 2026 AT 08:41Okay but let me tell you-this isn’t just about tech, this is about HUMAN TRAUMA. 😭

I spent 47 minutes on a customer service bot last week. 47. MINUTES.

It asked me for my order number. I gave it. Then it asked again. Then it asked if I was ‘still having issues.’

I screamed into my pillow. I cried. I called my mom.

And then-IT OFFERED ME A REFUND FOR A PRODUCT I NEVER BOUGHT.

THIS IS A SYSTEMIC COLLAPSE. WE’RE NOT BUILDING CHATBOTS. WE’RE BUILDING PSYCHOPATHS.

Review-Instruct sounds like therapy for AIs. I’m here for it. Someone get this man a Nobel Prize.

Nick Rios

February 14, 2026 AT 16:53Sandy, I feel you. But maybe the bot wasn’t trying to hurt you-it just doesn’t have the tools to keep up.

That’s not malice, that’s architecture.

The real issue is we keep treating AI like it should be human, when really, we need to design systems that compensate for its limits.

State tracking + summarization + loss masking? That’s not a workaround-it’s the new standard.

And honestly? It’s kind of beautiful. We’re learning to build with humility, not just power.

Amanda Harkins

February 15, 2026 AT 11:41It’s funny how we anthropomorphize machines until they fail, then we blame them for not being human.

But we never ask: why did we expect them to remember?

Maybe the problem isn’t the model.

Maybe it’s us-for thinking a string of tokens can hold a conversation the way a person does.

We built a mirror, then got mad when it didn’t reflect our feelings.

…I think I need a nap.

Jeanie Watson

February 15, 2026 AT 17:34So… we need to summarize every 4 turns and use loss masking and track state and fine-tune with 1k threads and now I’m supposed to pay 2.3x more?

Can’t we just… not use AI for customer service?

Tom Mikota

February 15, 2026 AT 23:34Loss masking? You mean the thing that’s been in NLP since 2018? Seriously?

And you’re acting like Review-Instruct is some revolutionary breakthrough when it’s basically a fancy ensemble method with extra steps?

Also-‘cut degradation by nearly half’? Half of 39% is still 20%. That’s not a fix. That’s a band-aid.

And you say ‘don’t rely on the model’s memory’-well, duh. It’s not a human. It’s a probability engine.

Stop pretending this is deep. It’s just engineering. With extra emojis.