Tag: AI safety

Content Moderation Pipelines for User-Generated Inputs to LLMs: How to Block Harmful Content Without Breaking Trust

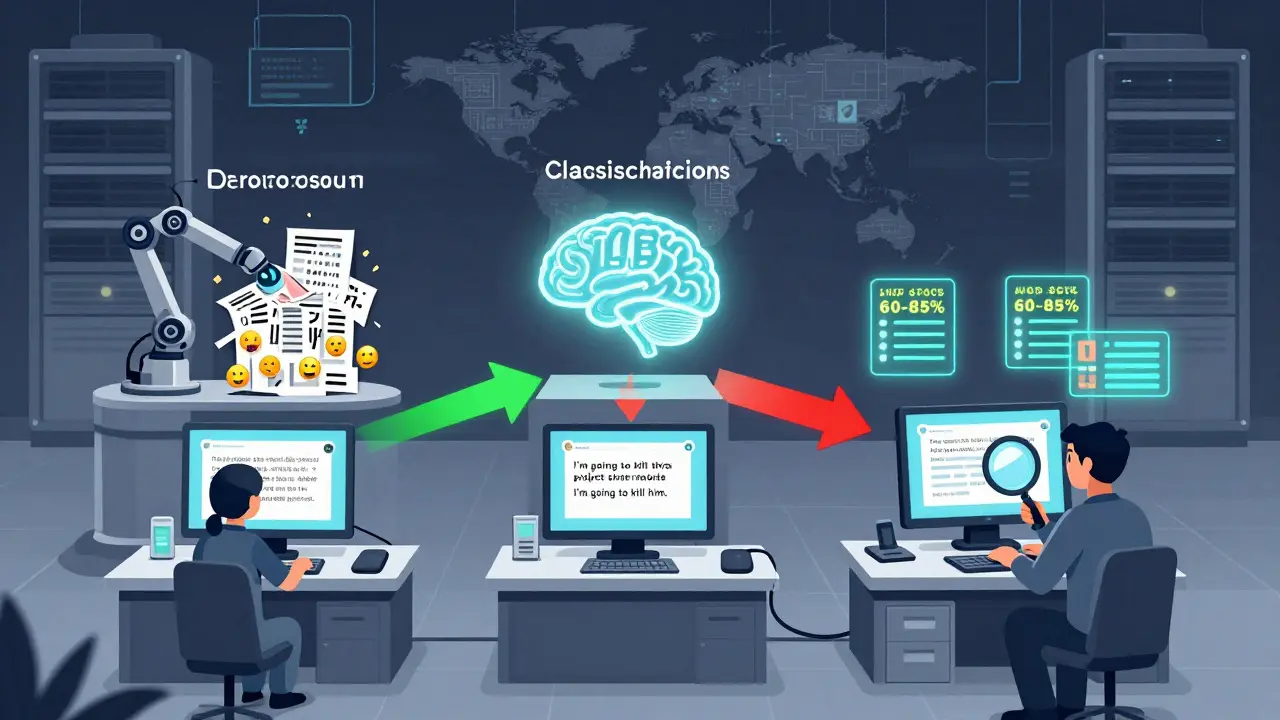

Learn how modern AI systems use layered pipelines, policy-as-prompt techniques, and hybrid NLP+LLM strategies to filter harmful user inputs before they reach an LLM, while balancing safety, cost, and fairness.
