Most teams think fine-tuning large language models is about throwing more data at the model and waiting for results. That’s not how it works. Supervised fine-tuning (SFT) is a precise, data-driven process that turns a general-purpose LLM into a reliable assistant for your specific use case - but only if you do it right. Get the data wrong, and your model forgets basic facts. Set the learning rate too high, and it loses all its pre-trained knowledge. Skip validation, and you’ll deploy a model that sounds smart but gives dangerous answers. This isn’t theory. It’s what happens in real projects - and it’s avoidable.

Why SFT Is the Only Practical Way to Customize LLMs

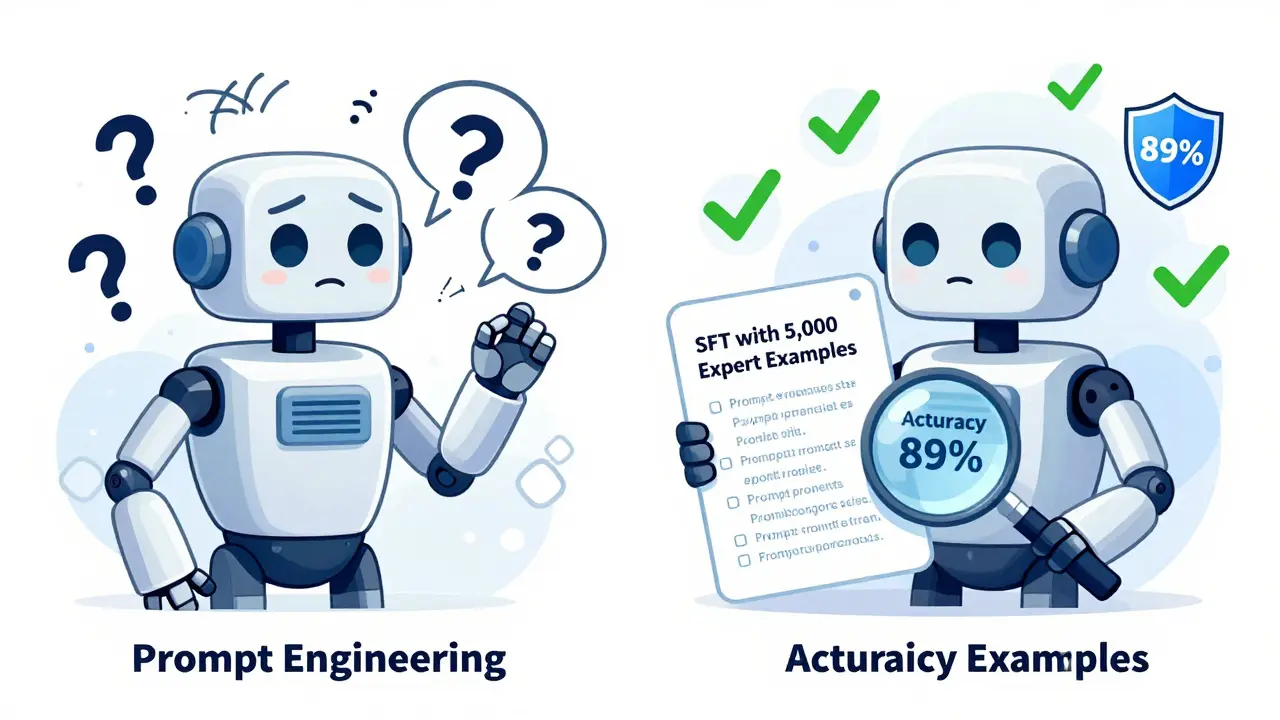

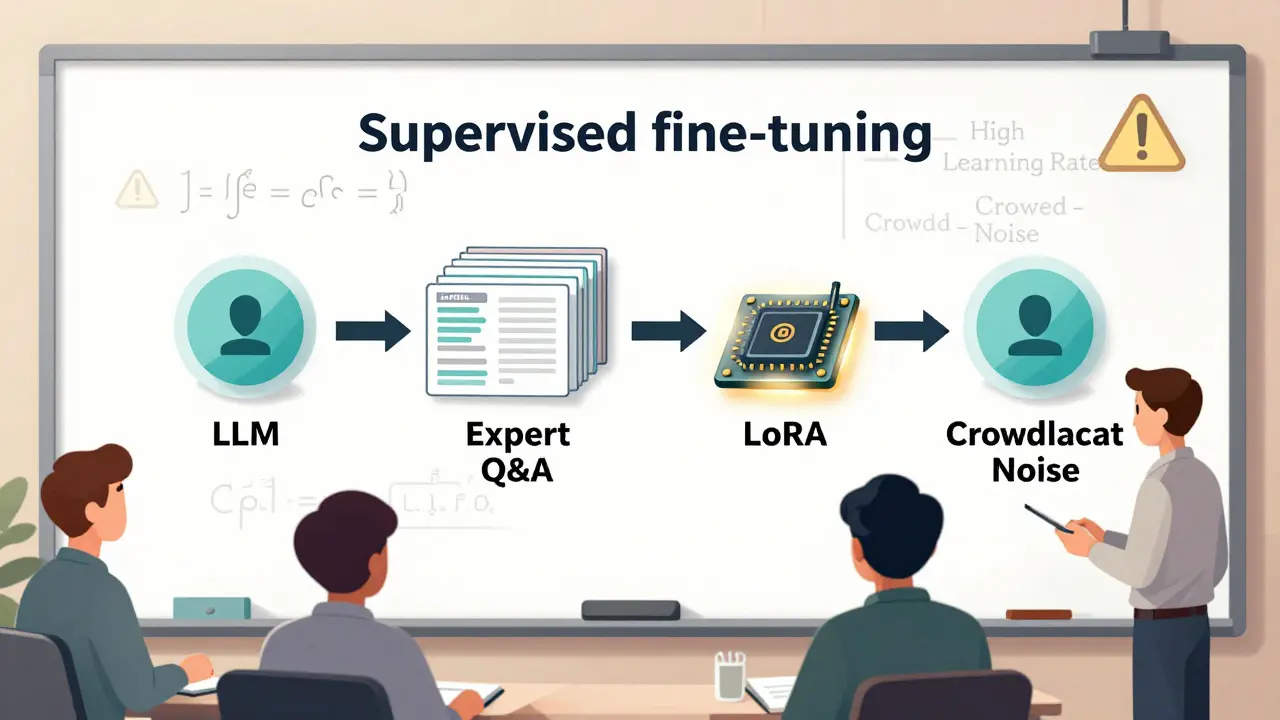

Pre-trained models like LLaMA-3 or Mistral are powerful, but they’re not tailored. They answer questions like a well-read stranger - helpful sometimes, wildly off-base others. You don’t want a customer service bot that guesses answers. You want one that follows your rules, uses your terminology, and sticks to your policies. That’s where SFT comes in.

SFT is the second step in the standard LLM training pipeline: pre-train on massive text, then fine-tune with labeled examples. Unlike prompt engineering - which tries to guide the model with clever wording - SFT actually changes the model’s weights. It teaches the model what good answers look like for your domain. A study from Meta AI found SFT improved accuracy on domain-specific tasks by 25-40% over prompt engineering alone. And unlike full fine-tuning, which updates every weight in the model, parameter-efficient SFT methods like LoRA train less than 1% of the parameters. That means you can run it on a single GPU instead of a cluster.

The key insight? SFT isn’t about making the model smarter. It’s about making it consistent. It’s about turning a generalist into a specialist - without needing a PhD in machine learning.

What You Need Before You Start

You can’t fine-tune without data. And not just any data. You need high-quality, labeled examples: input prompts and the exact responses you want the model to generate. This is where most teams fail.

Start with at least 500 examples. Google’s Vertex AI team says this is the bare minimum. But if you’re doing anything complex - legal contracts, medical advice, technical support - aim for 5,000 to 10,000. Stanford CRFM found that models trained on fewer than 10,000 examples often plateau early.

Even so, quality beats quantity. One team at a healthcare startup tuned a model on 2,400 physician-verified Q&A pairs. It jumped from 42% to 89% accuracy on medical questions. Another team used 10,000 crowd-sourced examples. It scored worse than the 2,400 expert ones. A user on Stack Overflow reported that 500 perfectly formatted legal contract examples outperformed 10,000 messy ones. Why? Because inconsistent formatting confuses the model. If one prompt says "Explain this clause," another says "What does this mean?" and a third says "Break down the legal jargon," the model doesn’t learn the task - it learns noise.

You also need a base model. If you want an open model, LLaMA-3 8B is a solid choice. It runs on a single 24GB GPU with 4-bit quantization. For cloud users, Google’s text-bison@002 or Anthropic’s Claude 3 Haiku work well. Avoid trying to fine-tune models larger than 13B unless you have multiple high-end GPUs. Most teams don’t need them.

How to Structure Your Training Data

Your data format matters more than you think. A common format is a JSONL file - one example per line. Each line has two fields: `instruction` and `output`.

Example:

{"instruction": "What are the symptoms of type 2 diabetes?", "output": "Common symptoms include increased thirst, frequent urination, unexplained weight loss, fatigue, and blurred vision. Consult a doctor for diagnosis."}
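Because every example must be one self-contained JSON object per line, a small loader that rejects malformed lines catches many formatting problems before training starts. A minimal standard-library sketch, assuming the `instruction`/`output` field names above; `load_sft_jsonl` is a hypothetical helper, and `MIN_EXAMPLES` reuses the 500-example floor quoted earlier:

```python
import json

REQUIRED_FIELDS = ("instruction", "output")
MIN_EXAMPLES = 500  # the bare-minimum dataset size cited in this article


def load_sft_jsonl(lines):
    """Parse JSONL lines; keep records whose required fields are non-empty strings."""
    records, errors = [], []
    for lineno, line in enumerate(lines, start=1):
        line = line.strip()
        if not line:
            continue  # tolerate blank lines
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            errors.append(f"line {lineno}: invalid JSON ({exc.msg})")
            continue
        if not isinstance(record, dict):
            errors.append(f"line {lineno}: expected a JSON object")
            continue
        missing = [f for f in REQUIRED_FIELDS
                   if not isinstance(record.get(f), str) or not record[f].strip()]
        if missing:
            errors.append(f"line {lineno}: missing or empty {missing}")
        else:
            records.append(record)
    return records, errors


sample = [
    json.dumps({  # json.dumps without indent emits a single line
        "instruction": "What are the symptoms of type 2 diabetes?",
        "output": "Common symptoms include increased thirst, frequent urination, "
                  "unexplained weight loss, fatigue, and blurred vision. "
                  "Consult a doctor for diagnosis.",
    }),
    '{"instruction": "Explain this clause"}',  # no output: rejected
    "not json at all",                          # malformed: rejected
]
records, errors = load_sft_jsonl(sample)
print(f"{len(records)} valid, {len(errors)} rejected")
if len(records) < MIN_EXAMPLES:
    print(f"warning: only {len(records)} examples; aim for at least {MIN_EXAMPLES}")
```

For a real dataset you would pass an open file object instead of a list, since iterating a file yields its lines.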

Don’t mix formats. If you’re doing code generation, use consistent prompt styles: "Write a Python function to...", not "Make a script that..." or "How do I...". A YouTube tutorial by ML engineer Alvaro Cintas showed that inconsistent prompts reduced accuracy by 18%. That’s a steep price for wording alone.
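One cheap way to enforce a single prompt style is to check every instruction against the house template before training. A minimal sketch; `CANONICAL_PREFIX` and the sample prompts are hypothetical, built from the phrasings mentioned above:

```python
from collections import Counter

# Hypothetical house style for a code-generation dataset.
CANONICAL_PREFIX = "Write a Python function to"


def find_off_style(instructions, prefix=CANONICAL_PREFIX):
    """Return (index, instruction) pairs that don't start with the house template."""
    return [(i, s) for i, s in enumerate(instructions)
            if not s.strip().startswith(prefix)]


def opening_words(instructions, n=3):
    """Count the first n words of each prompt to surface competing phrasings."""
    return Counter(" ".join(s.split()[:n]) for s in instructions)


prompts = [
    "Write a Python function to reverse a string.",
    "Make a script that sorts a list of numbers.",
    "How do I parse a JSON file?",
]
for i, s in find_off_style(prompts):
    print(f"example {i} breaks the template: {s!r}")
print(opening_words(prompts).most_common())
```

The opening-word count is useful on datasets you didn't write yourself: a long tail of different openings is a sign the prompts need rewriting to one style.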

Split your data properly: 70-80% for training, 10-15% for validation, 10-15% for testing. Without a held-out validation set, you have no way to catch overfitting before deployment. One team reported 35%+ overfitting because they used the same data for training and testing. Their model performed great on their test set - but failed completely in production.
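A reproducible split takes a few lines of standard-library Python. The sketch below assumes an 80/10/10 ratio (inside the ranges above) and a fixed seed; `split_dataset` is a hypothetical helper. Run deduplication first so identical examples can't land in both the training and test sets.

```python
import random


def split_dataset(examples, train=0.8, val=0.1, seed=42):
    """Shuffle once with a fixed seed, then cut into train/validation/test."""
    if not (0 < train < 1 and 0 <= val < 1 and train + val < 1):
        raise ValueError("need 0 < train < 1, 0 <= val < 1, and train + val < 1")
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split reproducible
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])  # the remainder becomes the test set


train_set, val_set, test_set = split_dataset(range(1000))
print(len(train_set), len(val_set), len(test_set))
```

Using a private `random.Random(seed)` instead of the global generator keeps the split stable even if other code also draws random numbers.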

And don’t forget to remove duplicates. A single example repeated 50 times will make the model over-index on that pattern. Use a simple script to deduplicate. It’s fast and saves weeks of debugging later.
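A simple deduplication script can hash a normalized copy of each example, so copies that differ only in case or whitespace are caught too. A minimal sketch; `deduplicate` is a hypothetical helper, and lowercasing is an assumption you may want to drop for code or other case-sensitive data:

```python
import hashlib
import json


def _fingerprint(record):
    """Hash a normalized copy: lowercase and collapse runs of whitespace."""
    norm = {k: " ".join(str(record[k]).lower().split())
            for k in ("instruction", "output")}
    return hashlib.sha256(json.dumps(norm, sort_keys=True).encode("utf-8")).hexdigest()


def deduplicate(records):
    """Keep the first occurrence of each normalized example, preserving order."""
    seen, unique = set(), []
    for rec in records:
        fp = _fingerprint(rec)
        if fp not in seen:
            seen.add(fp)
            unique.append(rec)
    return unique


examples = [{"instruction": "Explain this clause", "output": "It limits liability."}] * 50
examples.append({"instruction": "explain  this clause", "output": "It limits liability. "})
examples.append({"instruction": "What does this mean?", "output": "It sets a deadline."})
print(len(examples), "->", len(deduplicate(examples)))
```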

Choosing the Right Tools and Setup

You don’t need to build everything from scratch. Hugging Face’s Transformers library with the TRL (Transformer Reinforcement Learning) package is the industry standard. Use the `SFTTrainer` class. It handles batching, tokenization, and gradient updates automatically.
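`SFTTrainer` tokenizes for you, but you still decide what text each example becomes. TRL lets you pass a formatting function (the `formatting_func` argument), though its exact contract has changed across TRL versions, so check the documentation for the version you install. The template below is a hypothetical Alpaca-style layout; what matters is that training and inference use the identical one:

```python
# Hypothetical Alpaca-style template. Any layout works, as long as every
# training example and every inference prompt uses the same one.
TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n{output}"


def format_example(example: dict) -> str:
    """Render one instruction/output record as a single training string."""
    return TEMPLATE.format(
        instruction=example["instruction"].strip(),
        output=example["output"].strip(),
    )


def format_prompt(instruction: str) -> str:
    """Render an inference prompt, leaving the response for the model to write."""
    return TEMPLATE.format(instruction=instruction.strip(), output="")


record = {"instruction": "What are the symptoms of type 2 diabetes?",
          "output": "Common symptoms include increased thirst, frequent urination, "
                    "unexplained weight loss, fatigue, and blurred vision. "
                    "Consult a doctor for diagnosis."}
print(format_example(record))
```

Because `format_prompt` is the training string with the answer removed, the model sees exactly the prefix it was trained to continue.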

For memory efficiency, use 4-bit quantization with bitsandbytes. A 7B model that needs about 14GB of VRAM just for its 16-bit weights drops to roughly 6GB in 4-bit. That means you can run it on an NVIDIA A10 or even a high-end consumer GPU like the RTX 4090.
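The memory figures follow from simple arithmetic on the weights alone: parameters times bits per parameter. A quick sketch, assuming 1 GB = 10^9 bytes and ignoring activations, LoRA adapters, optimizer state, and quantization constants, which plausibly account for the gap between 3.5GB of 4-bit weights and the roughly 6GB quoted above:

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """GB needed just to store the weights (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


for bits in (16, 8, 4):
    print(f"7B model, {bits:>2}-bit weights: {weight_memory_gb(7e9, bits):.1f} GB")
```

For a 7B model this gives 14.0 GB at 16 bits, 7.0 GB at 8 bits, and 3.5 GB at 4 bits.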

Here’s what your setup should look like:

- Model: LLaMA-3 8B (4-bit quantized)

- Tokenizer: Use the model’s default tokenizer. Set `padding_side='left'` for batched generation with decoder-only models.

- Training library: Hugging Face TRL with `SFTTrainer`

- Parameter efficiency: LoRA (rank 8 or 16)

- Batch size: 4-8 (if using 8GB VRAM)

- Gradient accumulation: 4-8 steps (to simulate larger batch sizes)

- Learning rate: 2e-5 to 5e-5

- Epochs: 1-3

- Sequence length: 2048 tokens

- Packing: Enabled (combines short examples to fill context windows)
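Two of these settings determine how much work each optimizer step does. The effective batch size is the per-device batch times the gradient accumulation steps (times the number of GPUs), and packing reduces how many sequences there are to process. A rough sketch: `greedy_pack` is a simplified illustration that fills each 2048-token window with whole examples in order; TRL's own packing differs in the details, and the token lengths are made up.

```python
import math


def optimizer_steps(n_sequences, per_device_batch, grad_accum, epochs, n_gpus=1):
    """Return (effective batch size, total optimizer steps)."""
    effective = per_device_batch * grad_accum * n_gpus
    return effective, math.ceil(n_sequences / effective) * epochs


def greedy_pack(lengths, max_len=2048):
    """Group example indices into windows of at most max_len tokens.

    Simplified: examples are never split, and an example longer than
    max_len gets a window of its own (it would be truncated in training).
    """
    windows, current, used = [], [], 0
    for idx, n in enumerate(lengths):
        if current and used + n > max_len:
            windows.append(current)
            current, used = [], 0
        current.append(idx)
        used += n
    if current:
        windows.append(current)
    return windows


lengths = [600, 700, 500, 1800, 200, 300]  # made-up token counts
windows = greedy_pack(lengths)
print(windows)  # [[0, 1, 2], [3, 4], [5]]: six examples in three windows
print(optimizer_steps(1000, per_device_batch=4, grad_accum=8, epochs=3))  # (32, 96)
```

With packing enabled, `n_sequences` should be the number of packed windows, not the raw example count.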

What to Measure - And What to Ignore

Don’t just look at loss. Loss tells you if the model is learning, but not if it’s useful. Use three metrics:

- Perplexity: Measures how surprised the model is by the output. For conversational tasks, aim for below 15. Higher means the model is guessing.

- Task-specific accuracy: If you’re answering medical questions, test on a held-out set of 100-200 real questions. Count correct answers.

- Human evaluation: Have 3-5 domain experts rate 50 outputs on clarity, relevance, safety, and factual accuracy. Use a 1-5 scale. This is non-negotiable.
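Perplexity is the exponential of the average negative log-likelihood the model assigns to the reference tokens, so it can be computed from per-token log-probabilities however you obtain them. A standard-library sketch of all three measurements; exact-match accuracy is a stand-in for whatever counts as "correct" in your task, and the inputs are illustrative:

```python
import math
from statistics import mean


def perplexity(token_logprobs):
    """exp(mean negative log-likelihood) over the reference tokens (natural log)."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def accuracy(predictions, references):
    """Fraction of exact matches after trimming whitespace and ignoring case."""
    if len(predictions) != len(references) or not references:
        raise ValueError("need equal-length, non-empty lists")
    hits = sum(p.strip().lower() == r.strip().lower()
               for p, r in zip(predictions, references))
    return hits / len(references)


def summarize_ratings(ratings):
    """Average each 1-5 criterion across all rated outputs."""
    if not ratings:
        raise ValueError("need at least one rating")
    return {c: mean(r[c] for r in ratings) for c in ratings[0]}


# A model that gives every reference token probability 1/10 has perplexity 10.
print(perplexity([math.log(0.1)] * 5))
print(accuracy(["Paris", "blue"], ["paris", "green"]))
print(summarize_ratings([
    {"clarity": 4, "relevance": 5, "safety": 5, "factual_accuracy": 4},
    {"clarity": 2, "relevance": 4, "safety": 5, "factual_accuracy": 3},
]))
```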

Common Mistakes and How to Avoid Them

Here’s what goes wrong - and how to fix it:

- Mistake: Using low-quality or crowd-sourced data. Fix: Pay experts. Even if it costs $1-2 per example, it’s cheaper than fixing a broken model.

- Mistake: Training for more than 3 epochs. Fix: Stop at 2-3. More epochs = more forgetting.

- Mistake: Not validating on real-world examples. Fix: Test on data that looks like what users will actually ask.

- Mistake: Ignoring prompt templating. Fix: Use one format. Always. No exceptions.

- Mistake: Assuming more data = better results. Fix: Google’s 2023 paper showed models trained on 1,500 expert examples beat those trained on 50,000 crowd-sourced ones.
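The "stop at 2-3 epochs" rule pairs naturally with watching validation loss. A minimal sketch that picks which epoch's checkpoint to keep, capped at three epochs; `pick_checkpoint` and its patience setting are illustrative, and Hugging Face Transformers also provides an `EarlyStoppingCallback` for stopping during training:

```python
def pick_checkpoint(val_losses, max_epochs=3, patience=1):
    """Return the 1-based epoch with the lowest validation loss.

    Only the first `max_epochs` epochs are considered, and the scan stops once
    the loss has failed to improve for `patience` consecutive epochs.
    """
    best_epoch, best_loss, stale = None, float("inf"), 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if loss < best_loss:
            best_epoch, best_loss, stale = epoch, loss, 0
        else:
            stale += 1
            if stale >= patience:
                break
    return best_epoch


# Validation loss rises in epoch 3, so the epoch-2 checkpoint is kept;
# epoch 4 is beyond the 3-epoch cap and never considered.
print(pick_checkpoint([1.20, 1.05, 1.10, 0.90]))
```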

When SFT Isn’t Enough

SFT is powerful - but it has limits. It’s great for instruction-following, factual recall, and simple reasoning. But it struggles with multi-step logic, ethical trade-offs, or tasks where the "right" answer isn’t obvious. DeepMind’s Sparrow model improved 72% on factual QA after SFT - but only 38% on multi-step reasoning. Why? Because you can’t easily label "the right way" to solve a complex problem. You need reinforcement learning for that.

That’s why most top models use SFT + RLHF. OpenAI’s InstructGPT got 85% human preference alignment with both steps. With SFT alone? Only 68%. If you’re building a high-stakes system - legal, medical, financial - plan for RLHF after SFT.

Also, if your task changes often, SFT isn’t scalable. You can’t retrain the model every time your policy updates. For dynamic environments, consider retrieval-augmented generation (RAG) instead.

What’s Next for SFT

The field is moving fast. Google’s Vertex AI now auto-scores data quality and blocks bad examples during training. Hugging Face’s TRL v0.8 uses dynamic difficulty adjustment - it starts with easy examples, then ramps up complexity. That improved accuracy by 12-18% on hard tasks. By 2026, Gartner predicts 80% of SFT will use AI-assisted data labeling. That means humans will review fewer examples - and AI will help write them. But experts warn: human oversight is still critical.

The EU AI Act now requires proof of data provenance for high-risk applications. If you’re in healthcare or finance, you’ll need audit trails for every example used in training.

The biggest bottleneck isn’t compute. It’s annotation. Stanford researchers estimate that expert labeling costs may become the main barrier to SFT adoption after 2027. If you’re starting now, build your data pipeline right. Automate where you can. But never skip the human review.

Final Advice: Start Small, Measure Everything

Don’t try to fine-tune for your entire business on day one. Pick one task. One use case. One team. Maybe customer support for returns. Or summarizing meeting notes. Train on 1,000 examples. Test it. Measure accuracy. Ask real users for feedback. Then scale. The best teams don’t have the most data. They have the most discipline. They know that SFT isn’t magic. It’s craftsmanship. And like any craft, it rewards patience, precision, and attention to detail.

What’s the minimum amount of data needed for supervised fine-tuning?

You need at least 500 high-quality labeled examples to start. But for most real-world tasks - especially those requiring domain knowledge - you’ll want 5,000 to 10,000. Google’s Vertex AI team says 500 is the bare minimum, while Stanford CRFM found optimal results typically require 10,000-50,000 examples. Quality matters more than quantity: one team achieved 89% accuracy on medical questions using just 2,400 expert-verified examples, while another with 50,000 crowd-sourced examples performed worse.

Can I fine-tune a large model like LLaMA-3 on a single GPU?

Yes, if you use 4-bit quantization and parameter-efficient methods like LoRA. A 7B-8B model like LLaMA-3 can run on a single 24GB GPU (like an RTX 4090 or A10) with 4-bit quantization, reducing memory use from 14GB to about 6GB. LoRA only updates 0.1-1% of parameters, so you don’t need to retrain the whole model. Training will be slower than on a multi-GPU cluster, but it’s doable without one.
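The "0.1-1% of parameters" figure is easy to sanity-check. LoRA freezes a weight matrix of shape (out, in) and trains two small matrices of shapes (rank, in) and (out, rank), adding rank × (in + out) parameters. The sketch below assumes LLaMA-3-8B-style attention shapes (hidden size 4096, 1024-wide key/value projections, 32 layers, about 8B total parameters) with LoRA on the four attention projections only; check your model's config for the real values.

```python
# Assumed LLaMA-3-8B-style dimensions; check your model's config for real values.
HIDDEN = 4096        # hidden size
KV_DIM = 1024        # key/value projection width (grouped-query attention)
NUM_LAYERS = 32
BASE_PARAMS = 8.0e9  # approximate total parameters in the base model

# (in_features, out_features) for the attention projections LoRA is applied to.
TARGETS = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, KV_DIM),
    "v_proj": (HIDDEN, KV_DIM),
    "o_proj": (HIDDEN, HIDDEN),
}


def lora_params(in_features: int, out_features: int, rank: int) -> int:
    """Trainable params LoRA adds to one frozen matrix: A is rank x in, B is out x rank."""
    return rank * (in_features + out_features)


def lora_budget(rank: int) -> tuple[int, float]:
    """Return (trainable parameter count, fraction of the base model)."""
    per_layer = sum(lora_params(i, o, rank) for i, o in TARGETS.values())
    trainable = per_layer * NUM_LAYERS
    return trainable, trainable / BASE_PARAMS


for rank in (8, 16):
    count, fraction = lora_budget(rank)
    print(f"rank {rank:>2}: {count:,} trainable parameters ({fraction:.3%})")
```

Under these assumptions, rank 8 trains about 6.8M parameters (roughly 0.09% of the model) and rank 16 about 13.6M (roughly 0.17%); adding the MLP projections as LoRA targets pushes the fraction higher.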

Why does my model forget basic facts after fine-tuning?

This is called catastrophic forgetting, and it usually happens when the learning rate is too high or you train for too many epochs (more than 3). The model overwrites its pre-trained knowledge while trying to learn your examples. Use LoRA to limit changes to a small subset of weights, stay within the 2e-5 to 5e-5 learning-rate range (dropping to 3e-5 or below if you see forgetting), and always test the model on general knowledge questions like "Who wrote Romeo and Juliet?" after training.
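A general-knowledge check is easy to automate. The sketch below runs a short list of questions through `generate`, a hypothetical callable wrapping whatever inference code you use, and reports answers that no longer contain an accepted keyword. Run it on the base model first so you only track regressions; the questions, keywords, and `stub_model` are illustrative:

```python
from typing import Callable

# Illustrative sanity questions with loosely matched, lowercase keywords.
SANITY_CHECKS = [
    ("Who wrote Romeo and Juliet?", ("shakespeare",)),
    ("What is the capital of France?", ("paris",)),
    ("How many days are in a week?", ("seven", "7")),
]


def forgetting_report(generate: Callable[[str], str], checks=SANITY_CHECKS):
    """Return (question, answer) pairs whose answer lacks every accepted keyword."""
    failures = []
    for question, accepted in checks:
        answer = generate(question)
        if not any(keyword in answer.lower() for keyword in accepted):
            failures.append((question, answer))
    return failures


def stub_model(question: str) -> str:
    """Stand-in for a fine-tuned model that has forgotten some basics."""
    canned = {"Who wrote Romeo and Juliet?": "William Shakespeare."}
    return canned.get(question, "Please contact support for returns.")


for question, answer in forgetting_report(stub_model):
    print(f"REGRESSION: {question!r} -> {answer!r}")
```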

Should I use cloud services or open-source tools for SFT?

It depends. Cloud platforms like Google Vertex AI, AWS Bedrock, or Azure ML are easier - they handle infrastructure and offer managed SFT pipelines. But they cost $15-30 per hour. Open-source tools like Hugging Face TRL are free but require ML expertise. If you have a small team and need speed, use the cloud. If you have a dedicated ML engineer and want control, go open-source. Many teams start with cloud to test the idea, then move to open-source for scaling.

Is supervised fine-tuning enough for compliance-heavy industries like healthcare or finance?

SFT is necessary but not sufficient. In healthcare, you need to prove every training example was reviewed by a licensed professional. In finance, you must track how the model generates advice to avoid hallucinations. The EU AI Act and other regulations require documented data provenance and human oversight. Many teams add guardrails, rule-based filters, or human-in-the-loop reviews after SFT. SFT gets you to 80% - the rest needs policy and process.
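An audit trail can start as one log entry per training example that ties a content hash to its source and reviewer. A minimal sketch; the field names and values are hypothetical, not a schema prescribed by the EU AI Act or any other regulation:

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ProvenanceRecord:
    example_sha256: str  # hash of the exact example that was trained on
    source: str          # hypothetical field: where the example came from
    reviewer_id: str     # hypothetical field: who reviewed or approved it
    reviewed: bool       # whether a qualified human signed off
    recorded_at: str     # UTC timestamp, ISO 8601


def example_hash(example: dict) -> str:
    """SHA-256 of a canonical JSON encoding, independent of key order."""
    canonical = json.dumps(example, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def make_record(example: dict, source: str, reviewer_id: str, reviewed: bool):
    return ProvenanceRecord(
        example_sha256=example_hash(example),
        source=source,
        reviewer_id=reviewer_id,
        reviewed=reviewed,
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )


example = {"instruction": "What are the symptoms of type 2 diabetes?",
           "output": "Common symptoms include increased thirst, frequent urination, "
                     "unexplained weight loss, fatigue, and blurred vision. "
                     "Consult a doctor for diagnosis."}
audit_log = [
    make_record(example, source="clinic-faq", reviewer_id="rn-017", reviewed=True),
    make_record({"instruction": "Explain this clause", "output": "It limits liability."},
                source="crowd-batch-3", reviewer_id="", reviewed=False),
]
for rec in audit_log:
    print(json.dumps(asdict(rec)))  # one JSON line per example, like the training data

# Anything without human sign-off is held back from training.
held_back = [rec for rec in audit_log if not rec.reviewed]
```

Hashing the canonical JSON means the log entry proves which exact text was trained on: any later edit to the example changes its hash.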

How do I know if my fine-tuned model is ready for production?

Test it on real user inputs - not just your validation set. Run a small pilot with 50-100 real queries from your target users. Measure task accuracy, response safety, and user satisfaction. If your model scores above 85% on task accuracy, has low perplexity (<15), and passes human review for coherence and reliability, it’s ready. Never skip human evaluation. One team at JPMorgan Chase had 92% accuracy on metrics but 28% hallucination rate - a dangerous gap only humans could catch.
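The readiness criteria above can be written down as an explicit gate so no single metric is read in isolation. A sketch: the accuracy and perplexity thresholds come from this answer, while `max_hallucination` is a hypothetical extra threshold you would set for your own risk tolerance. The pilot numbers loosely echo the 92%-accuracy, 28%-hallucination example (the perplexity value is invented):

```python
from dataclasses import dataclass


@dataclass
class PilotResults:
    task_accuracy: float       # fraction correct on real user queries (0-1)
    perplexity: float
    human_review_passed: bool
    hallucination_rate: float  # fraction of answers with fabricated content (0-1)


def readiness_problems(r: PilotResults, min_accuracy=0.85,
                       max_perplexity=15.0, max_hallucination=0.05):
    """Return the reasons the model is not ready; an empty list means every check passed."""
    problems = []
    if r.task_accuracy <= min_accuracy:
        problems.append(f"task accuracy {r.task_accuracy:.0%} is not above {min_accuracy:.0%}")
    if r.perplexity >= max_perplexity:
        problems.append(f"perplexity {r.perplexity:.1f} is not below {max_perplexity:.0f}")
    if not r.human_review_passed:
        problems.append("human review did not pass")
    if r.hallucination_rate > max_hallucination:
        problems.append(f"hallucination rate {r.hallucination_rate:.0%} "
                        f"exceeds {max_hallucination:.0%}")
    return problems


pilot = PilotResults(task_accuracy=0.92, perplexity=11.0,
                     human_review_passed=False, hallucination_rate=0.28)
for problem in readiness_problems(pilot) or ["ready"]:
    print(problem)
```

Accuracy and perplexity both pass here, yet the gate still fails on the human-review and hallucination checks, which is the gap the anecdote describes.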

Michael Jones

January 13, 2026 AT 13:40

Just spent 3 weeks trying to get SFT to work on a customer support bot and holy hell it's not magic

We threw 20k crowd-sourced examples at it and the thing started inventing fake policies like 'free pizza on Tuesdays' for returns

Went back to 3k expert-labeled ones and boom - suddenly it stopped lying

Quality over quantity isn't a slogan it's survival

allison berroteran

January 13, 2026 AT 23:42

I love how this post breaks down the real pain points instead of just listing tools

It's funny how we all assume more data means better results until we see our model start hallucinating that the capital of France is Berlin

I spent months trying to automate labeling until I realized the only thing worse than bad data is *confident* bad data

Our team started paying freelance nurses to annotate medical Q&A at $1.50 per example

Turns out their intuition for what a patient actually asks is way better than any AI labeling tool

And yes, we still manually review every 10th example even with the new Hugging Face auto-score feature

It's not glamorous but it's the difference between a tool that helps and a tool that gets you sued

Gabby Love

January 15, 2026 AT 12:23

Learning rate above 3e-5 causes catastrophic forgetting - this is critical and often overlooked.

Also, always test on pre-training facts like 'Who wrote Hamlet?' after fine-tuning.

Simple check, huge impact.

Jen Kay

January 17, 2026 AT 04:00

Somehow I'm not surprised that Walmart Labs succeeded by filtering out 75% of their data

Most teams treat data like a buffet - more is better

But real engineering is knowing what to throw away

Also, LoRA at rank 8 is the unsung hero of modern SFT

It's like giving your model a tiny brain transplant instead of a full lobotomy

And yes, I'm still side-eyeing anyone who tries to fine-tune a 70B model on a laptop

It's not a flex, it's a fire hazard

Michael Thomas

January 18, 2026 AT 12:07

USA still leads in real AI work. Europe wastes time on paperwork. Canada thinks they can do it with 1000 examples. You need 5k expert-labeled examples or you're just playing with toys. No exceptions.

Abert Canada

January 19, 2026 AT 05:14

Man I read this while sipping maple syrup in Montreal and it hit different

Our team in Toronto tried SFT on insurance claims and got wrecked by inconsistent prompts

One guy wrote 'What's covered?' another said 'Explain policy coverage' another just typed 'help'

We standardized to 'Explain the coverage for [condition]' and accuracy jumped from 51% to 88%

Turns out the model doesn't care about your creativity

It just wants consistency like a cat that demands food at 7am

Also, 4-bit quantization on an RTX 4070? Yes please

My wallet and my GPU both thank you

Xavier Lévesque

January 21, 2026 AT 01:13

Let me guess - someone's about to comment 'just use RAG instead'

Look, SFT isn't the end-all be-all but it's the only thing that makes your model stop sounding like a drunk chatbot

I watched a model go from 'I think the patient might have diabetes' to 'Symptoms include polydipsia, polyuria, and unexplained weight loss' after SFT

That's not progress, that's a medical breakthrough

And yeah, we still need RLHF later

But first you gotta teach it to not make up facts

Then you can worry about ethics

Thabo mangena

January 22, 2026 AT 04:52

It is with profound respect for the discipline of machine learning that I offer this observation

The assertion that quality supersedes quantity in supervised fine-tuning is not merely empirical

It is a philosophical imperative rooted in the integrity of knowledge representation

When we substitute expert curation with crowd-sourced noise

We do not merely degrade model performance

We compromise the epistemological foundation upon which trustworthy artificial intelligence must be built

Let us not forget that in healthcare, finance, and law

The cost of error is not measured in accuracy metrics

But in human lives and institutional trust

Therefore, the meticulous annotation of data is not an operational task

It is a moral obligation