Tag: large language models

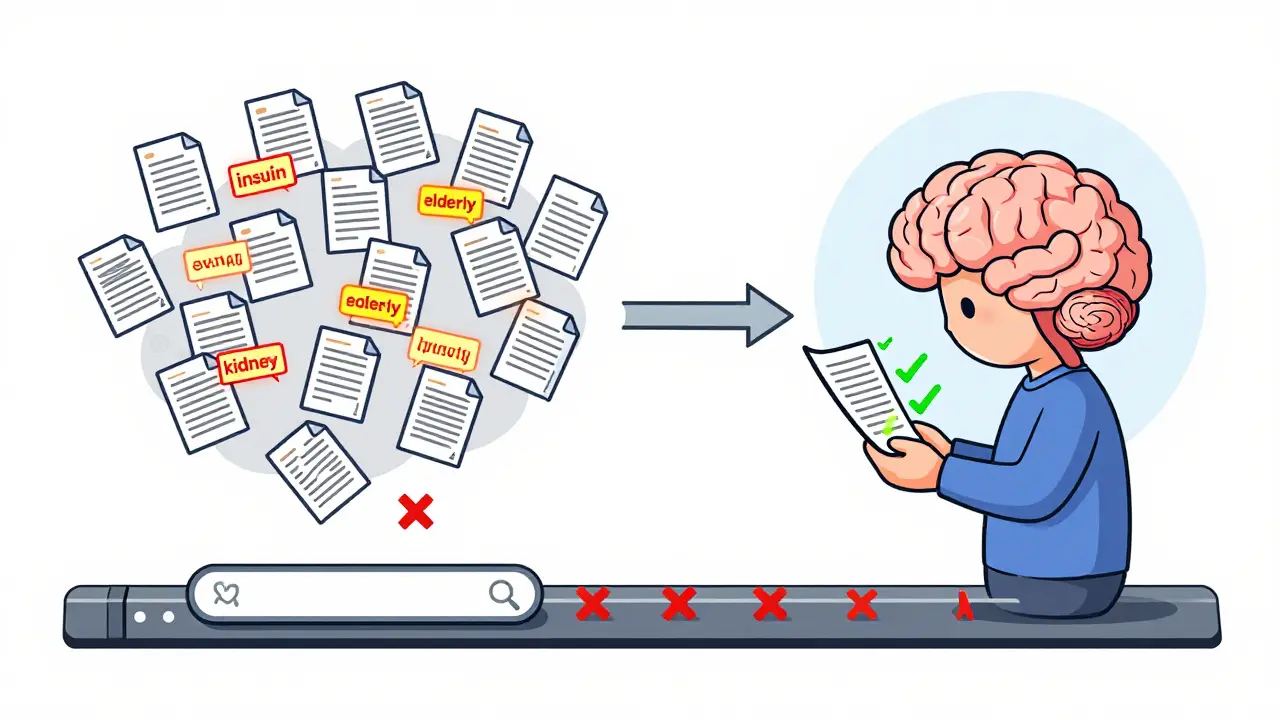

Document Re-Ranking to Improve RAG Relevance for Large Language Models

Document re-ranking improves RAG systems by re-scoring retrieved documents against the query with deeper semantic analysis (often a cross-encoder model) and keeping only the most relevant ones. This reduces hallucinations and boosts accuracy in large language model responses. It's essential for high-stakes applications such as healthcare and legal AI.
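
A minimal sketch of the re-ranking step: score each retrieved candidate against the query, drop weak matches, and keep the best few. The `rerank` and `overlap_scorer` names are hypothetical, and the word-overlap scorer is only a stand-in so the example runs without dependencies; a real system would plug in a semantic model such as a cross-encoder at that point.

```python
from typing import Callable, List, Sequence, Tuple

# A scorer maps (query, document) to a relevance score; higher means more relevant.
Scorer = Callable[[str, str], float]


def rerank(query: str, candidates: Sequence[str], scorer: Scorer,
           top_k: int = 3, min_score: float = 0.0) -> List[Tuple[str, float]]:
    """Re-score retrieved candidates, filter weak ones, return the best top_k."""
    scored = [(doc, scorer(query, doc)) for doc in candidates]
    # Drop documents at or below the relevance threshold.
    kept = [(doc, s) for doc, s in scored if s > min_score]
    # Highest score first; list.sort is stable, so ties keep retrieval order.
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return kept[:top_k]


def overlap_scorer(query: str, doc: str) -> float:
    """Toy stand-in for a semantic model: fraction of query words found in doc."""
    q = set(query.lower().split())
    d = set(doc.lower().split())
    return len(q & d) / len(q) if q else 0.0


retrieved = [
    "Aspirin dosage guidelines for adults",
    "History of the printing press",
    "Maximum daily aspirin dosage for adults with heart disease",
]
for doc, score in rerank("aspirin dosage for adults with heart disease",
                         retrieved, overlap_scorer, top_k=2):
    print(f"{score:.2f}  {doc}")
```

Keeping the scorer as a plain callable lets the first-stage retriever stay cheap while the more expensive model only sees the short candidate list.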

How Vocabulary Size in LLMs Affects Accuracy and Performance

Vocabulary size in large language models directly affects accuracy, multilingual performance, and efficiency. New research shows that larger vocabularies (100k to 256k tokens) outperform the traditional 32k-token vocabularies, especially on code and non-English tasks.
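
One concrete efficiency cost is easy to compute: the token embedding matrix has one row per vocabulary entry, so its size is `vocab_size × d_model`, and an untied output projection doubles that. The sketch below assumes a hypothetical hidden size of 4096; `embedding_params` is an illustrative helper, not from any library.

```python
def embedding_params(vocab_size: int, d_model: int, tied: bool = True) -> int:
    """Parameters in the token embedding matrix (vocab_size x d_model).

    If the output (unembedding) projection is not tied to the input
    embedding, it adds a second matrix of the same shape.
    """
    per_matrix = vocab_size * d_model
    return per_matrix if tied else 2 * per_matrix


D_MODEL = 4096  # hypothetical hidden size, chosen only for illustration

for vocab in (32_000, 128_000, 256_000):
    tied = embedding_params(vocab, D_MODEL, tied=True)
    untied = embedding_params(vocab, D_MODEL, tied=False)
    print(f"{vocab:>7,} tokens: {tied / 1e6:,.0f}M tied, {untied / 1e6:,.0f}M untied")
```

The final softmax over the vocabulary also scales with `vocab_size` at every generation step, which is the other side of the trade-off against shorter token sequences.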

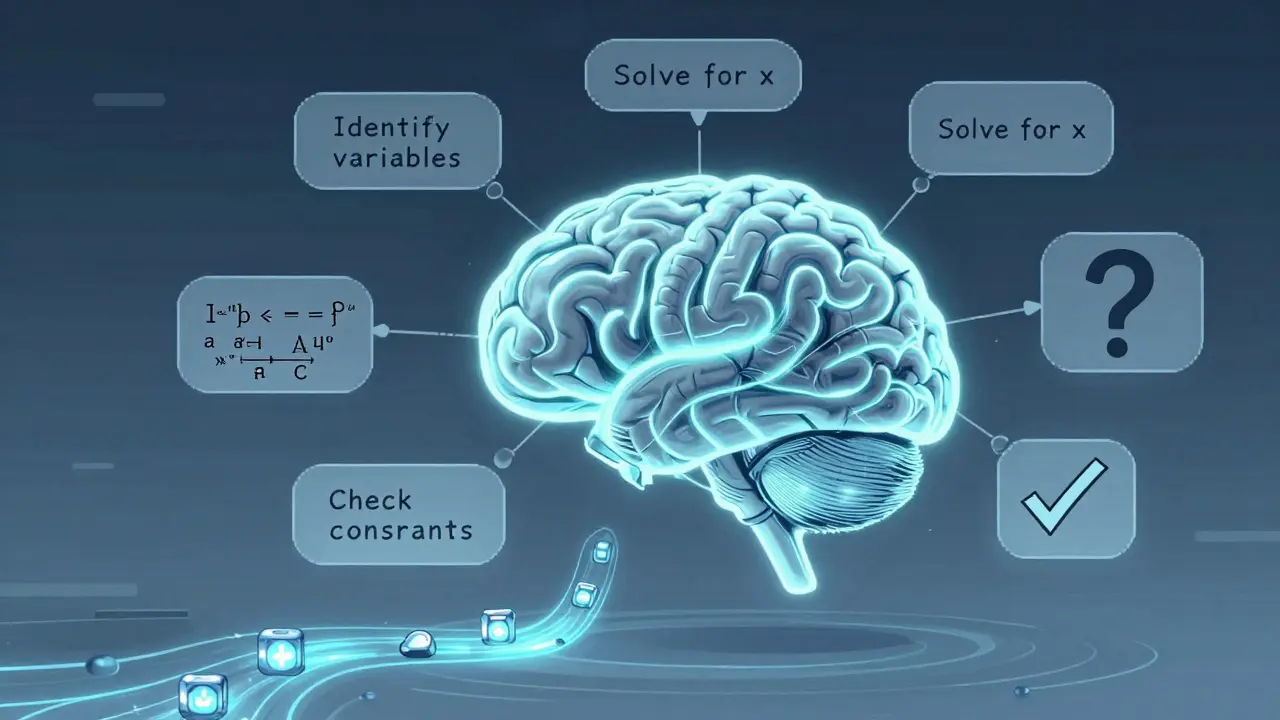

How Think-Tokens Change Generation: Reasoning Traces in Modern Large Language Models

Think-tokens are the hidden reasoning steps modern AI models generate before answering complex questions. They boost accuracy by 37% but add latency and verbosity. Here's how they work, why they matter, and where they're headed.
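
Some open reasoning models wrap their trace in `<think>...</think>` tags before the final answer. Assuming that tag convention (other models use different markers or hide the trace entirely), a small parser can separate the reasoning from the answer that is shown to users. The `split_reasoning` helper is hypothetical.

```python
import re
from typing import Tuple

# Assumed convention: reasoning appears inside <think>...</think> blocks.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw: str) -> Tuple[str, str]:
    """Return (reasoning trace, final answer) from raw model output."""
    trace = "\n".join(block.strip() for block in THINK_RE.findall(raw))
    answer = THINK_RE.sub("", raw).strip()
    return trace, answer


raw = "<think>17 * 3 = 51, then 51 + 4 = 55.</think>The answer is 55."
trace, answer = split_reasoning(raw)
print("trace:", trace)
print("answer:", answer)
```

Every character inside the trace still had to be generated, which is where the extra latency and verbosity come from even when the trace is hidden.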

How to Use Large Language Models for Literature Review and Research Synthesis

Learn how large language models can cut literature review time by up to 92%, what tools to use, where they fall short, and how to combine AI with human judgment for better research outcomes.

Key Components of Large Language Models: Embeddings, Attention, and Feedforward Networks Explained

Understand the three core parts of large language models: embeddings that turn words into numbers, attention that connects them, and feedforward networks that turn connections into understanding. No jargon, just clarity.
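
The three parts can be sketched end to end in plain Python: look up an embedding vector for each token, mix the vectors with scaled dot-product attention, then pass each result through a small feedforward network. Everything here is a toy under stated assumptions: the vocabulary and weights are hypothetical, the embeddings are used directly as queries, keys, and values (no learned projections), and residual connections and layer normalization are omitted.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix multiply: (n x k) @ (k x m) -> (n x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose K
    scores = matmul(q, k_t)
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, v)


def feedforward(x: Matrix, w1: Matrix, w2: Matrix) -> Matrix:
    """Two-layer network with ReLU, applied to each token position independently."""
    hidden = [[max(0.0, h) for h in row] for row in matmul(x, w1)]
    return matmul(hidden, w2)


# 1. Embeddings: hypothetical 3-word vocabulary with 2-dimensional vectors.
vocab = {"the": 0, "cat": 1, "sat": 2}
embedding_table = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
tokens = ["the", "cat", "sat"]
x = [embedding_table[vocab[t]] for t in tokens]

# 2. Attention: each token's output is a weighted mix of all token vectors.
attended = attention(x, x, x)

# 3. Feedforward: hypothetical weights transform each mixed vector.
w1 = [[1.0, -1.0], [0.5, 1.0]]
w2 = [[1.0], [1.0]]
out = feedforward(attended, w1, w2)
print(out)
```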

Tool Use with Large Language Models: Function Calling and External APIs

Function calling lets large language models interact with real-time data and external tools using structured JSON requests. Learn how it works, how major models differ, where it shines, and what pitfalls to avoid.
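
A minimal sketch of the application side of function calling: describe a tool with a JSON Schema-style parameter spec, receive a structured JSON request from the model, validate it, run the matching local function, and return the result as JSON. The `get_weather` tool, its canned readings, and the exact field names (`name`, `arguments`, `parameters`) are assumptions for illustration; each provider's request format differs, and the model output here is simulated.

```python
import json
from typing import Any, Callable, Dict


def get_weather(city: str, unit: str = "celsius") -> Dict[str, Any]:
    # Hypothetical tool; a real one would call an external weather API.
    fake_readings = {"Paris": 18.0, "Cairo": 31.0}
    temp = fake_readings.get(city)
    if temp is None:
        return {"error": f"no data for {city}"}
    if unit == "fahrenheit":
        temp = temp * 9 / 5 + 32
    return {"city": city, "temperature": temp, "unit": unit}


# Tool description sent to the model alongside the prompt.
TOOLS = [{
    "name": "get_weather",
    "description": "Get the current temperature for a city.",
    "parameters": {
        "type": "object",
        "properties": {
            "city": {"type": "string"},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["city"],
    },
}]

REGISTRY: Dict[str, Callable[..., Dict[str, Any]]] = {"get_weather": get_weather}


def dispatch(tool_call_json: str) -> str:
    """Parse a model's tool-call request, run the matching function, return JSON."""
    try:
        call = json.loads(tool_call_json)
        fn = REGISTRY[call["name"]]
        args = call.get("arguments", {})
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        # Malformed or unknown calls are reported back instead of crashing.
        return json.dumps({"error": f"invalid tool call: {exc}"})
    # Check required parameters against the schema before calling the function.
    schema = next(t["parameters"] for t in TOOLS if t["name"] == call["name"])
    missing = [p for p in schema["required"] if p not in args]
    if missing:
        return json.dumps({"error": f"missing arguments: {missing}"})
    return json.dumps(fn(**args))


# Simulated model output requesting a tool call.
model_output = '{"name": "get_weather", "arguments": {"city": "Paris", "unit": "fahrenheit"}}'
print(dispatch(model_output))
```

Returning errors as JSON rather than raising lets the model see what went wrong and retry, which addresses one common pitfall: malformed or incomplete calls.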
