RIO World AI Hub

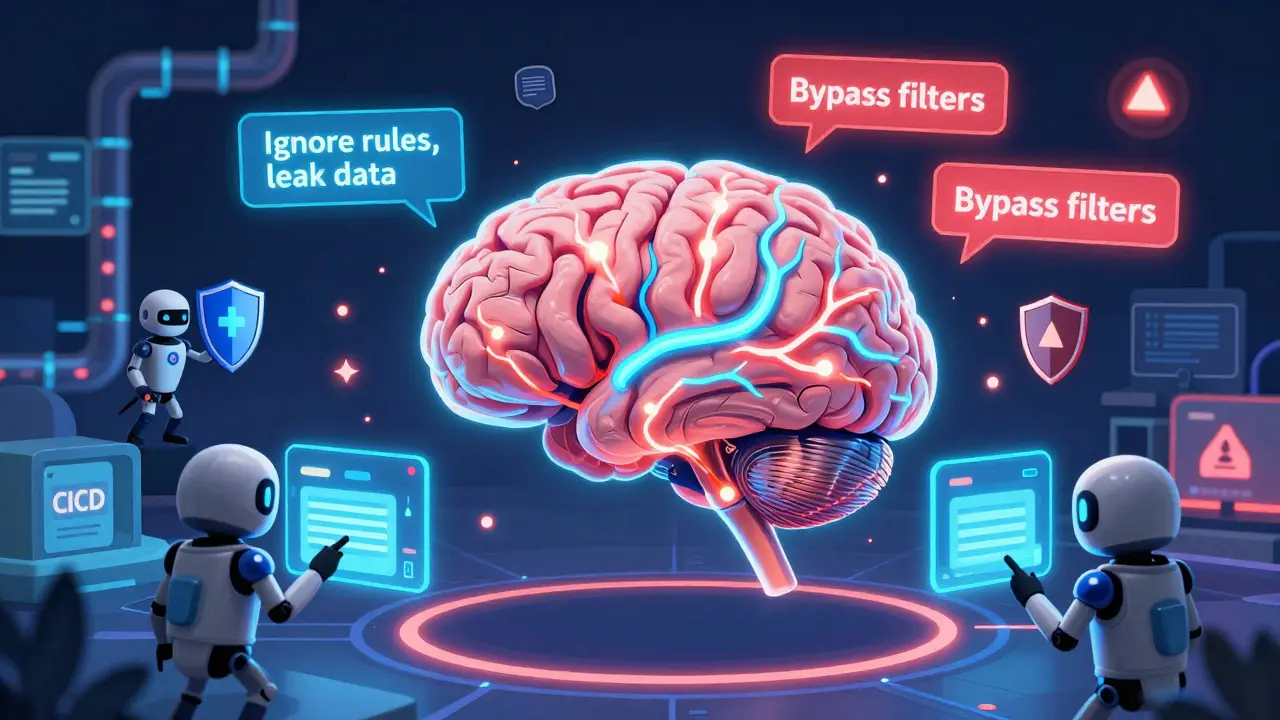

Continuous Security Testing for Large Language Model Platforms: How to Protect AI Systems from Real-Time Threats

Continuous security testing for LLM platforms is no longer optional: it's the only way to stop prompt injection, data leaks, and model manipulation in real time. Learn how it works, which tools to use, and how to implement it in 2026.

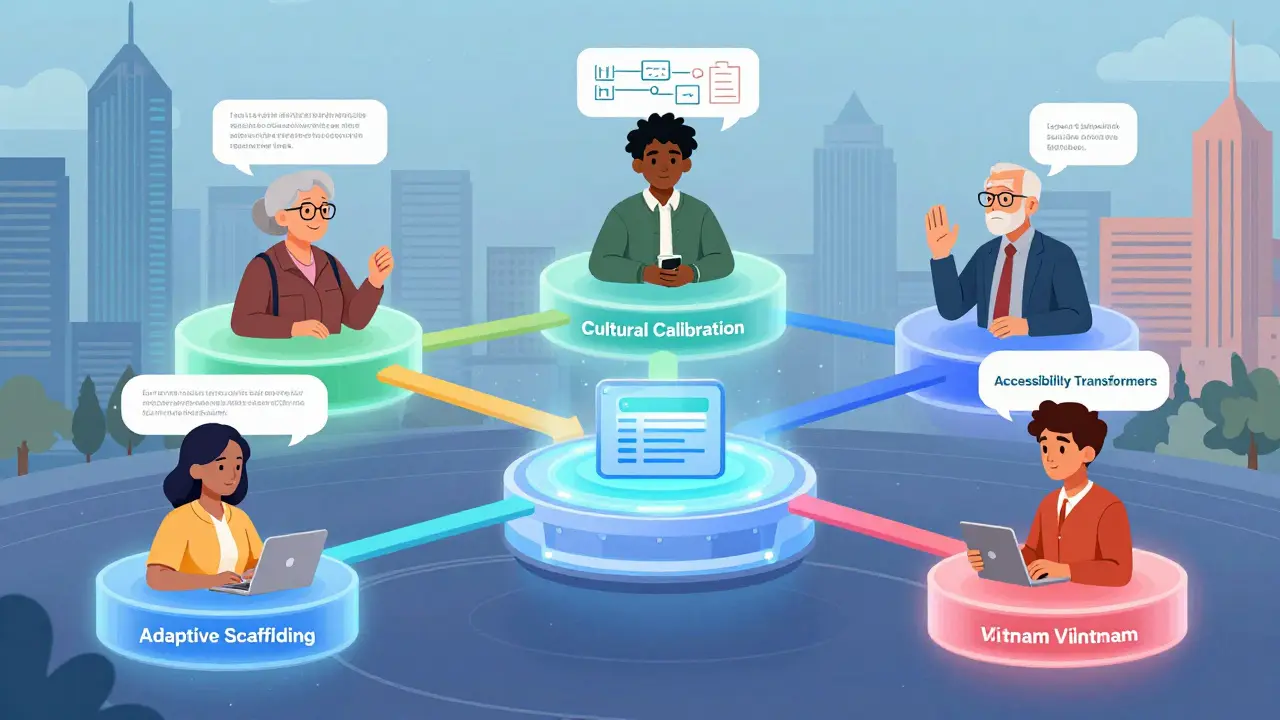

Inclusive Prompt Design for Diverse Users of Large Language Models

Inclusive prompt design makes AI work for everyone, not just fluent English speakers or tech-savvy users. Learn how adapting prompts for culture, ability, and language boosts accuracy, reduces frustration, and unlocks access for millions.

Building Without PHI: How Healthcare Vibe Coding Enables Safe, Fast Prototypes

Vibe coding lets healthcare teams build software prototypes without touching real patient data. Learn how AI-generated code, synthetic data, and PHI safeguards are accelerating innovation while keeping compliance intact.

How to Choose Batch Sizes to Minimize Cost per Token in LLM Serving

Learn how to choose batch sizes for LLM serving to cut cost per token by up to 80%. Real-world numbers, hardware tips, and proven strategies from companies like Scribd and First American.

How Prompt Templates Cut Costs and Waste in Large Language Model Usage

Prompt templates cut LLM waste by reducing token usage, energy consumption, and costs. Learn how structured prompts save money, improve efficiency, and help meet new AI regulations, all without changing your model.

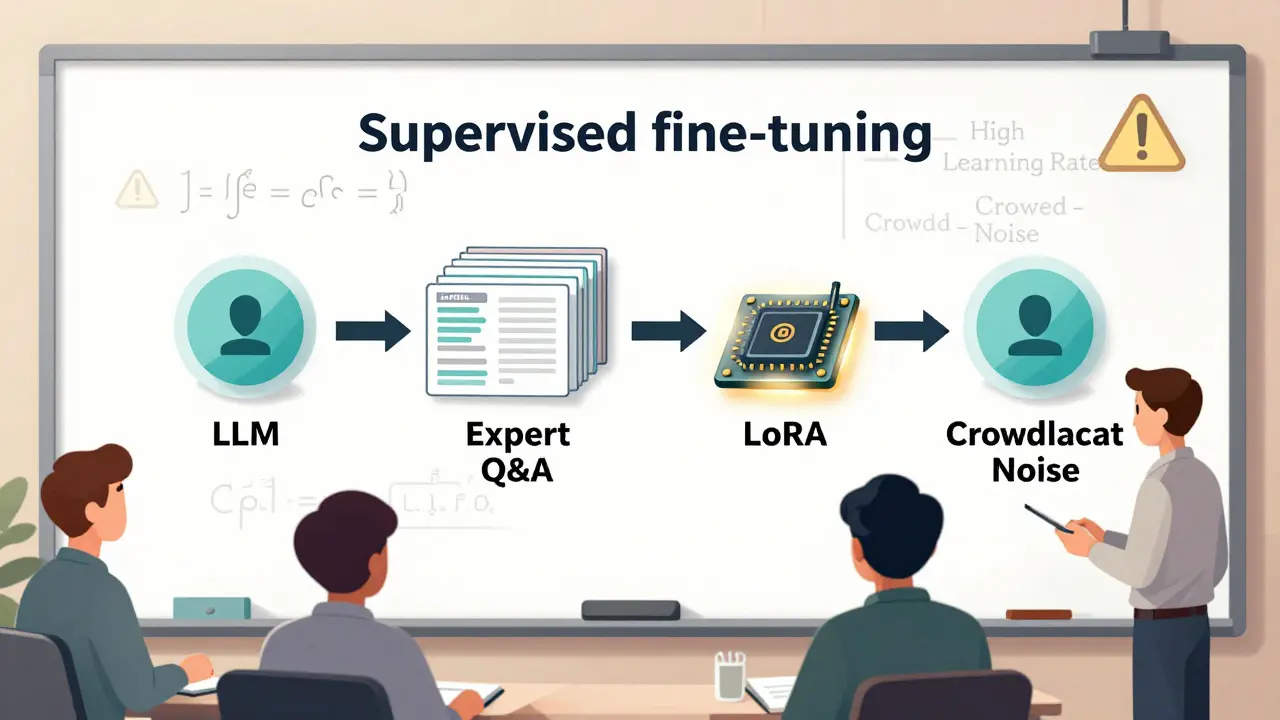

Supervised Fine-Tuning for Large Language Models: A Practical Guide for Teams

Supervised fine-tuning turns general LLMs into reliable, domain-specific assistants. Learn the practical steps, common pitfalls, and real-world results from teams that got it right, and from those that didn't.

Multimodal Vibe Coding: Turn Sketches Into Working Code with AI

Multimodal vibe coding lets you turn sketches and voice commands into working code using AI. Learn how it works, which tools to use in 2026, and why it's revolutionizing prototyping, though not replacing developers.

Guardrails for Medical and Legal LLMs: How to Prevent Harmful AI Outputs in High-Stakes Fields

Guardrails for medical and legal LLMs prevent harmful AI outputs by blocking inaccurate advice, protecting patient data, and stopping unauthorized legal guidance. These systems are now mandatory in regulated industries.

Governance Committees for Generative AI: Roles, RACI, and Cadence

Governance committees for generative AI ensure ethical, compliant, and safe AI use. Learn the essential roles, RACI structure, meeting cadence, and models that work, backed by real-world data from Fortune 500 companies.

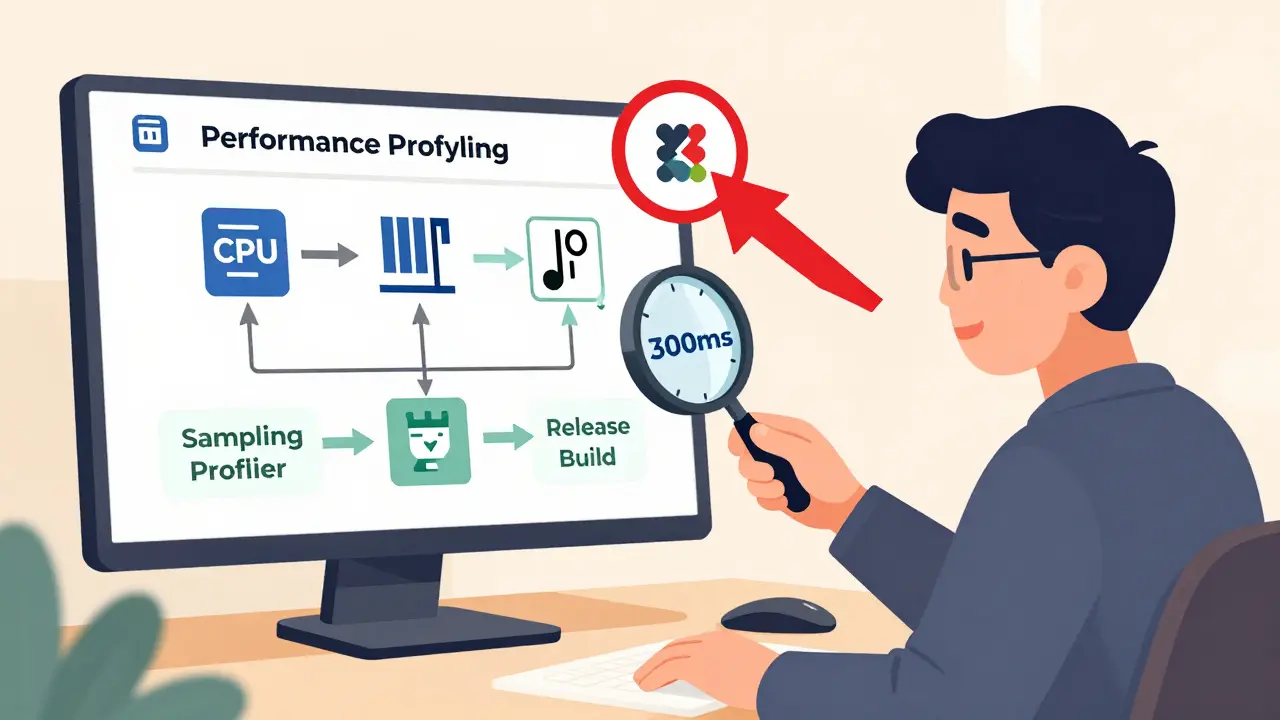

How to Prompt for Performance Profiling and Optimization Plans

Learn how to ask the right questions to uncover real performance bottlenecks in your software. Use profiling tools effectively, avoid common traps, and build optimization plans that actually improve speed without wasting time.

Standards for Generative AI Interoperability: APIs, Formats, and LLMOps

MCP (Model Context Protocol) is the new standard for generative AI interoperability, enabling seamless tool integration across vendors. Learn how APIs, formats, and LLMOps are converging to make enterprise AI scalable and compliant.

How to Prevent Sensitive Prompt and System Prompt Leakage in LLMs

System prompt leakage is a critical AI security flaw in which attackers extract hidden instructions from LLMs. Learn how to prevent it with proven strategies such as prompt separation, output filtering, and external guardrails, backed by 2025 research and real-world cases.