Archive: 2026/01

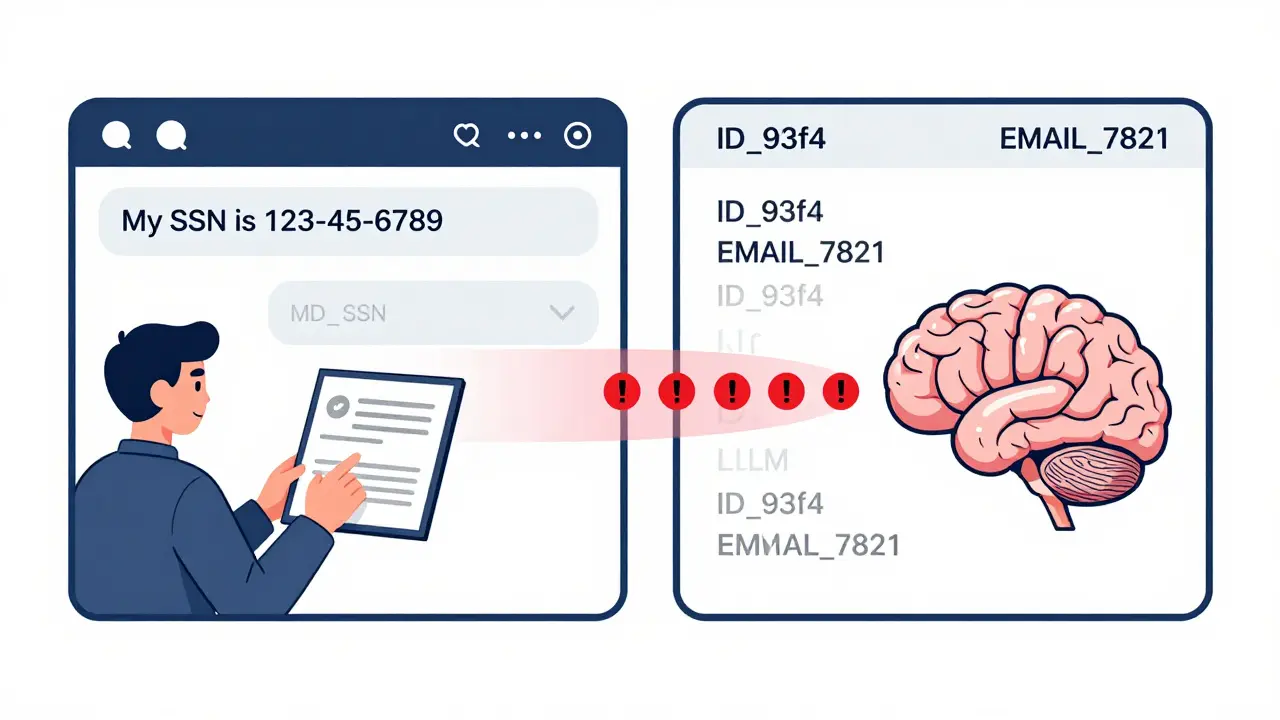

Token-Level Logging Minimization: How to Protect Privacy in LLM Systems Without Killing Performance

Token-level logging minimization stops sensitive data from being stored in LLM logs by replacing PII with anonymous tokens. Learn how it works, why it's required by GDPR and the EU AI Act, and how to implement it without killing performance.

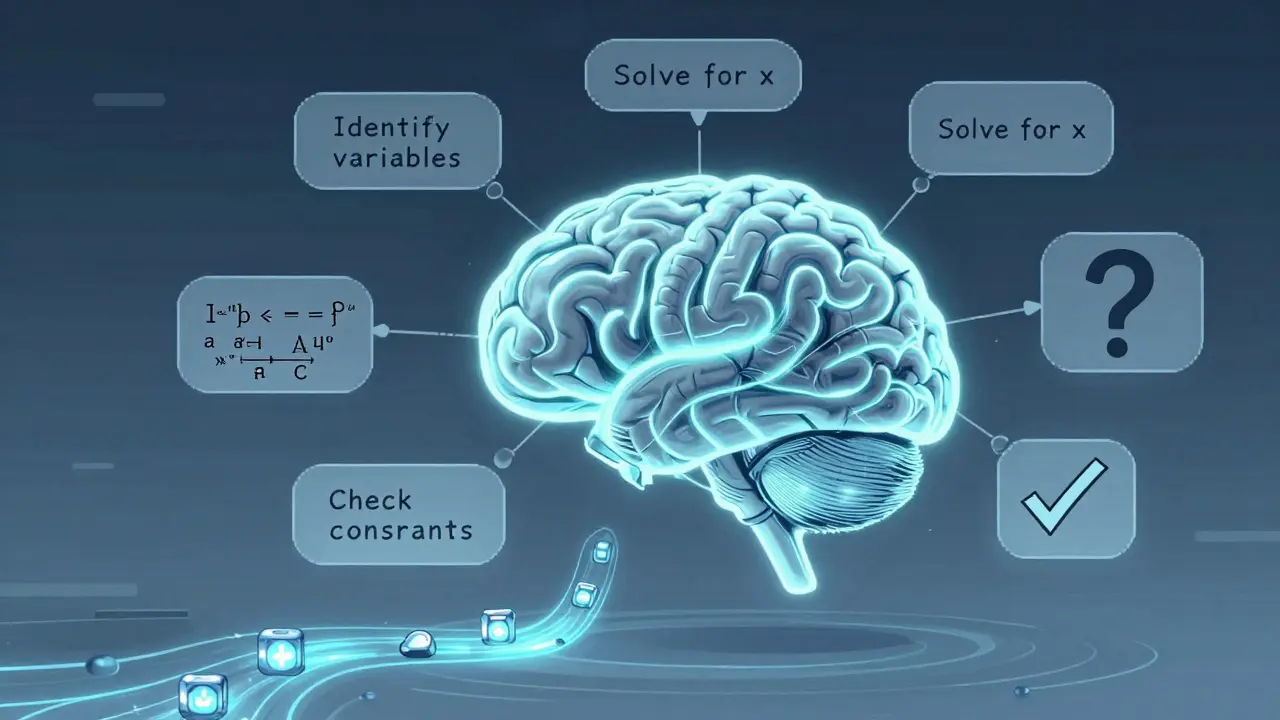

How Think-Tokens Change Generation: Reasoning Traces in Modern Large Language Models

Think-tokens are the hidden reasoning steps modern AI models generate before answering complex questions. They boost accuracy by 37% but add latency and verbosity. Here's how they work, why they matter, and where they're headed.

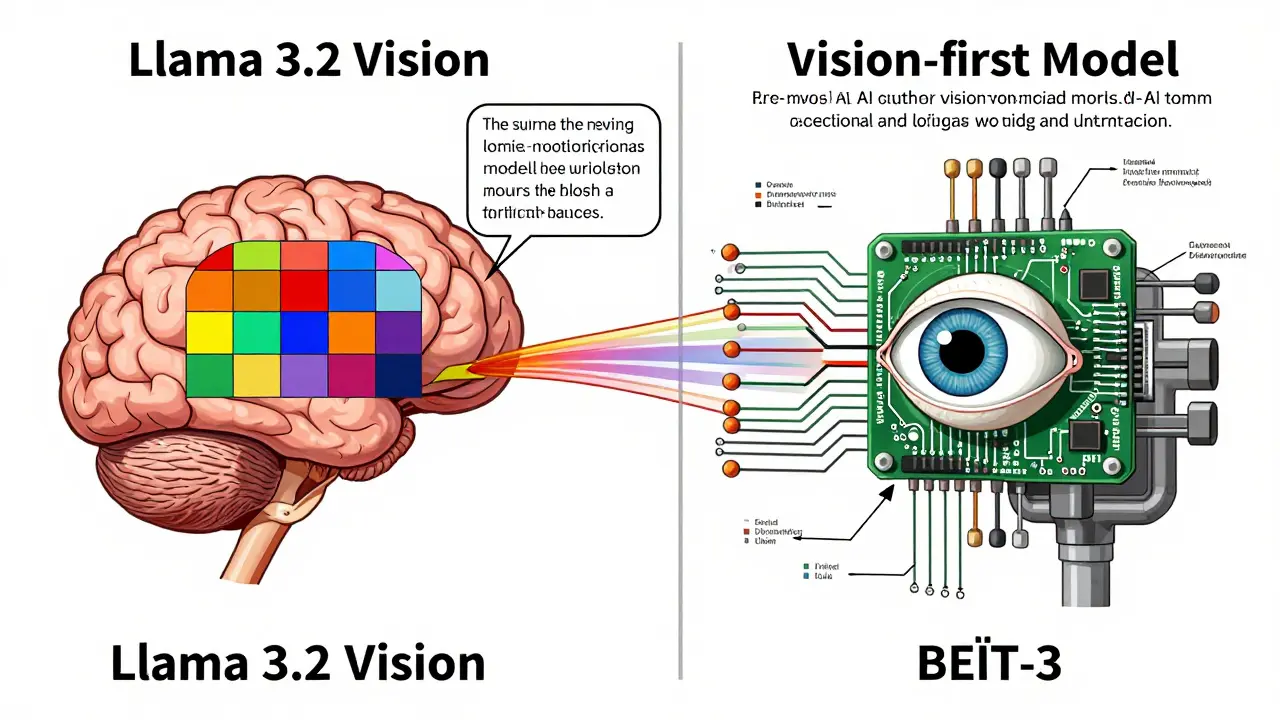

Vision-First vs Text-First Pretraining: Which Path Leads to Better Multimodal LLMs?

Vision-first and text-first pretraining offer two paths to multimodal AI. Text-first dominates industry use for its speed and compatibility; vision-first leads in research for deeper visual understanding. The future belongs to hybrids that combine both.

How to Use Large Language Models for Literature Review and Research Synthesis

Learn how large language models can cut literature review time by up to 92%, what tools to use, where they fall short, and how to combine AI with human judgment for better research outcomes.

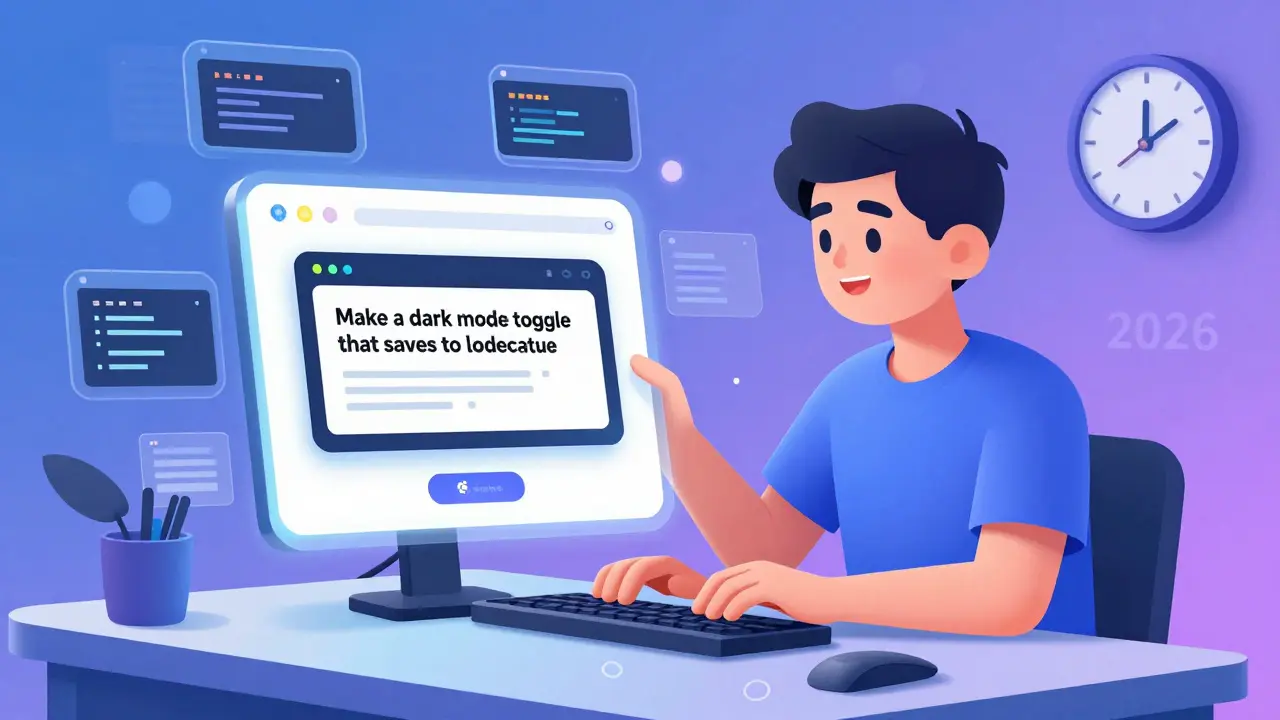

Talent Strategy in the Age of Vibe Coding: Roles You Actually Need

Vibe coding is changing how software is built. In 2026, you don't need more coders; you need prompt engineers, hybrid debuggers, and transition specialists who can turn AI-generated prototypes into real products. Here's which roles actually matter now.

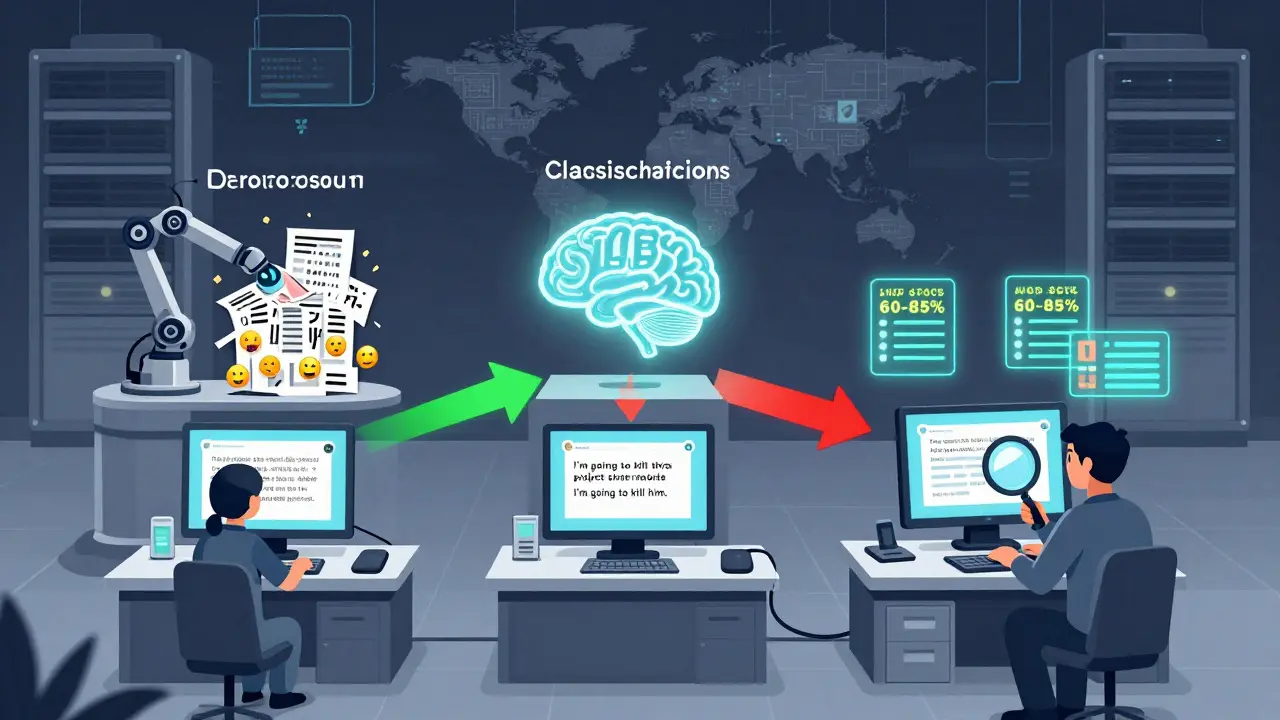

Content Moderation Pipelines for User-Generated Inputs to LLMs: How to Block Harmful Content Without Breaking Trust

Learn how modern AI systems filter harmful user inputs before they reach LLMs using layered pipelines, policy-as-prompt techniques, and hybrid NLP+LLM strategies that balance safety, cost, and fairness.

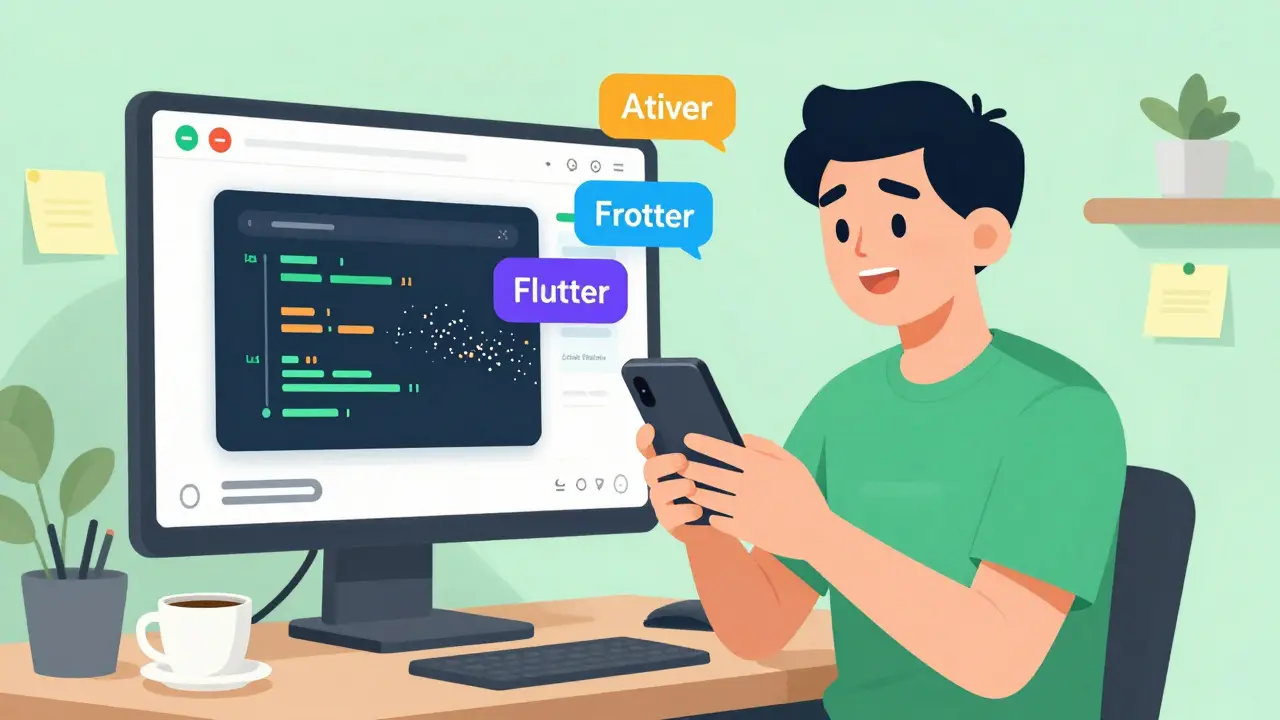

Rapid Mobile App Prototyping with Vibe Coding and Cross-Platform Frameworks

Vibe coding lets you create mobile app prototypes in hours using AI prompts instead of writing code. Learn how to use it with React Native and Flutter, why 92% of prototypes need rewriting, and how to avoid costly mistakes.

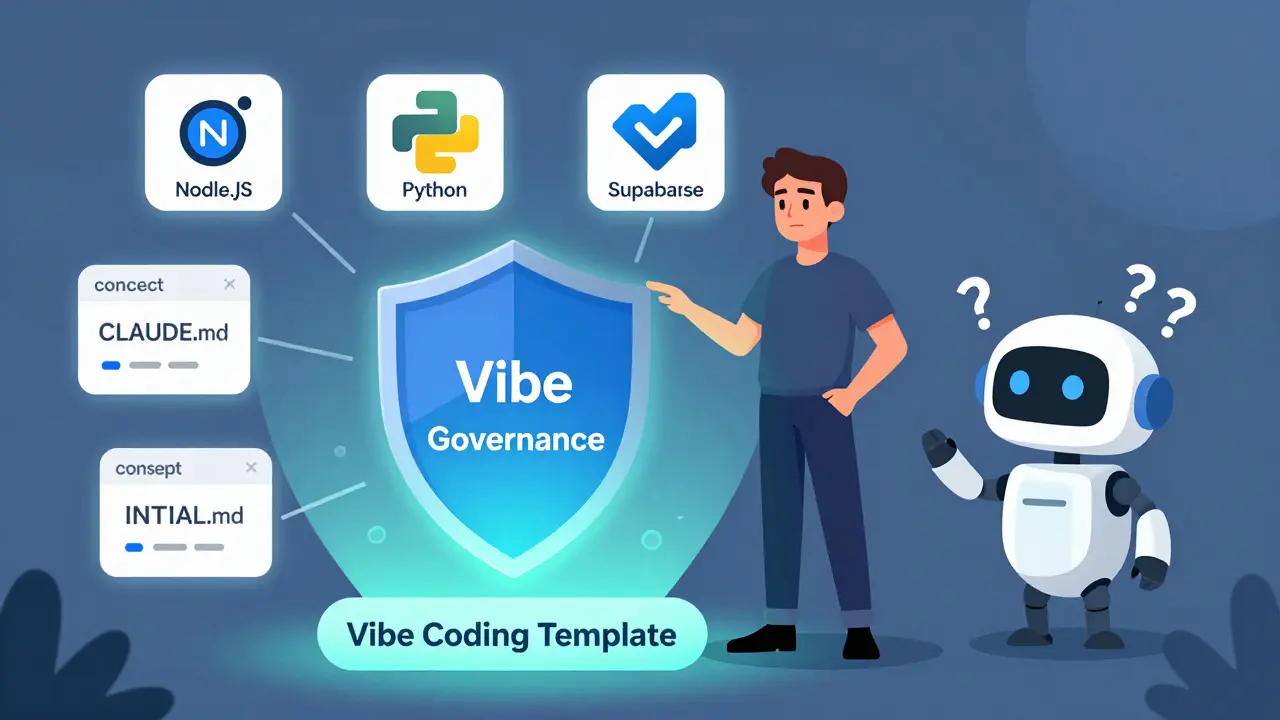

Template Repos with Pre-Approved Dependencies for Vibe Coding: Governance Best Practices

Vibe coding templates with pre-approved dependencies are governance tools that standardize AI-assisted development. They reduce risk, enforce best practices, and cut development time by locking in trusted tools and context rules.

Enterprise Integration of Vibe Coding: Embedding AI into Existing Toolchains

Enterprise vibe coding embeds AI directly into development toolchains, cutting internal tool build times by up to 73% while enforcing security and compliance. Learn how it works, where it succeeds, and how to avoid common pitfalls.

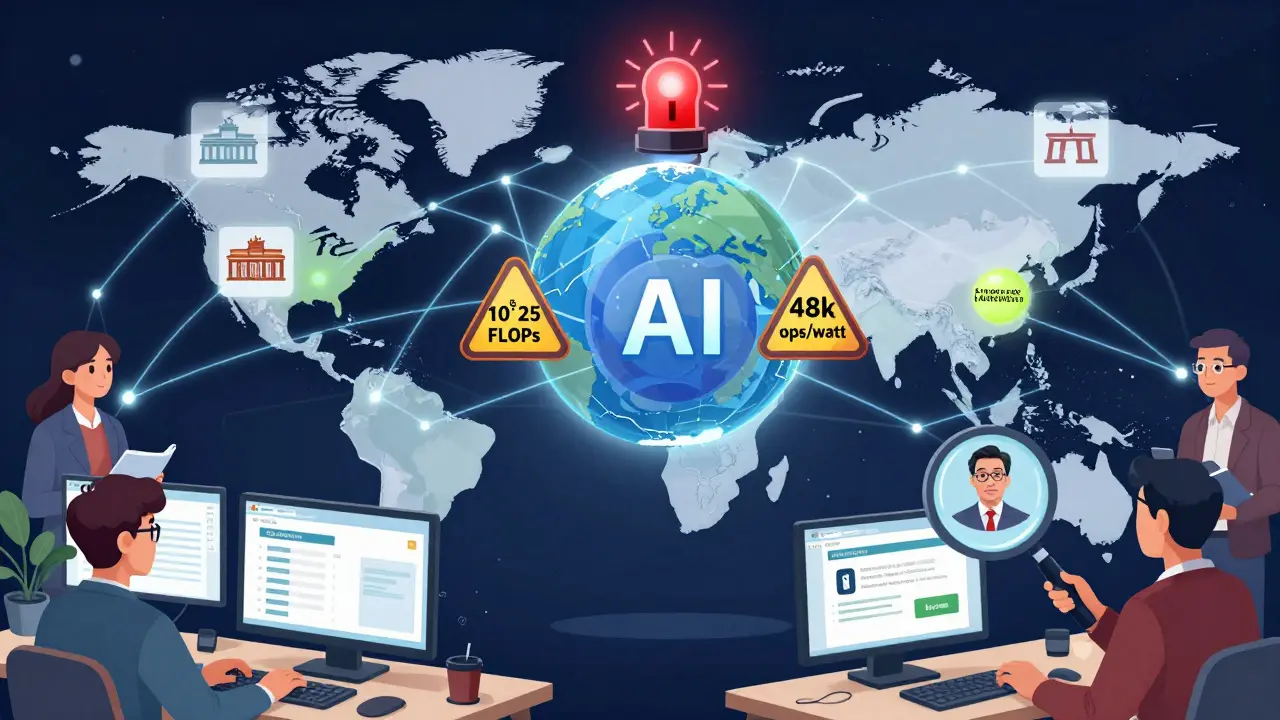

Export Controls and AI Model Use: Compliance Guide for Global Teams

Global teams using AI models must navigate complex export controls that can block shipments, trigger fines, or shut down markets. This guide breaks down the 2025 U.S. and EU rules, how to avoid hidden traps like deemed exports, and how automation and training turn compliance into a competitive advantage.

Continuous Security Testing for Large Language Model Platforms: How to Protect AI Systems from Real-Time Threats

Continuous security testing for LLM platforms is no longer optional; it's the only way to stop prompt injection, data leaks, and model manipulation in real time. Learn how it works, which tools to use, and how to implement it in 2026.

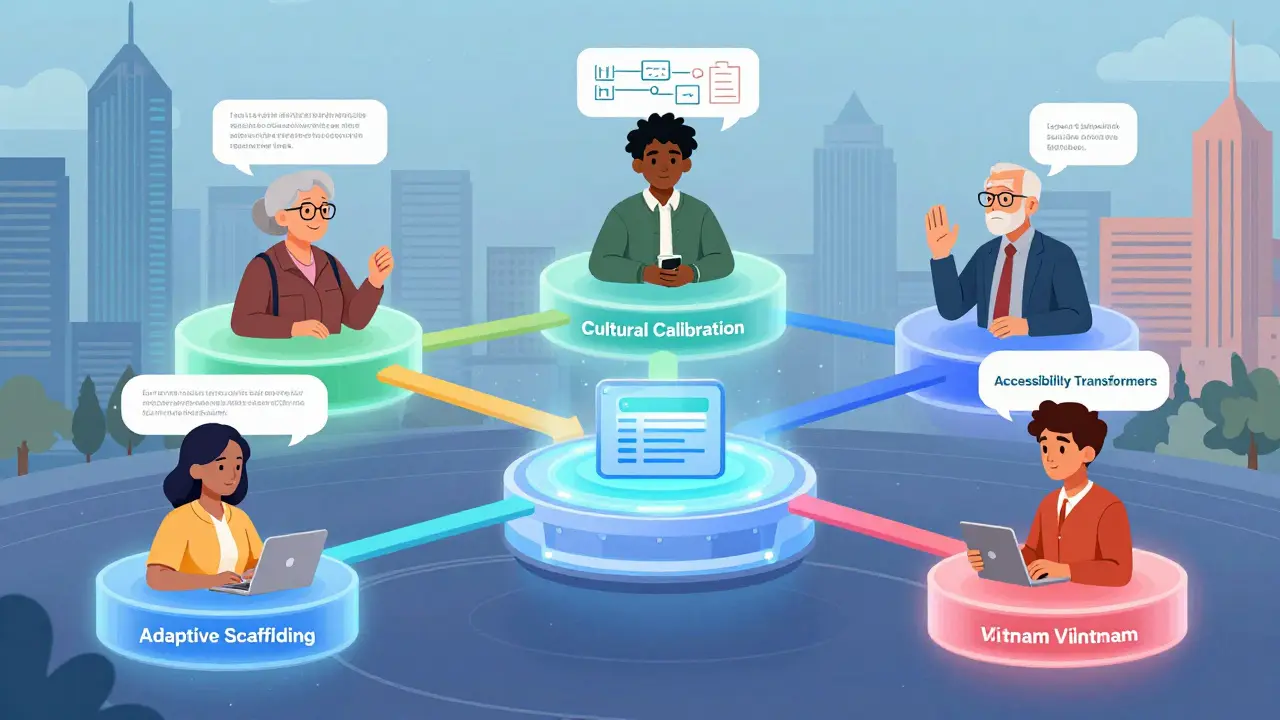

Inclusive Prompt Design for Diverse Users of Large Language Models

Inclusive prompt design makes AI work for everyone, not just fluent English speakers or tech-savvy users. Learn how adapting prompts for culture, ability, and language boosts accuracy, reduces frustration, and unlocks access for millions.