Archive: 2026/02

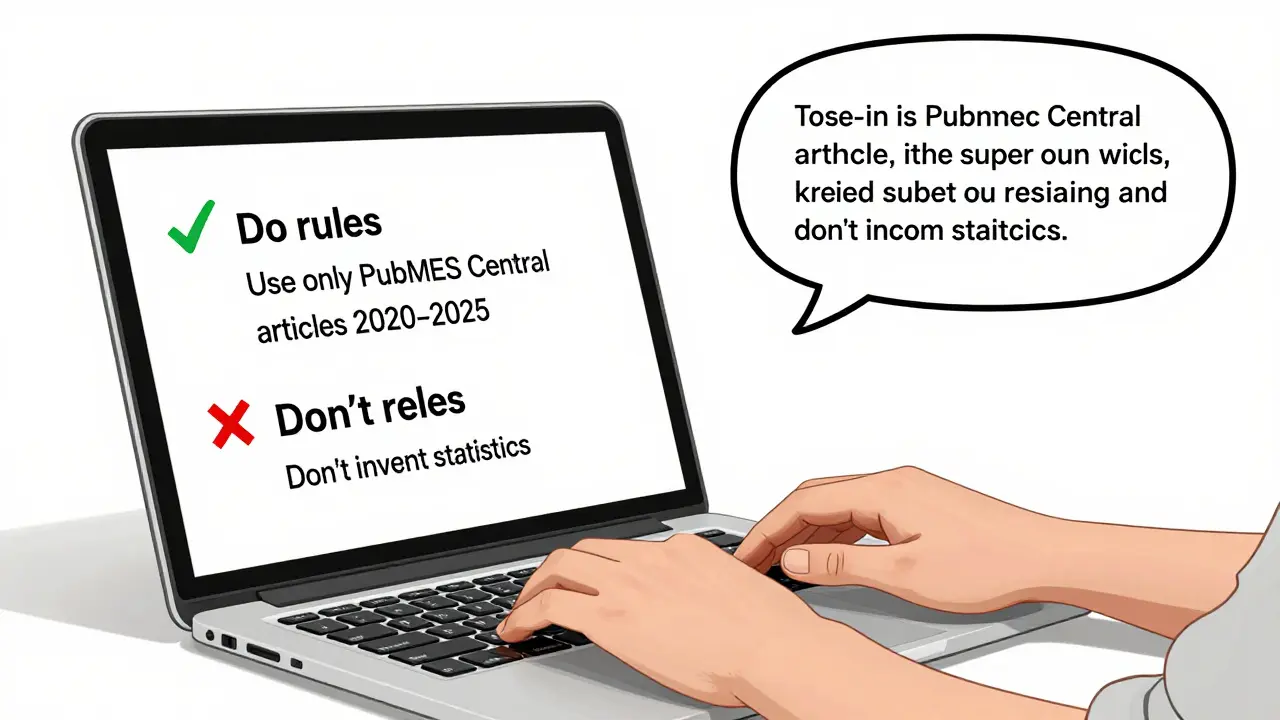

How to Prompt for Accuracy in Generative AI: Constraints, Quotes, and Extractive Answers

Learn how to use constraints, role prompts, and extractive techniques to reduce AI hallucinations and get accurate, reliable answers from generative AI tools. No fluff - just practical methods backed by real research.

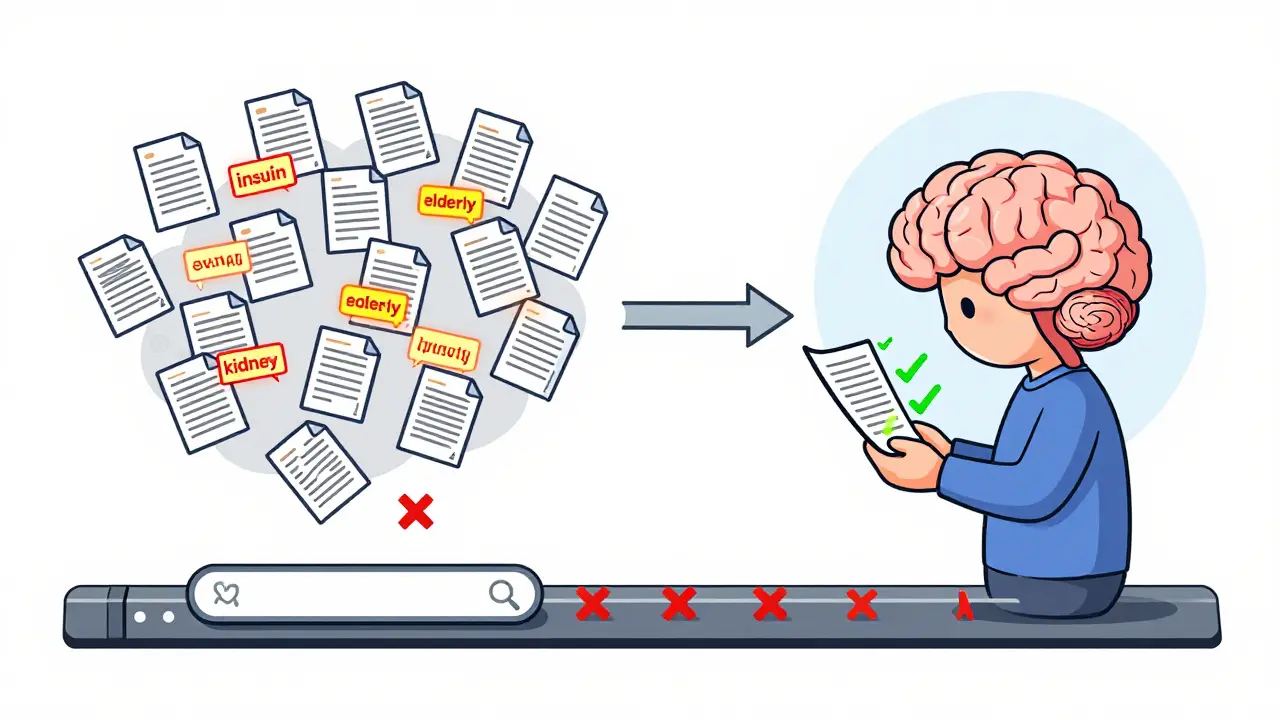

Document Re-Ranking to Improve RAG Relevance for Large Language Models

Document re-ranking improves RAG systems by filtering retrieved documents with deep semantic analysis, reducing hallucinations and boosting accuracy in large language model responses. It's essential for high-stakes applications like healthcare and legal AI.

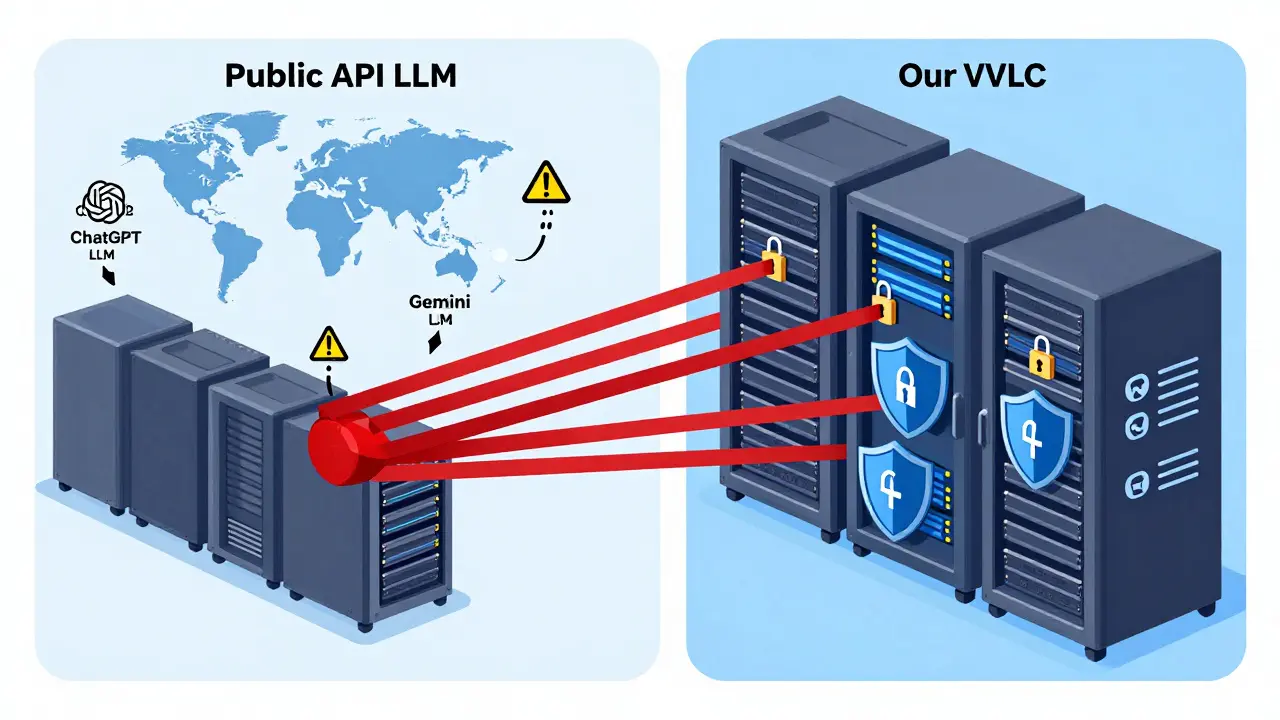

Security Posture Differences: API LLMs vs Private Large Language Models

Public API LLMs like ChatGPT expose your data to third parties, risking compliance and IP theft. Private LLMs keep data inside your cloud, giving you control, audit trails, and regulatory compliance. For regulated industries, the choice isn't optional.

Infrastructure Requirements for Serving Large Language Models in Production

Serving large language models in production requires specialized hardware, smart software, and careful cost planning. This guide breaks down what you actually need - from VRAM and GPUs to quantization and scaling - to run LLMs reliably at scale.

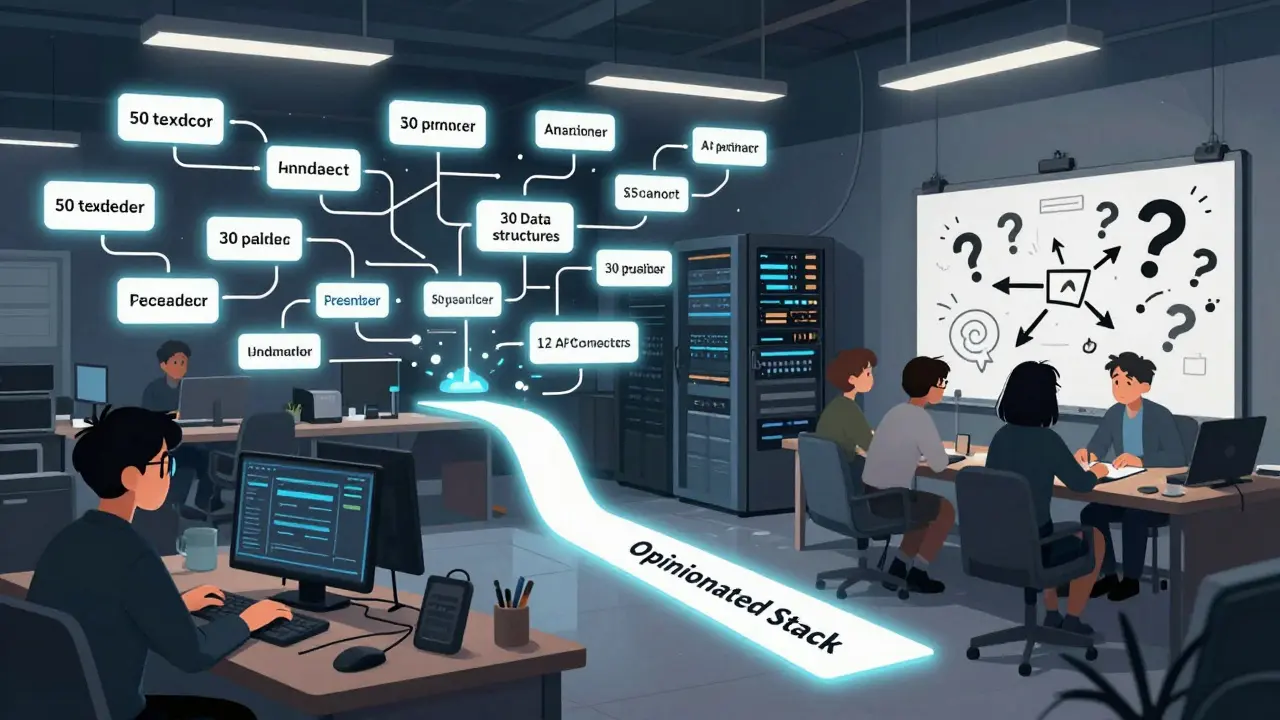

Choosing Opinionated AI Frameworks: Why Constraints Boost Results

Opinionated AI stacks cut through complexity by enforcing clear workflows, not endless options. Data shows they deliver faster results, higher user satisfaction, and lower costs - if chosen wisely.

Enterprise RAG Architecture for Generative AI: Connectors, Indices, and Caching

Enterprise RAG architecture combines data connectors, hybrid indices, and intelligent caching to deliver fast, accurate, and scalable generative AI for corporate use. Learn how to connect live data, build efficient search indexes, and cut latency by 80% with semantic caching.

Why Large Language Models Hallucinate: Probabilistic Text Generation in Practice

Large language models hallucinate because they predict text based on patterns, not facts. This article explains why probabilistic generation leads to convincing lies - and how businesses are fixing it.

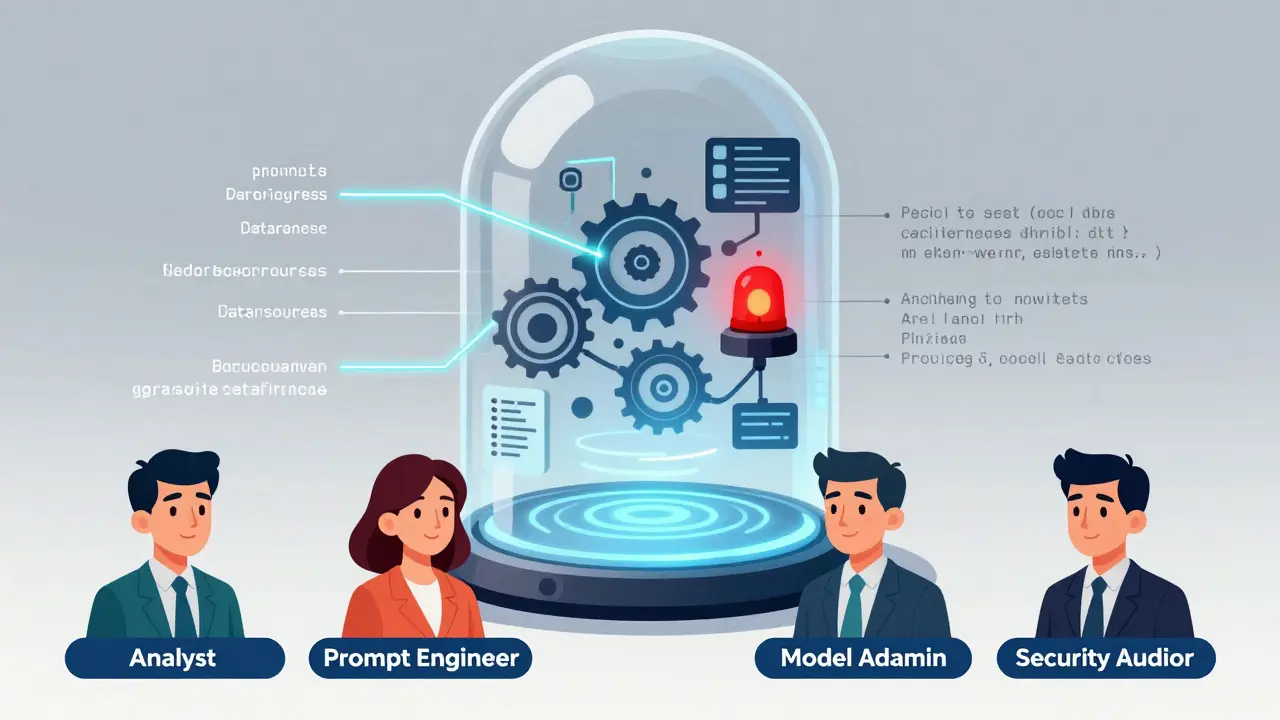

Access Controls and Audit Trails for Sensitive LLM Interactions

Access controls and audit trails are critical for securing sensitive LLM interactions. Without them, organizations risk data leaks, regulatory fines, and loss of trust. Learn how to implement them effectively in 2026.

Estimating Inference Demand to Guide LLM Training Decisions

Accurately forecasting LLM inference demand helps teams decide which models to train, how much infrastructure to buy, and when to scale. This guide breaks down the methods, tools, and real-world impact of demand-driven training decisions.

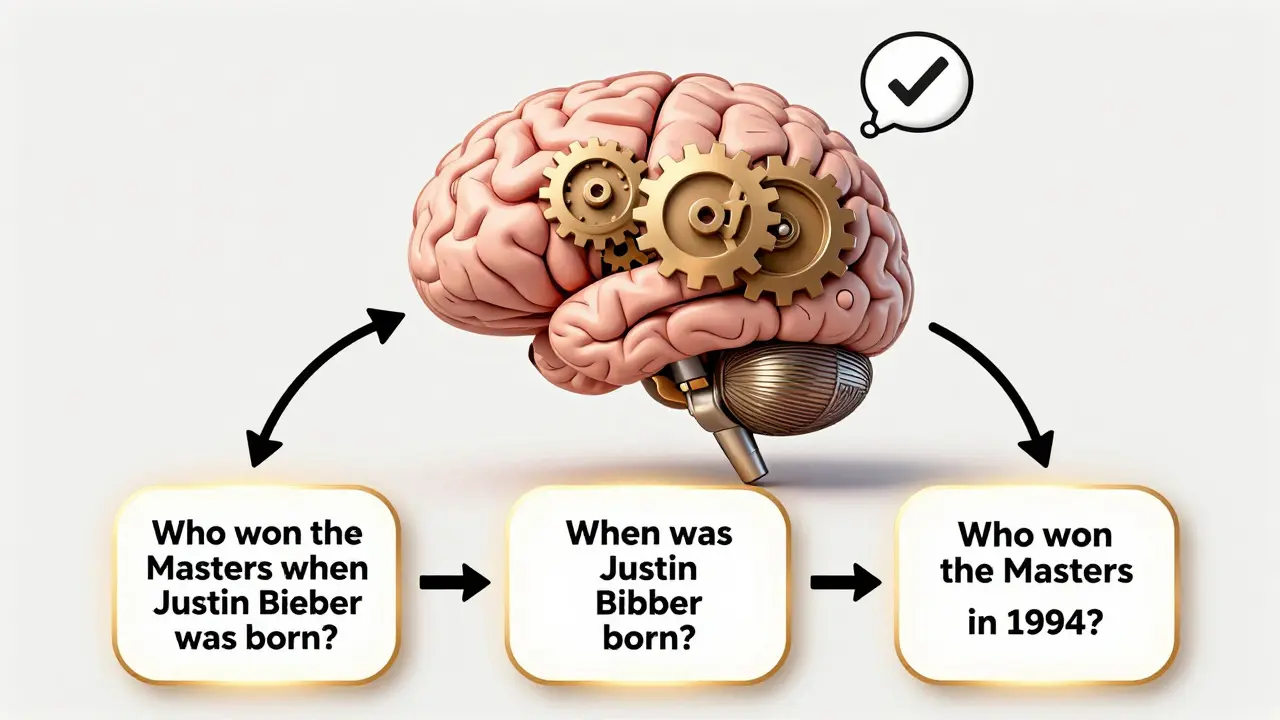

Self-Ask and Decomposition Prompts for Complex LLM Questions

Self-Ask and Decomposition Prompts improve LLM accuracy on complex questions by breaking them into clear, verifiable steps. Used in legal, medical, and financial AI systems, they boost reasoning accuracy by over 13%, but come with higher costs and latency.

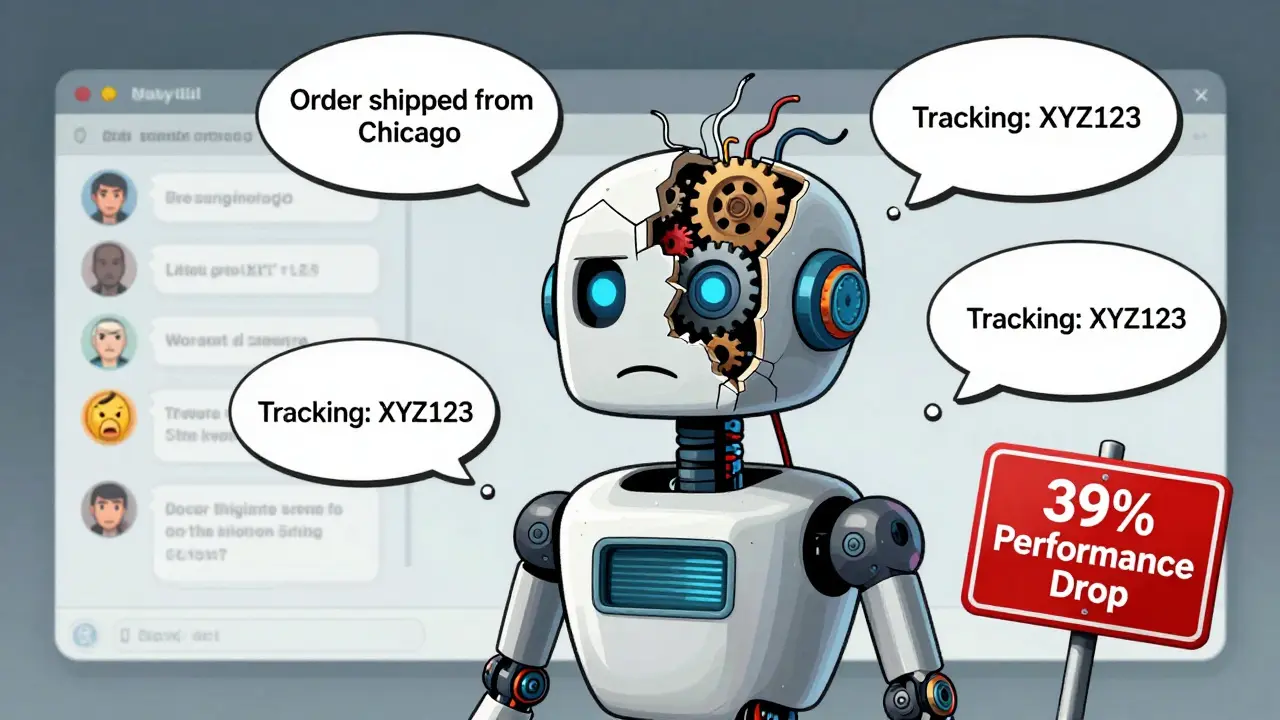

Multi-Turn Conversations with Large Language Models: Managing Conversation State

LLMs lose track in multi-turn conversations, causing 39% performance drops. Learn how loss masking, context summarization, and frameworks like Review-Instruct fix this, and why state management is now critical for real-world AI.

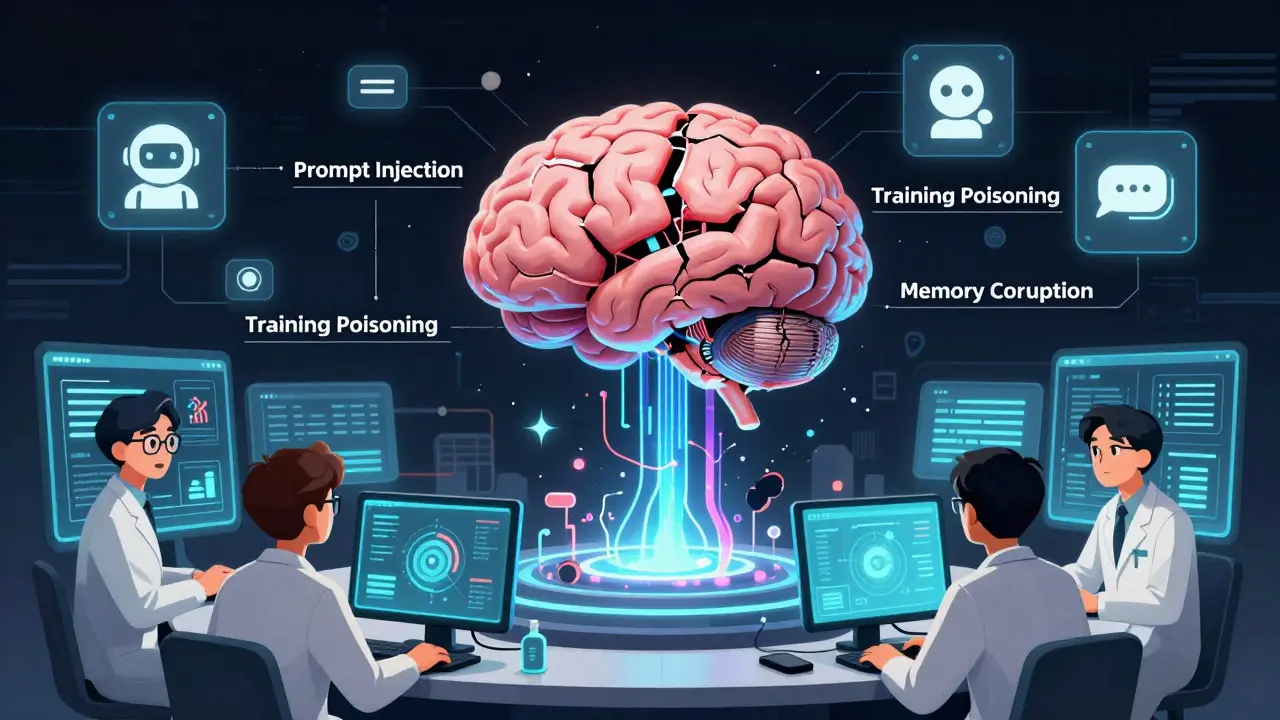

Incident Response for AI-Introduced Defects and Vulnerabilities

AI introduces unique security risks like prompt injection and data poisoning that traditional incident response can't handle. Learn how to build a specialized response plan using the CoSAI framework and AI-specific monitoring.
